The arithmetic mean of two numbers a and b is (a + b)/2.

The geometric mean of a and b is √(ab).

The harmonic mean of a and b is 2/(1/a + 1/b).
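
As a quick numerical check of these three definitions, here is a minimal Python sketch. The helper name `two_number_means` and the example values a = 4, b = 9 are illustrative choices, not part of the original post.

```python
import math

def two_number_means(a: float, b: float) -> tuple[float, float, float]:
    """Return the (arithmetic, geometric, harmonic) means of positive a and b."""
    arithmetic = (a + b) / 2
    geometric = math.sqrt(a * b)
    harmonic = 2 / (1 / a + 1 / b)
    return arithmetic, geometric, harmonic

am, gm, hm = two_number_means(4, 9)
print(f"AM = {am}, GM = {gm}, HM = {hm:.4f}")  # AM = 6.5, GM = 6.0, HM = 5.5385
```

The harmonic mean here is exactly 72/13, so the three means come out in the order harmonic ≤ geometric ≤ arithmetic, which anticipates the inequality discussed below.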

This post will generalize these definitions of means and state a general inequality relating the generalized means.

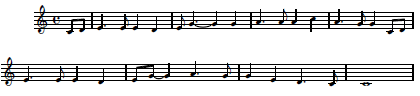

Let $x = (x_1, x_2, x_3, \ldots, x_n)$ be a vector of non-negative real numbers. Define $M_r(x)$ to be

$$M_r(x) = \left( \frac{1}{n} \sum_{i=1}^{n} x_i^r \right)^{1/r}$$

unless $r = 0$, or $r$ is negative and one of the $x_i$ is zero. If $r = 0$, define $M_0(x)$ to be the limit of $M_r(x)$ as $r$ decreases to 0; this limit is the geometric mean $(x_1 x_2 \cdots x_n)^{1/n}$. And if $r$ is negative and one of the $x_i$ is zero, define $M_r(x)$ to be zero. The arithmetic, geometric, and harmonic means correspond to $M_1$, $M_0$, and $M_{-1}$ respectively.
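
The definition translates directly into code. This is a minimal sketch for finite r, assuming a non-empty list of non-negative numbers; the name `power_mean` is hypothetical. For r = 0 it computes the geometric mean directly (as the exponential of the average logarithm) instead of evaluating the limit numerically.

```python
import math

def power_mean(x: list[float], r: float) -> float:
    """Generalized mean M_r(x) of non-negative numbers for finite r,
    following the fine print in the definition above."""
    if not x or any(xi < 0 for xi in x):
        raise ValueError("x must be a non-empty list of non-negative numbers")
    n = len(x)
    has_zero = any(xi == 0 for xi in x)
    if r == 0:
        # The r -> 0 limit is the geometric mean, which is 0 if any entry is 0.
        if has_zero:
            return 0.0
        return math.exp(sum(math.log(xi) for xi in x) / n)
    if r < 0 and has_zero:
        # Negative r with a zero entry: defined to be zero.
        return 0.0
    return (sum(xi ** r for xi in x) / n) ** (1 / r)

x = [1, 2, 4]
for r in (1, 0, -1):
    print(r, power_mean(x, r))
```

For x = (1, 2, 4) this gives 7/3, 2, and 12/7 (up to floating-point rounding), the arithmetic, geometric, and harmonic means. Evaluating with a tiny r such as 1e-6 gives a value close to 2, consistent with the limit definition of $M_0$.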

Define $M_\infty(x)$ to be the limit of $M_r(x)$ as $r \to \infty$. Similarly, define $M_{-\infty}(x)$ to be the limit of $M_r(x)$ as $r \to -\infty$. Then $M_\infty(x) = \max(x_1, x_2, \ldots, x_n)$ and $M_{-\infty}(x) = \min(x_1, x_2, \ldots, x_n)$.
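
To watch these limits numerically without overflow, one option is to factor out the largest entry for r > 0 (or the smallest for r < 0), using $M_r(x) = c \, M_r(x/c)$. With $c = \max(x)$ and r > 0, every term $(x_i/c)^r$ lies in (0, 1] and one of them equals 1, so $\max(x) \, n^{-1/r} \le M_r(x) \le \max(x)$, which also shows why the limit is the maximum. The sketch below assumes strictly positive entries and nonzero finite r; `power_mean_scaled` is a hypothetical name.

```python
def power_mean_scaled(x: list[float], r: float) -> float:
    """M_r(x) for positive x and nonzero finite r, computed as c * M_r(x / c)
    so that large |r| does not overflow. Uses c = max(x) for r > 0 and
    c = min(x) for r < 0."""
    c = max(x) if r > 0 else min(x)
    n = len(x)
    # Each (xi / c) ** r lies in (0, 1]; very small terms may underflow to 0.0,
    # which is harmless because the term equal to 1 dominates the sum.
    s = sum((xi / c) ** r for xi in x) / n
    return c * s ** (1 / r)

x = [1.0, 2.0, 4.0]
for r in (1, 10, 100, 1000):
    print(r, power_mean_scaled(x, r), power_mean_scaled(x, -r))
print("max:", max(x), "min:", min(x))
```

As r grows, the first column approaches max(x) = 4 from below and the second approaches min(x) = 1 from above.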

In summary, the minimum, harmonic mean, geometric mean, arithmetic mean, and maximum are all special cases of $M_r(x)$ corresponding to $r = -\infty, -1, 0, 1$, and $\infty$ respectively. Of course other values of $r$ are possible; these five are just the most familiar. Another common example is the root-mean-square (RMS), corresponding to $r = 2$.
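
Putting the pieces together, here is a sketch of a single function covering every r in $[-\infty, \infty]$, used to print the familiar named means side by side. The function name and the `NAMED` table are illustrative assumptions; r = ±∞ is handled directly as max and min rather than as a numerical limit.

```python
import math

def power_mean(x: list[float], r: float) -> float:
    """M_r(x) for non-negative x and any r in [-inf, inf]."""
    if not x or any(xi < 0 for xi in x):
        raise ValueError("x must be a non-empty list of non-negative numbers")
    n = len(x)
    if r == math.inf:
        return float(max(x))
    if r == -math.inf:
        return float(min(x))
    has_zero = any(xi == 0 for xi in x)
    if r == 0:
        # Geometric mean; it is zero whenever some entry is zero.
        return 0.0 if has_zero else math.exp(sum(map(math.log, x)) / n)
    if r < 0 and has_zero:
        return 0.0
    return (sum(xi ** r for xi in x) / n) ** (1 / r)

# Named special cases, listed in increasing order of r.
NAMED = {
    "minimum": -math.inf,
    "harmonic": -1,
    "geometric": 0,
    "arithmetic": 1,
    "root-mean-square": 2,
    "maximum": math.inf,
}

x = [1, 2, 4]
for name, r in NAMED.items():
    print(f"{name:>16} (r = {r}): {power_mean(x, r):.4f}")
```

For x = (1, 2, 4) the RMS is $\sqrt{(1 + 4 + 16)/3} = \sqrt{7}$, and the printed values increase down the list.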

A famous theorem says that the geometric mean is never greater than the arithmetic mean. This is a very special case of the following theorem.

If $r \le s$ then $M_r(x) \le M_s(x)$.

In fact we can say a little more. If $r < s$ then $M_r(x) < M_s(x)$ unless $x_1 = x_2 = \cdots = x_n$, or $s \le 0$ and one of the $x_i$ is zero.
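
The theorem and its exceptions can be spot-checked numerically. This is a check on random samples, not a proof; the grid of r values, the random seed, and the sample range are arbitrary choices, and `power_mean` is a compact restatement of the finite-r definition with its fine print.

```python
import math
import random

def power_mean(x, r):
    """M_r(x) for finite r and non-negative x, with the zero-entry fine print."""
    n = len(x)
    if r == 0:
        return 0.0 if 0 in x else math.exp(sum(map(math.log, x)) / n)
    if r < 0 and 0 in x:
        return 0.0
    return (sum(xi ** r for xi in x) / n) ** (1 / r)

R_GRID = [-5, -2, -1, -0.5, 0, 0.5, 1, 2, 5]

def means_on_grid(x):
    return [power_mean(x, r) for r in R_GRID]

def nondecreasing(values, rel_tol=1e-12):
    """True if each value is <= the next, allowing for floating-point rounding."""
    return all(a <= b * (1 + rel_tol) for a, b in zip(values, values[1:]))

def strictly_increasing(values):
    return all(a < b for a, b in zip(values, values[1:]))

random.seed(0)
for _ in range(1000):
    # Random positive entries (almost surely not all equal): strict inequality.
    sample = [random.uniform(0.1, 10.0) for _ in range(5)]
    assert strictly_increasing(means_on_grid(sample))

# Equality case: all entries equal, so every mean equals that common value.
print(means_on_grid([3.0, 3.0, 3.0]))
# Zero-entry case: M_r = 0 for every r <= 0, then positive and increasing for r > 0.
print(means_on_grid([0.0, 3.0, 5.0]))
```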

We could generalize the means $M_r$ a bit more by introducing positive weights $p_i$ such that $p_1 + p_2 + \cdots + p_n = 1$. We could then define $M_r(x)$ as

$$M_r(x) = \left( \sum_{i=1}^{n} p_i x_i^r \right)^{1/r}$$

with the same fine print as in the previous definition. This new definition reduces to the earlier one when every $p_i = 1/n$. The statements above about the means $M_r(x)$ continue to hold under this more general definition.
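
A sketch of the weighted version for finite r, assuming positive weights that sum to 1 (checked with `math.isclose`). For r = 0 it uses the limit, which is the weighted geometric mean $\prod_i x_i^{p_i}$. The name `weighted_power_mean` and the example weights are hypothetical.

```python
import math

def weighted_power_mean(x: list[float], p: list[float], r: float) -> float:
    """Weighted M_r(x) for non-negative x, positive weights p summing to 1, finite r."""
    if len(x) != len(p):
        raise ValueError("x and p must have the same length")
    if any(pi <= 0 for pi in p) or not math.isclose(sum(p), 1.0):
        raise ValueError("weights must be positive and sum to 1")
    if r == 0:
        # Weighted geometric mean prod(xi ** pi), zero if any entry is zero.
        if 0 in x:
            return 0.0
        return math.exp(sum(pi * math.log(xi) for xi, pi in zip(x, p)))
    if r < 0 and 0 in x:
        return 0.0
    return sum(pi * xi ** r for xi, pi in zip(x, p)) ** (1 / r)

x = [1.0, 2.0, 4.0]
equal = [1 / 3] * 3          # reproduces the unweighted means
skewed = [0.5, 0.25, 0.25]   # puts more weight on the smallest entry
for r in (-1, 0, 1):
    print(r, weighted_power_mean(x, equal, r), weighted_power_mean(x, skewed, r))
```

With equal weights the results match the unweighted means (7/3 for r = 1). With the skewed weights the means are $1/0.6875 \approx 1.45$, $2^{3/4} \approx 1.68$, and 2 for r = -1, 0, 1, still in increasing order.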

For more on means and inequalities, see Inequalities by Hardy, Littlewood, and Pólya.

Update: Analogous results for means of functions, replacing sums with integrals. Also, physical examples of harmonic mean with springs and resistors.

Related post: Old math books