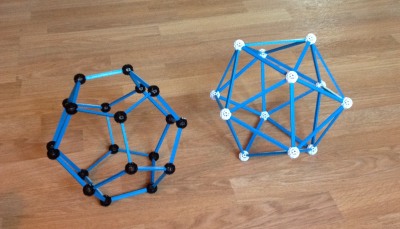

Suppose you have a regular pentagon inscribed in a unit circle, and connect one vertex to each of the other four vertices. Then the product of the lengths of these four line segments is 5.

More generally, suppose you have a regular n-gon inscribed in a unit circle. Connect one vertex to each of the others and multiply the lengths of these n – 1 line segments. Then the product is n.
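Here is a quick numerical sanity check of the claim, not a proof. It is a minimal sketch that places one vertex at 1 in the complex plane and the others at the remaining nth roots of unity; the function name `chord_product` is made up for this illustration.

```python
import cmath
import math

def chord_product(n):
    """Product of the distances from the vertex at 1 to the other n - 1
    vertices of a regular n-gon inscribed in the unit circle."""
    omega = cmath.exp(2j * math.pi / n)  # a primitive nth root of unity
    product = 1.0
    for k in range(1, n):
        # omega**k is the kth vertex; abs() gives the chord length from 1
        product *= abs(1 - omega**k)
    return product

for n in range(3, 9):
    print(n, chord_product(n))
```

Each printed product should agree with n up to floating-point rounding.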

I ran across this theorem recently thumbing through Mathematical Diamonds and had a flashback. This was a homework problem in a complex variables class I took in college. The fact that it was a complex variables class gives a big hint at the solution: Put one of the vertices at 1 and then the rest are nth roots of 1. Connect all the roots to 1 and use algebra to show that the product of the lengths is n. This will be much easier than a geometric proof.

Let ω = exp(2πi/n). Then the nth roots of 1 are the powers of ω. The product of the lengths of the segments equals

$$|1 - \omega|\,|1 - \omega^2|\,|1 - \omega^3| \cdots |1 - \omega^{n-1}|$$

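You can change the absolute value signs to parentheses because the terms come in conjugate pairs: $\omega^{n-k}$ is the complex conjugate of $\omega^k$, so the two factors in each pair are conjugates and their product is a positive real number. (When n is even, the unpaired middle term $1 - \omega^{n/2} = 2$ is already a positive real number.) That is,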

$$|1 - \omega^k|\,|1 - \omega^{n-k}| = (1 - \omega^k)(1 - \omega^{n-k}).$$
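As a small worked example of the pairing, take the square, n = 4, so that ω = i. The terms for k = 1 and k = 3 are conjugates of each other, and the term for k = 2 stands alone:

$$|1 - i|\,|1 - i^3| = \sqrt{2}\cdot\sqrt{2} = 2 = (1 - i)(1 + i), \qquad 1 - i^2 = 2.$$

The full product is 2 · 2 = 4, matching the chord lengths √2, 2, and √2 of a square inscribed in the unit circle.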

So the task is to prove

$$(1 - \omega)(1 - \omega^2)(1 - \omega^3) \cdots (1 - \omega^{n-1}) = n.$$

Since

$$z^n - 1 = (z - 1)(z^{n-1} + z^{n-2} + \cdots + 1)$$

and the roots of $z^n - 1$ are exactly 1, ω, ω², …, $\omega^{n-1}$, it follows that

$$z^{n-1} + z^{n-2} + \cdots + 1 = (z - \omega)(z - \omega^2) \cdots (z - \omega^{n-1}).$$

The result follows from evaluating both sides of this factorization at z = 1: the left side is a sum of n ones, and the right side is the product we want.
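The factorization used in the last step can also be spot-checked numerically. The sketch below compares the sum of powers of z with the product over the nontrivial roots, first at an arbitrarily chosen test point and then at z = 1; the function names `power_sum` and `root_product` are made up for this illustration.

```python
import cmath
import math

def power_sum(z, n):
    """z**(n-1) + z**(n-2) + ... + z + 1."""
    return sum(z**k for k in range(n))

def root_product(z, n):
    """(z - omega)(z - omega**2) ... (z - omega**(n-1))."""
    omega = cmath.exp(2j * math.pi / n)  # a primitive nth root of unity
    product = 1 + 0j
    for k in range(1, n):
        product *= z - omega**k
    return product

n = 5
z = 0.3 + 0.7j  # arbitrary test point, chosen only for illustration

# The two sides should agree at any z, up to rounding error.
print(abs(power_sum(z, n) - root_product(z, n)))

# At z = 1 the sum of powers is exactly n.
print(power_sum(1, n), root_product(1, n))
```

The first printed value should be close to zero, and the second line should show 5 next to a complex number that is approximately 5.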
