I was studying a statistics paper the other day in which the author said to solve

t log( 1 + n/t ) = k

for t as part of an algorithm. Assume 0 < k < n.

Is this well posed?

First of all, can this equation be solved for t? Second, if there is a solution, is it unique?

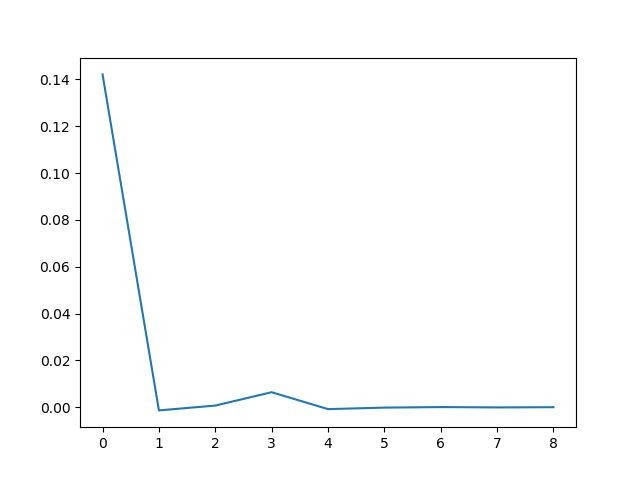

It’s not hard to show that as a function of t, the left side approaches 0 as t approaches 0, and it approaches n as t goes to infinity. So there is a solution for all k between 0 and n. The restriction on k is necessary since the left side cannot exceed n.

With a little more work one can show that the derivative is always positive, so the left side is a monotonically increasing function, and so the solution given each value of k is unique.

Analytic solution

Now if we fix n, we can think of the equation above as defining t as a function of k. Can we solve for t exactly? I suspected that there might be a solution in terms of the Lambert W function because if you divide by t and exponentiate, you get an equation that smells like the equation

z = w exp(w)

defining the function W(z). It turns out this is indeed the case.

If we ask Mathematica

Solve[t Log[1 + n/t] == k, t]

we get

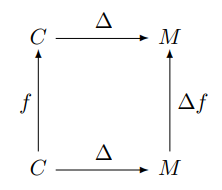

Great! There’s a closed-form solution, if you accept using the W function as being closed form.

Problems with the solution

I found the solution in Mathematica, but I tested it in Python to make sure both platforms define W the same way.

from numpy import log, exp

from scipy.special import lambertw

def f(t, n):

return t*log(1 + n/t)

def g(k, n):

r = k/n

return -k/(lambertw(-r*exp(-r)) + r)

n, k = 10, 8

t = g(k, n)

print(f(t, n))

This should print k, so it prints 8, right? No, it prints 10.

What’s up with that?

If we look back at the equation for t above, we see that the W function is being evaluated at x exp(x) where x = –k/n, so we should get –k/n back since W(x exp(x)) = x by definition. But that means our denominator is zero, and so the equation doesn’t make sense!

Things are getting worse. At first we had a wrong value, but at least it was finite!

The problem is not a difference between Mathematica and Python.

Resolution

The problem is we’ve glossed over a branch cut of the W function. To make a long story short, we were using the principal branch of the W function, but we should have used a different branch.

Let’s go back to where I asked Mathematica

Solve[t Log[1 + n/t] == k, t]

I ran the solution through TeXForm to get the TeX code that produced the image for the solution equation. I made a few aesthetic changes to the TeX, but it was essentially Mathematica’s output.

Without the TeXForm, Mathematica’s solution was in terms of ProductLog, not in terms of W; the TeXForm function turned ProductLog into W. If you look up ProductLog, it will tell you

ProductLog[z] gives the principal solution for w in z = wew.

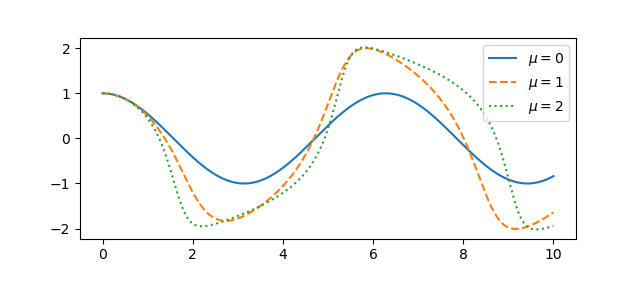

The principal solution. So we should be on the alert for difficulties with branches. There are two real solutions to z = wew for some values of z, and we have to choose the right one. For example, if z = -0.1, the w could be -0.1118 or -3.5772.

Mathematica gave me the wrong branch. But to be fair, it did try to warn me.

Solve::ifun: Inverse functions are being used by Solve, so some solutions may not be found; use Reduce for complete solution information.

The solution is to use the -1 branch. In Mathematica’s notation, the branch comes before the argument. In SciPy, the branch comes second. So to fix the code above, we need to change

lambertw(-r*exp(-r))

to

lambertw(-r*exp(-r), -1)

and then the code will be correct.

If x is negative, and we use the -1 branch of W, then

W-1(x exp(x)) ≠ x

and so we’re not dividing by zero in our solution.