When I shared an image from the previous post on Twitter, someone who goes by the handle Nonetheless made the astute observation that the image looked like the Maxwell-Boltzmann distribution. That made me wonder what 1/Γ(x) would look like turned into a probability distribution, and whether it would be approximately like the Maxwell-Boltzmann distribution.

(Here I’m looking at something related to what Nonetheless said, but different. He was looking at my plot of the error in approximating 1/Γ(x) by partial products, but I’m looking at 1/Γ(x) itself.)

Making probability distributions

You can make any non-negative function with a finite integral into a probability density function by dividing it by its integral. So we can define a probability density function

$$f(x) = \frac{c}{\Gamma(x)}$$

for x > 0, where

$$\frac{1}{c} = \int_0^\infty \frac{dx}{\Gamma(x)}$$

and so c = 0.356154. (The integral cannot be computed in closed form and so has to be evaluated numerically.)
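As a rough sketch of that numerical evaluation, the following uses SciPy, which is my choice here and not necessarily what was used for the post. The function `scipy.special.rgamma` computes 1/Γ(x) directly, and the name `f` for the resulting density is just a label for this example.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import rgamma  # rgamma(x) = 1/Gamma(x)

# Integrate 1/Gamma(x) over the positive reals.
integral, abs_err = quad(rgamma, 0, np.inf)
c = 1 / integral

def f(x):
    """Density proportional to 1/Gamma(x) on x > 0."""
    return c * rgamma(x)

print(f"integral = {integral:.6f}, c = {c:.6f}")
```

The printed value of c should agree with the 0.356154 quoted above to the digits shown.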

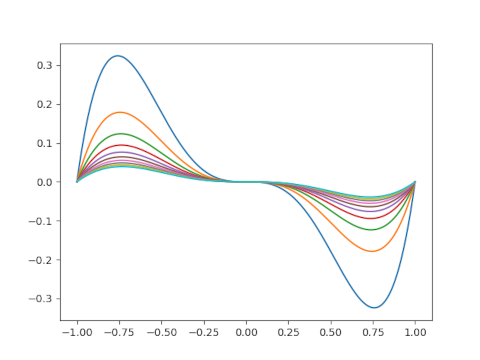

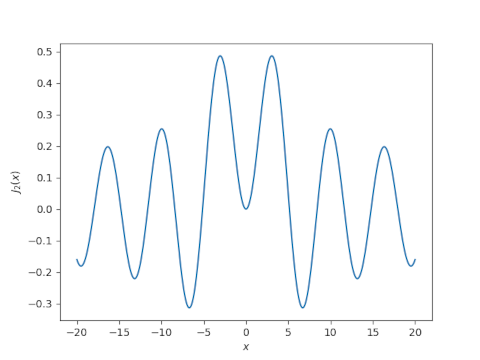

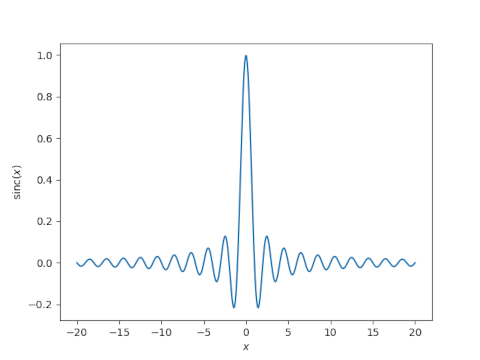

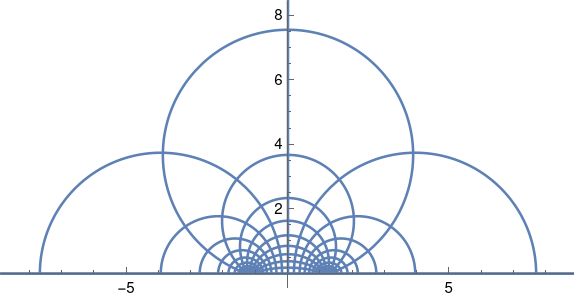

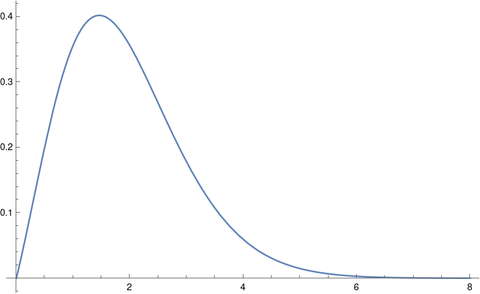

Here’s a plot of f(x).

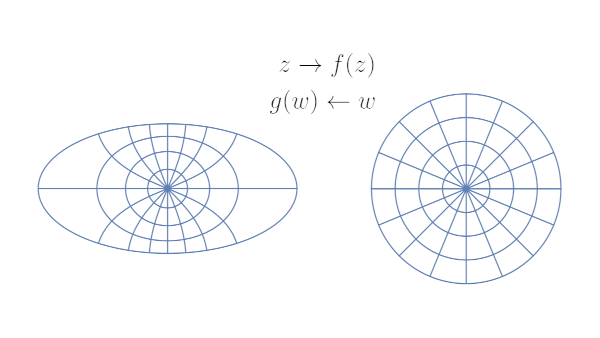

Note that we’re doing something kind of odd here. It’s common to see the gamma function in the definition of probability distributions, but the function is always evaluated at distribution parameters, not at the free variable x. We’ll give an example of this shortly.

Maxwell-Boltzmann distribution

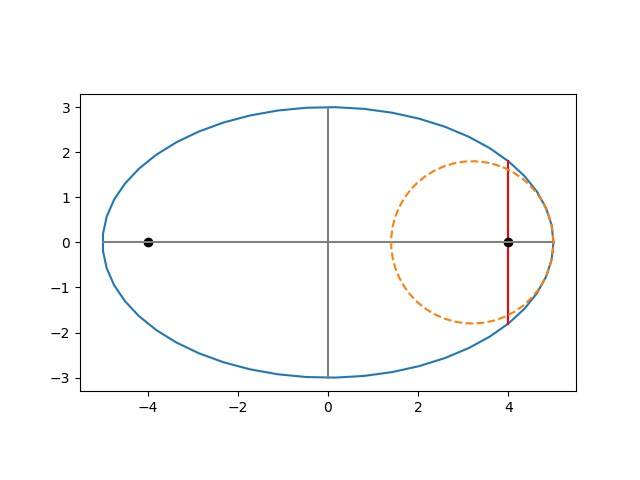

The Maxwell-Boltzmann distribution, sometimes called just the Maxwell distribution, is used in statistical mechanics to give a density function for particle speeds. In statistical terminology, the Maxwell-Boltzmann distribution is a chi distribution [1] with three degrees of freedom and a scale parameter σ that depends on physical parameters.

The density function for a chi distribution with k degrees of freedom and scale parameter σ is

$$p(x; k, \sigma) = \frac{2^{1 - k/2}}{\sigma^k \, \Gamma(k/2)} \, x^{k-1} \exp\left(-\frac{x^2}{2\sigma^2}\right)$$

for x ≥ 0. Think of this as x^(k-1) exp(-x² / 2σ²) multiplied by whatever you have to multiply it by to make it integrate to 1; most of the complexity in the definition is in the proportionality constant. And as mentioned above, the gamma function appears in the proportionality constant, evaluated at a parameter and not at x.

For the Maxwell-Boltzmann distribution, k = 3.
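To make the role of the proportionality constant concrete, here is a minimal sketch that writes the chi density out by hand and compares it with SciPy's `chi` and `maxwell` distributions. The function name `chi_pdf` and the test values of k and σ are arbitrary choices for illustration.

```python
import numpy as np
from scipy.special import gamma
from scipy.stats import chi, maxwell

def chi_pdf(x, k, sigma=1.0):
    """Density of a chi distribution with k degrees of freedom and scale sigma."""
    # Proportionality constant: the gamma function is evaluated at k/2, not at x.
    const = 2 ** (1 - k / 2) / (sigma ** k * gamma(k / 2))
    return const * x ** (k - 1) * np.exp(-x ** 2 / (2 * sigma ** 2))

x = np.linspace(0.1, 5, 50)
sigma = 1.3  # arbitrary scale chosen for the check

# Compare with SciPy's chi distribution, and with its Maxwell distribution for k = 3.
print(np.allclose(chi_pdf(x, 2.5, sigma), chi.pdf(x, 2.5, scale=sigma)))
print(np.allclose(chi_pdf(x, 3, sigma), maxwell.pdf(x, scale=sigma)))
```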

Lining up distributions

Now suppose we want to compare the distribution we’ve created out of 1/Γ(x) with the Maxwell-Boltzmann distribution. One way to do this would be to align the two distributions to have their peaks at the same place. The mode of a chi random variable with k degrees of freedom and scale σ is

$$\sigma \sqrt{k - 1}$$

and so for a given k we can solve for σ to put the mode where we’d like.

For positive arguments, the minimum of the gamma function, and hence the maximum of its reciprocal, occurs at x = 1.46163. As with the integral above, this has to be computed numerically.
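One way to carry out this computation, sketched here with SciPy as an assumption about tooling, is to find where the digamma function crosses zero, since Γ′(x) = Γ(x) ψ(x). The helper `sigma_for_mode` is a hypothetical name; it inverts the chi mode σ√(k - 1) to get the scale for a given k.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import digamma

# Gamma'(x) = Gamma(x) * digamma(x), so the minimum of Gamma on x > 0
# is where digamma changes sign, which happens between 1 and 2.
x_star = brentq(digamma, 1, 2)

def sigma_for_mode(k, mode):
    """Scale that puts the mode of a chi(k) density, sigma*sqrt(k - 1), at `mode`."""
    return mode / np.sqrt(k - 1)

print(f"argmin of gamma: {x_star:.5f}")
for k in (3, 2, 1.86):
    print(f"k = {k}: sigma = {sigma_for_mode(k, x_star):.4f}")
```

For k = 2 the scale is simply the mode itself, since √(k - 1) = 1.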

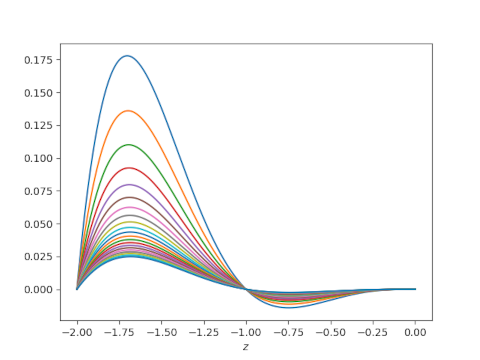

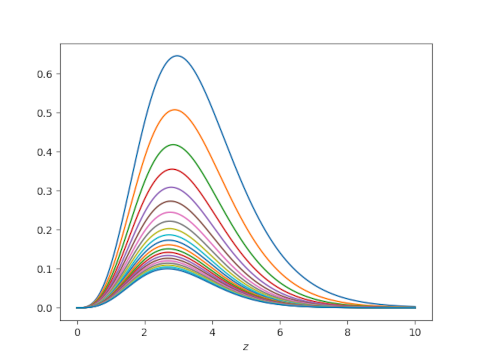

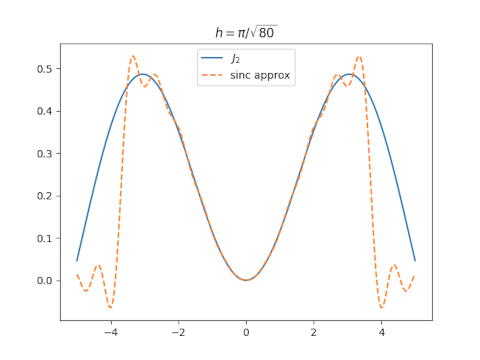

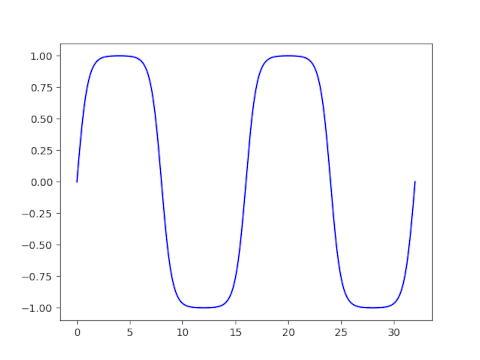

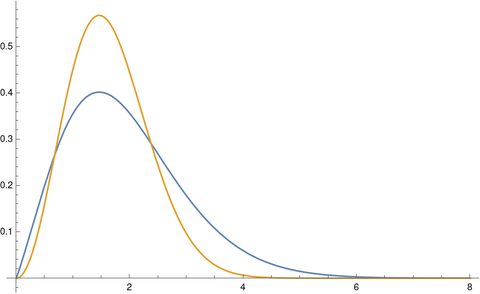

For the Maxwell-Boltzmann distribution, i.e. when k = 3, we don’t get a very good fit.

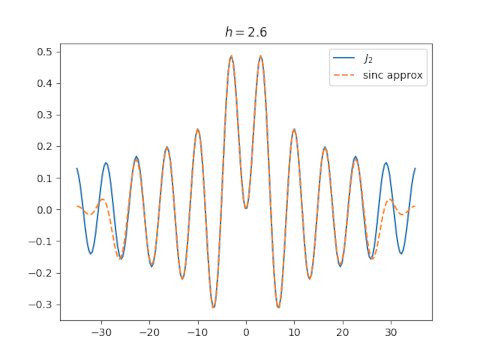

The tails on the chi distribution are too thin. If we try again with k = 2 we get a much better fit.

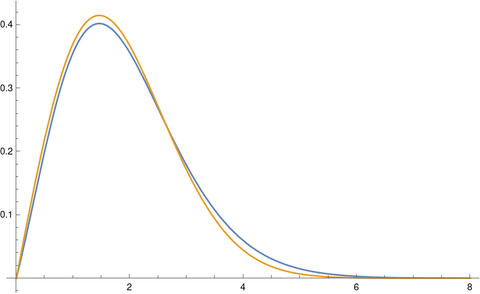

You get an even better fit with k = 1.86. The optimal value of k would depend on your criteria for optimality, but k = 1.86 looks like a good value just eyeballing it.
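To illustrate how the answer depends on the criterion, here is a sketch using one possible choice, the integrated squared error (ISE) between the two densities with modes aligned. The criterion, the search range for k, and the truncation of the integral at x = 30 are all assumptions made for this example, not something taken from the plots above.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import brentq, minimize_scalar
from scipy.special import digamma, rgamma
from scipy.stats import chi

c = 1 / quad(rgamma, 0, np.inf)[0]  # normalizing constant for 1/Gamma(x)
x_star = brentq(digamma, 1, 2)      # mode of the 1/Gamma density

def ise(k):
    """Integrated squared error between c/Gamma(x) and the mode-matched chi(k) density."""
    sigma = x_star / np.sqrt(k - 1)
    diff2 = lambda x: (c * rgamma(x) - chi.pdf(x, k, scale=sigma)) ** 2
    # Both densities are negligible beyond x = 30 for the k considered here.
    return quad(diff2, 0, 30)[0]

for k in (3, 2, 1.86):
    print(f"k = {k}: ISE = {ise(k):.5f}")

# One possible notion of "optimal k": minimize the ISE over an assumed search range.
best = minimize_scalar(ise, bounds=(1.5, 3.0), method="bounded")
print(f"k minimizing ISE on [1.5, 3]: {best.x:.3f}")
```

A different criterion, such as the maximum absolute difference between the densities or between the CDFs, could well pick a different k.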

[1] This is a chi distribution, not the far more common chi-square distribution. The first time you see this it looks like a typo. If X is a random variable with a chi distribution with k degrees of freedom, then X² has a chi-square distribution with k degrees of freedom.