A chromatic scale in Western music divides an octave into 12 parts. There are slightly different ways of partitioning the octave into 12 parts, and the various approaches have long and subtle histories. This post will look at the root of the differences.

An octave is a frequency ratio of 2 to 1. Suppose a string of a certain tension and length produces an A when plucked. If you make the string four times as tight (frequency grows with the square root of the tension), or keep the same tension and cut the string in half, the string will sound the A an octave higher. The new sound will vibrate the air twice as many times per second.

A fifth is a ratio of 3 to 2 in the same way that an octave is a ratio of 2 to 1. So if we start with an A 440 (a pitch that vibrates at 440 Hz, 440 vibrations per second) then the E a fifth above the A vibrates at 660 Hz.

We can go up by fifths and down by octaves to produce every note in the chromatic scale. For example, if we go up another fifth from the E 660 we get a B 990. Then if we go down an octave to B 495 we have the B one step above the A 440. This says that a “second,” such as the interval from A to B, is a ratio of 9 to 8. Next we could produce the F# by going up a fifth from B, etc. This progression of notes is called the circle of fifths.

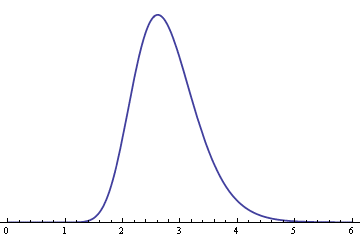

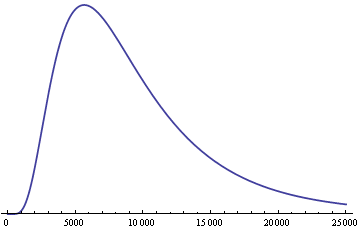
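The first few steps around the circle of fifths can be sketched in code. This is only an illustration of the up-a-fifth, down-an-octave procedure described above. The helper name `up_fifth_within_octave` and the list of note names are assumptions made for the example, and exact fractions are used so the ratios stay readable.

```python
from fractions import Fraction

def up_fifth_within_octave(ratio):
    """Go up a perfect fifth (multiply by 3/2), then drop by octaves
    until the ratio is back in the range [1, 2)."""
    ratio *= Fraction(3, 2)
    while ratio >= 2:
        ratio /= 2
    return ratio

# First six notes in circle-of-fifths order, starting from A.
names = ["A", "E", "B", "F#", "C#", "G#"]

pythagorean = {}
ratio = Fraction(1)
for name in names:
    pythagorean[name] = ratio
    ratio = up_fifth_within_octave(ratio)

# Print each note's ratio to A and its frequency when A is 440 Hz.
for name, r in pythagorean.items():
    print(f"{name:<3}{str(r):>8}{float(440 * r):9.2f} Hz")
```

The entry for B comes out as 9/8, or 495 Hz, matching the "second" worked out above.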

Next we take a different approach. Every time we go up by a half-step in the chromatic scale, we increase the pitch by a ratio $r$. When we do this 12 times we go up an octave, so $r^{12}$ must be 2. This says $r$ is the 12th root of 2. If we start with an A 440, the pitch $n$ half-steps higher must be $2^{n/12}$ times 440.
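As a quick sketch of this formula, the function below computes the pitch a given number of half-steps above a base pitch. The name `equal_tempered` and its parameters are chosen for illustration only.

```python
def equal_tempered(n, base=440.0):
    """Frequency in Hz of the pitch n half-steps above `base`,
    where each half-step multiplies the frequency by 2**(1/12)."""
    return base * 2 ** (n / 12)

# Seven half-steps above A 440 is E; twelve half-steps is the A an octave up.
print(equal_tempered(7))
print(equal_tempered(12))
```

Twelve half-steps doubles the frequency, as it must, while seven half-steps lands a little below the 660 Hz that a 3 to 2 ratio would give.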

Now we have two ways of going up a fifth. The first approach says a fifth is a ratio of 3 to 2. Since a fifth is seven half-steps, the second approach says that a fifth is a ratio of $2^{7/12}$ to 1. If these are equal, then we’ve proven that $2^{7/12}$ equals 3/2. Unfortunately, that’s not exactly true, though it is a good approximation because $2^{7/12} \approx 1.498$. The ratio of 3/2 is called a “perfect” fifth to distinguish it from the ratio 1.498. The difference between perfect fifths and ordinary fifths is small, but it compounds when you use perfect fifths to construct every pitch.
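To see how the small difference compounds, here is a rough sketch that stacks twelve perfect fifths and compares the result with seven octaves, which is where twelve equal-tempered fifths land. The `cents` helper is an assumption added for the example; it uses the common convention of 1200 cents per octave, so an equal-tempered half-step is 100 cents.

```python
import math
from fractions import Fraction

perfect_fifth = Fraction(3, 2)   # exact ratio of a perfect fifth
tempered_fifth = 2 ** (7 / 12)   # seven equal-tempered half-steps (float)

def cents(ratio):
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(ratio)

# Twelve perfect fifths overshoot seven octaves by this ratio.
gap = perfect_fifth ** 12 / Fraction(2) ** 7

print(tempered_fifth)                  # close to, but not equal to, 1.5
print(gap)                             # 531441/524288
print(cents(perfect_fifth) - 700)      # error of a single fifth, in cents
print(cents(gap))                      # accumulated error after twelve fifths
```

A single perfect fifth is only about 2 cents wider than an equal-tempered one, but after twelve of them the mismatch is about 23 cents, roughly a quarter of a half-step. This leftover ratio is commonly called the Pythagorean comma.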

The approach of constructing every note from perfect fifths and octaves is known as Pythagorean tuning. The approach using the 12th root of 2 is known as equal temperament. Since 1.498 is not the same as 1.5, the two approaches produce different tuning systems. There are various compromises that try to preserve aspects of both systems, and each set of compromises produces a different tuning system. In fact, the Pythagorean tuning system is a little more complicated than described above because it too involves some compromise.

Related post: Circle of fifths and number theory