Walter Bright made an interesting point in his talk at GOTO Aarhus this afternoon. He says that software developers like to think of their code as pipelines. Data comes in, goes through an assembly line-like series of processing steps, and comes out the end.

input -> step1 -> step 2 -> step3 -> output

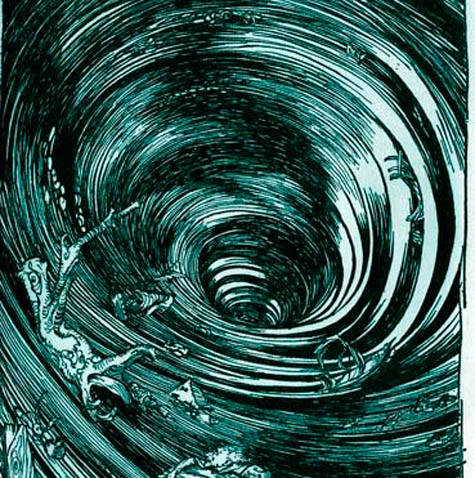

And yet code hardly ever looks like that. Most software looks more like a whirlpool than a pipeline. Data swirls around in loops before going down the drain.

These loops make it hard to identify parts that can be extracted into encapsulated components that can be snapped together or swapped out. You can draw a box around a piece of an assembly line and identify it as a component. But you can’t draw a box around a portion of a whirlpool and identify it as something than can be swapped out like a black box.

It is possible to write pipe-and-filter style apps, but the vast majority of programmers find loops more natural to write. And according to Walter Bright, it takes the combination of a fair number of programming language features to pull it off well, features which his D language has. I recommend watching his talk when the conference videos are posted. (Update: This article contains much of the material as the conference talk.)

I’ve been thinking about pipeline-style programming lately, and wondering why it’s so much harder than it looks. It’s easy to find examples of Unix shell scripts that solve some problem by snapping a handful of utilities together with pipes. And yet when I try to write my own software like that, especially outside the context of a shell, it’s not as easy as it looks. Brian Marick has an extended example in his book that takes a problem that appears to require loops and branching logic, but that can be turned into a pipeline. I haven’t grokked his example yet; I want to go back and look at it in light of today’s talk.

Hi John,

I’m a student with English as my second language, so please excuse my grammar and ignorance :-)

Lately I’ve had a (small) revaluation regarding pipe and filter architecture, regarding the way it forces strong coders with weak analytical skills, to compartmentalize their functionality. Making larger application subsystems into pipe and filter applications also brings a lot of flexibility to the table….

Also, the reason i began this comment: Pipers and filters doesn’t guarantee whirlpool-free experience in anyway. In my opinion it still needs proper designing.

Does anyone remember the time, when the style was new and even fashionable? In my imagination it must have been REALLY hot stuff.

Thank you for yet another interesting read.

I just a few moments ago got the first results from my pipeline and I don’t have a single for-loop in it.

I wrote it in Clojure (lisp dialect) – was that cheating? :-)

If there are any developers out there looking to broaden their horizons, I think a paradigm similar to this is dataflow programming. Dataflow programming makes some problems trivial and other quite devilish – as a student I worked with puredata and MAX/MSP to build audio applications. Being able to chain, interrupt, modify and branch off the flow of data and / or signals was fundamental to the system; on the other hand the implementation of even the most simple procedural algorithms represented a real challenge.

I have fond memories of working late into the night on a Markov chain process for generating aleatoric melody lines; you would feed it Bach and it would play back Bach; you would feed it Ravel and Bach and it would play back Bavel!

Have you looked at KNIME and Pipeline Pilot (non-free)? They are written for science types and follow the pipeline concept very literally. They even let you make calls to scripting languages (python, perl, R) when you need more complex behavior.

I think the reason this happens is because it all comes down to effects. When programming with effects, it tends to entangle the design into the whirlpool as you describe.

The one practical language that I have used which does not fall into this trap in my opinion is Haskell. Since it makes effects explicit, it really encourages a design that looks exactly like a pipeline. Everything including GUIs, Web Applications, 3D Graphics tends to end up as a pipeline if written in an idiomatic style.

Techniques such as iteratees/enumerators really help with this pipeline style of thinking. There has been a lot of activity in the design of these libraries within the Haskell ecosystem and coming up with a solid approach to dealing with pipelined deterministic I/O (effects).

Walter has also posted an article on Dr. Dobbs today regarding Component Programming. Interesting read.

I think that part of the problem is that few programming environments support message/data passing between independent units. At my last job we had some really nice middleware, and each step was implemented in a seperate binary. Step 3 taking too long? Start up another instance on a new machine, to spread the work.

Are you familiar with the Chain of Responsibility pattern? I’ve used it to do precisely this. Like you’ll probably guess, it’s not exactly intuitive to most programmers but you end up with very simple classes that chain together into something very powerful.

Once upon a time, I was spent a lot of time making my loops efficient. No wasted motion was my goal.

And then, I started running across programming practices that encapsulate the loops. Instead of a hand tailored loop, nowadays I’ll of ten use a canned one. When I code this way, most loops get pushed out into my computational leaves — they’re part of my reusable infrastructure, instead of being specialized application code.

And, yes, might “sound inefficient”. And, in a few cases it actually be inefficient. Much of the time, however, it’s not — CPUs and many development tools are optimized for dealing with simple loops. Also, efficiency is usually only matters at the bottlenecks. Shades of the old C language discussions about strlen()…

A related issue is that you can describe a relationship “as a whole” and leave it to your infrastructure to only use “the part that it currently needs”. This might sound like “lazy evaluation” but this should also sound like a database trigger (or a dependency) — but anyways, it’s a pattern that can get wide use.

There are different ways of looking at this kind of problem, but I think a lot of this issue is that loops are tedious to write and we like to minimize our tedium. (And note that map, foldl, each, and so on are still loops — maybe not quite as tedious to write as a for loop, but they still do not write themselves.)

Anyways, I suppose my point is: if I am going to factor a “loop” I should consider factoring it into “multiple loops”.

And, recursion (and loops which do not deal with multiple data elements) can be even worse — I should think about factoring some of these cases into multiple recursions, factoring them into loops, and factoring them into things which are neither (addition, for example, can be expressed recursively — can I take advantage of that, can I express a part of my recursion as addition? How about multiplication? …)

That said, on a related note: sometimes to simplify code you need to push back into the design. This can be uncomfortable (“I’m a programmer, I solve problems, I do not make them up”), but when I can see how to do it, I can sometimes turn a massive, difficult problem into an easy one.

In Python, you can use generators to build your program as a pipeline of well defined, specialized functions that work on and transform a stream in funny ways. I discovered this technique with enlightening examples in this set of slides by David Beazley, which I highly recommend:

http://www.dabeaz.com/generators/

I programmed in C#. I feel like Linq and “yield return” work great together.

You are free to mix and match paradigms together.

I could write a DFS traversal of a graph using recursion, but then filter the elements with .Select, and even join it with the other data source, and then go back and then aggregate, grouping, do whatever, really cool.

Today’s buzzword is “monad”.

I often write pipelines at work when I am writing sequences of mapreduces, but rarely when I am not writing mapreduces. Why? Well, with the mapreduces, you really need a clean, serialized interface between the phases. There is no choice. But in single machine/do big chunk of computation style programs, clean interfaces between phases aren’t strictly needed, and so I often omit them. The mapreduce sequence is pipelined and modular not because mapreduce is rich, but rather because it is rather rigid and demanding of a certain style.

I think it’s because there is some inherent “interface complexity” on the arrows. Every time one box flows into another, the outgoing box has to make some meaningful contract to the incoming box. Having a contract more meaningful than “there is a blob of stuff in memory in some convenient format” imposes a cost.

Of course, structuring a program in a pipeline style benefits (modularity, isolation), but often those aren’t the most important things to optimize compared to the sum of the complexity of the entire program, or time to deliver it.

SQL scripting provides an interesting comparison to the pipeline and whirlpool paradigms. A complex SQL query may make use of multiple temporary queries (or tables) and CTEs that build on one another to produce the final result. This is akin to the pipeline paradigm–there are no loops–but is variant where the pipeline can branch and rejoin itself.

The loop paradigm is based upon the batch paradigm whereas the pipeline is based upon an atomic-transaction perspective. The single most important thing that our whole industry needs to do now, at every level, is unroll ALL the batches (Google “Clean ALL the things!” if that reference flew over your head).

@FrankWilhoit except when batches should be atomic…

Consider your typical first person pov 3d graphics game: A single frame of video is a complex but ultimately static thing — they are strung together to give an appearance of change. Of critical importance is the latency between user input and the reflection of that input in subsequent frames. But you only want unrolled issues that convolve across multiple frames — within a frame you want the tightest loops possible so you can get them over with and get on with other things.

(Now, for added fun, try designing a static type system aimed at providing resource guarantees for people innovating new systems like this, while allowing for new graphics hardware to be used after the code is written…)

Something to keep in mind: Just b/c you don’t know of it or don’t know how to do it does not mean it is not possible, or that no one is doing it, or that no one knows how to do it.

A couple of thoughts:

* passing file descriptors: in C this is a safe way to achieve “pipes” within C programs

* reference counts vs. loops: most languages rely heavily on loops, but not all do; most programmers cannot easily think in terms other that loops – it’s too difficult for them – and programmers are usually lazy

Most inputs are whirlpool-like (dirty, unclear, self-referential).

Your post made me think of Stuart Sierra’s syntactic pipelines. Check out his blog post here

http://stuartsierra.com/2012/05/16/syntactic-pipelines. His Clojure West talk is linked from the post.