This is a reprint of Nick Higham’s post of the same title from the Princeton University Press blog, used with permission.

Color is a fascinating subject. Important early contributions to our understanding of it came from physicists and mathematicians such as Newton, Young, Grassmann, Maxwell, and Helmholtz. Today, the science of color measurement and description is well established and we rely on it in our daily lives, from when we view images on a computer screen to when we order paint, wallpaper, or a car, of a specified color.

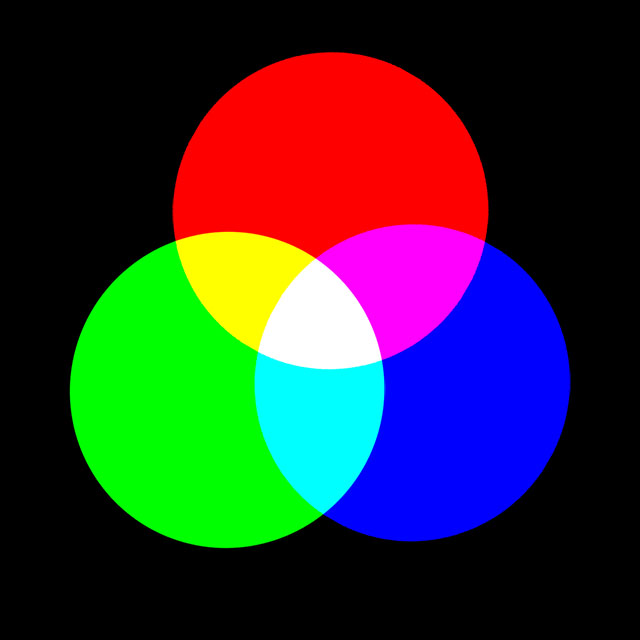

For practical purposes color space, as perceived by humans, is three-dimensional, because our retinas have three different types of cones, which have peak sensitivities at wavelengths corresponding roughly to red, green, and blue. It’s therefore possible to use linear algebra in three dimensions to analyze various aspects of color.

Metamerism

A good example of the use of linear algebra is to understand metamerism, which is the phenomenon whereby two objects can appear to have the same color but are actually giving off light having different spectral decompositions. This is something we are usually unaware of, but it is welcome in that color output systems (such as televisions and computer monitors) rely on it.

Mathematically, the response of the cones on the retina to light can be modeled as a matrix-vector product Af, where A is a 3-by-n matrix and f is an n-vector that contains samples of the spectral distribution of the light hitting the retina. The parameter n is a discretization parameter that is typically about 80 in practice. Metamerism corresponds to the fact that Af1 = Af2 is possible for different vectors f1 and f2. This equation is equivalent to saying that Ag = 0 for a nonzero vector g = f1 – f2, or, in other words, that a matrix with fewer rows than columns has a nontrivial null space.
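The null-space argument can be checked numerically. The following is a minimal sketch in Python with NumPy, not a colorimetric model: the matrix `A` is filled with random numbers as a stand-in for real cone sensitivity data, and `f1` is an arbitrary positive vector. Both are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 80                       # discretization parameter, as in the text

# Hypothetical stand-in for the 3-by-n cone response matrix (random, not measured data).
A = rng.random((3, n))

# An arbitrary positive "spectrum" with entries in [1, 2).
f1 = rng.random(n) + 1.0

# A has rank at most 3, so the rows of Vt beyond the third span its null space.
_, _, Vt = np.linalg.svd(A)
g = Vt[-1]                   # unit vector with A @ g approximately zero

# Perturb f1 along the null space; entries stay positive because |g_i| <= 1.
f2 = f1 + 0.5 * g

print(np.allclose(A @ f1, A @ f2))   # same cone response
print(np.linalg.norm(f1 - f2))       # but the spectra differ
```

Any vector in the (n − 3)-dimensional null space would serve as `g`; the last right singular vector is simply a convenient choice.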

Metamerism is not always welcome. If you have ever printed your photographs on an inkjet printer you may have observed that a print that looked fine when viewed indoors under tungsten lighting can have a color cast when viewed in daylight.

LAB Space: Separating Color from Luminosity

In digital imaging the term channel refers to the grayscale image representing the values of the pixels in one of the coordinates, most often R, G, or B (for red, green, and blue) in an RGB image. It is sometimes said that an image has ten channels. The number ten is arrived at by combining coordinates from the representation of an image in three different color spaces. RGB supplies three channels, a space called LAB (pronounced “ell-A-B”) provides another three channels, and the last four channels are from CMYK (cyan, magenta, yellow, black), the color space in which all printing is done.

LAB is a rather esoteric color space that separates luminosity (or lightness, the L coordinate) from color (the A and B coordinates). In recent years photographers have realized that LAB can be very useful for image manipulations, allowing certain things to be done much more easily than in RGB. This usage is an example of a technique used all the time by mathematicians: if we can’t solve a problem in a given form then we transform it into another representation of the problem that we can solve.

As an example of the power of LAB space, consider this image of aeroplanes at Schiphol airport.

Original image.

Suppose that KLM are considering changing their livery from blue to pink. How can the image be edited to illustrate how the new livery would look? “Painting in” the new color over the old using the brush tool in image editing software would be a painstaking task (note the many windows to paint around and the darker blue in the shadow area under the tail). The next image was produced in just a few seconds.

Image converted to LAB space and A channel flipped.

How was it done? The image was converted from RGB to LAB space (which is a nonlinear transformation) and then the coordinates of the A channel were replaced by their negatives. Why did this work? The A channel represents color on a green–magenta axis (and the B channel on a blue–yellow axis). Apart from the blue fuselage, most pixels have a small A component, so reversing the sign of this component doesn’t make much difference to them. But for the blue, which has a negative A component, this flipping of the A channel adds just enough magenta to make the planes pink.
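The A-channel flip can be sketched in a few lines of Python with NumPy. The post does not say which software or conversion was used, so this sketch assumes sRGB input with a D65 white point and the standard CIELAB formulas; the function names and the two sample pixels (a cyan-leaning blue and a mid gray) are hypothetical and chosen only for illustration.

```python
import numpy as np

# Assumed constants: linear sRGB -> XYZ matrix and D65 reference white.
M = np.array([[0.4124564, 0.3575761, 0.1804375],
              [0.2126729, 0.7151522, 0.0721750],
              [0.0193339, 0.1191920, 0.9503041]])
WHITE = np.array([0.95047, 1.0, 1.08883])
DELTA = 6 / 29

def rgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] (last axis = R, G, B) to LAB."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB gamma curve to get linear light.
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ M.T / WHITE
    # Nonlinear compression used by CIELAB.
    f = np.where(xyz > DELTA**3, np.cbrt(xyz), xyz / (3 * DELTA**2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def lab_to_rgb(lab):
    """Convert LAB back to sRGB in [0, 1], clipping out-of-gamut values."""
    lab = np.asarray(lab, dtype=float)
    fy = (lab[..., 0] + 16) / 116
    fx = fy + lab[..., 1] / 500
    fz = fy - lab[..., 2] / 200
    f = np.stack([fx, fy, fz], axis=-1)
    xyz = np.where(f > DELTA, f**3, 3 * DELTA**2 * (f - 4 / 29)) * WHITE
    lin = np.clip(xyz @ np.linalg.inv(M).T, 0, 1)
    # Reapply the sRGB gamma curve.
    return np.where(lin <= 0.0031308, 12.92 * lin, 1.055 * lin ** (1 / 2.4) - 0.055)

def flip_a_channel(rgb):
    """Negate the A (green-magenta) coordinate, leaving L and B untouched."""
    lab = rgb_to_lab(rgb)
    lab[..., 1] *= -1
    return lab_to_rgb(lab)

blue = np.array([0.0, 0.63, 0.87])   # hypothetical livery-like blue
gray = np.array([0.5, 0.5, 0.5])     # hypothetical near-neutral pixel

print(rgb_to_lab(blue))       # the A component is negative for this blue
print(flip_a_channel(blue))   # red goes up and green goes down
print(flip_a_channel(gray))   # essentially unchanged, since A is close to zero
```

The functions operate on the last axis, so the same call works on a whole image stored as an array of shape (height, width, 3).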

You may recall from earlier this year the infamous photo of a dress that generated a huge amount of interest on the web because some viewers perceived the dress as being blue and black while others saw it as white and gold. A recent paper, “What Can We Learn from a Dress with Ambiguous Colors?”, analyzes both the photo and the original dress using LAB coordinates. One reason for using LAB in this context is its device independence, which contrasts with RGB, for which the coordinates have no universally agreed meaning.

Nicholas J. Higham is the Richardson Professor of Applied Mathematics at The University of Manchester, and editor of The Princeton Companion to Applied Mathematics. His article “Color Spaces and Digital Imaging” in The Princeton Companion to Applied Mathematics gives an introduction to the mathematics of color and the representation and manipulation of digital images. In particular, it emphasizes the role of linear algebra in modeling color and gives more detail on LAB space.


About a decade ago I was tasked with developing the color processing algorithms for an ultra-high-speed digital video camera (up to 100K fps).

I attended the Color Science summer course series at the Munsell Color Science Laboratory (MCSL) at the Rochester Institute of Technology (RIT). We covered human color perception, technologies for color detection and rendition, and the surprising number of ways to map between them (the nonlinearities in human color perception are often neglected).

This course series is highly recommended. It is an excellent combination of theoretical study with focused lab application.

The first lab was to characterize color perception within our class, so we took a battery of color perception tests. The variability was very surprising, astounding even, to those of us who had naively considered the human eye to be a good instrument. It isn’t!

Viewed across the entire population, the human eye is really only adequate as a color sensor. For related colors, the space between folks saying “These two colors match” and those saying “These two colors don’t match” is often a gulf within the overall perception space. But, as with all human perception, it is subjective, so an important goal of any technological image system is to avoid situations where “color confusion” would become an issue.

My specific tasks were to:

1. Perform a color calibration of the sensor under a variety of imaging conditions (straightforward).
2. Maintain video color consistency as the photons per pixel dropped as frame rate increased and exposure time decreased (the fun part).

Fortunately, my color perception tested at the top of the class (what the industry calls a “golden eye”), so I could trust my own eyes when judging the results of the color processing algorithms.

Until the late 1980s it was not uncommon to find papers heralding new color processing algorithms that failed the reproducibility test: the experimenters had color perception defects that were not accounted for. There are now very sensitive tests that, when applied to even relatively small sample populations, can distinguish the relative effectiveness of color processing algorithms.