Simple groups are to groups as prime numbers are to numbers. A simple group has no non-trivial proper normal subgroups, just as a prime number has no non-trivial proper factors.

Classification

Finite simple groups have been classified into five broad categories:

- Cyclic groups of prime order

- Alternating groups

- Classical groups

- Exceptional groups of Lie type

- Sporadic groups.

Three of these categories are easy to describe.

The cyclic groups of prime order are simply the integers mod p where p is prime. These are the only Abelian finite simple groups.

The alternating groups consist of the even permutations of a finite set. These groups are simple if the set being permuted has at least 5 elements.
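To make the size of the alternating groups concrete, here is a minimal sketch that counts even permutations by brute force and compares the count with n!/2. The function names are my own, and parity is computed by counting inversions, which is one of several equivalent ways to do it.

```python
from itertools import permutations
from math import factorial

def is_even(perm):
    """Return True if perm (a tuple of 0..n-1) has an even number of inversions."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return inversions % 2 == 0

def alternating_group_order(n):
    """Count the even permutations of n points by brute force."""
    return sum(1 for p in permutations(range(n)) if is_even(p))

# Exactly half of all permutations are even once n >= 2.
for n in range(2, 8):
    assert alternating_group_order(n) == factorial(n) // 2

print(alternating_group_order(5))  # 60
```

The count for n = 5 is the order of the smallest simple alternating group.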

The sporadic groups are a list of 26 groups that don’t fit anywhere else. The other categories are infinite families of groups, but the sporadic groups are just individual groups.

The classical groups and exceptional Lie groups are harder to describe. I’d like to write about them in some detail down the road. For now, I’ll be deliberately vague.

This post is a broad overview, and may be the first in a series of posts. It looks only at the sizes (orders) of the groups.

Update: Here’s a follow-on post that looks at the groups denoted A_n(q).

Smallest group in each family

You can find a list of the families of finite simple groups on Wikipedia, along with their orders (the number of elements in each group). We can use this to determine the smallest group in each family, just to get an idea of how these families spread out.

The classical groups and exceptional Lie groups that I glossed over depend on a parameter n and/or a parameter q where q is a prime power. Even for very small values of n and q, the smallest ones for which the groups are simple, some of these groups are BIG.

|------------------+-----------------------------------------------------------------------------|
| Family           | Order of smallest simple group                                              |
|------------------+-----------------------------------------------------------------------------|
| Cyclic(p)        |                                                                           2 |
| Alternating(n)   |                                                                          60 |
| A_n(q)           |                                                                          60 |
| 2A_n(q^2)        |                                                                        6048 |
| Sporadic         |                                                                        7920 |
| B_n(q)           |                                                                       25920 |
| Suzuki(2^(2n+1)) |                                                                       29120 |
| C_n(q)           |                                                                     1451520 |
| G_2(q)           |                                                                     4245696 |
| 2F_4(q)'         |                                                                    17971200 |
| D_n(q)           |                                                                   174182400 |
| 2D_n(q^2)        |                                                                   197406720 |
| 3D_4(q^3)        |                                                                   211341312 |
| 2G_2(q)          |                                                                 10073444472 |
| F_4(q)           |                                                            3311126603366400 |
| 2E_6(q^2)        |                                                     76532479683774853939200 |
| E_6(q)           |                                                    214841575522005575270400 |
| 2F_4(q)          |                                                    264905352699586176614400 |
| E_7(q)           |                                    7997476042075799759100487262680802918400 |
| E_8(q)           | 337804753143634806261388190614085595079991692242467651576160959909068800000 |
|------------------+-----------------------------------------------------------------------------|

Never mind the cryptic family names for now; I may get into these in future posts. My point here is that for some of these families, even the smallest member is quite large.
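The orders of the groups of Lie type come from closed-form expressions in n and q, so they are easy to compute with exact integer arithmetic. Here is a minimal sketch for a few of the families, assuming the standard order formulas; the function names are mine. Note that the formulas say nothing about simplicity: a few of the smallest parameter values, such as A_1(2), A_1(3), and G_2(2), give groups that are not simple.

```python
from math import gcd, prod

def order_A(n, q):
    """Order of A_n(q), i.e. PSL(n+1, q)."""
    full = q ** (n * (n + 1) // 2) * prod(q ** (i + 1) - 1 for i in range(1, n + 1))
    return full // gcd(n + 1, q - 1)

def order_B(n, q):
    """Order of B_n(q); C_n(q) has the same order formula."""
    full = q ** (n * n) * prod(q ** (2 * i) - 1 for i in range(1, n + 1))
    return full // gcd(2, q - 1)

def order_G2(q):
    """Order of G_2(q)."""
    return q ** 6 * (q ** 6 - 1) * (q ** 2 - 1)

def order_E8(q):
    """Order of E_8(q)."""
    degrees = (2, 8, 12, 14, 18, 20, 24, 30)
    return q ** 120 * prod(q ** d - 1 for d in degrees)

print(order_A(1, 4), order_A(1, 5))  # 60 60
print(order_B(2, 3))                 # 25920
print(order_G2(3))                   # 4245696
print(len(str(order_E8(2))))         # 75 (number of digits)
```

Even with q = 2, the E_8 formula gives a 75-digit number.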

Interestingly, the smallest sporadic group has a modest order of 7920. But the largest sporadic group, “The Monster,” has order nearly 10^54. You could think of each sporadic group as being a lonely family of one, so I’ll list their orders here. There are groupings within the sporadic groups, but I’m not clear how much these are grouped together for historical reasons (i.e. who discovered them) or mathematical reasons. I expect there’s a blurry line between the historical and mathematical since the groups discovered by an individual were amenable to that person’s techniques.

|--------+--------------------------------------------------------|
| Group  | Order                                                  |
|--------+--------------------------------------------------------|
| M_11   |                                                   7920 |
| M_12   |                                                  95040 |
| J_1    |                                                 175560 |
| M_22   |                                                 443520 |
| J_2    |                                                 604800 |
| M_23   |                                               10200960 |
| HS     |                                               44352000 |
| J_3    |                                               50232960 |
| M_24   |                                              244823040 |
| McL    |                                              898128000 |
| He     |                                             4030387200 |
| Ru     |                                           145926144000 |
| Suz    |                                           448345497600 |
| O'N    |                                           460815505920 |
| Co_3   |                                           495766656000 |
| Co_2   |                                         42305421312000 |
| Fi_22  |                                         64561751654400 |
| HN     |                                        273030912000000 |
| Ly     |                                      51765179004000000 |
| Th     |                                      90745943887872000 |
| Fi_23  |                                    4089470473293004800 |
| Co_1   |                                    4157776806543360000 |
| J_4    |                                   86775571046077562880 |
| Fi_24' |                              1255205709190661721292800 |
| B      |                     4154781481226426191177580544000000 |
| M      | 808017424794512875886459904961710757005754368000000000 |
|--------+--------------------------------------------------------|

Groups of order less than a million

In 1972, Marshall Hall published a list of the (non-Abelian) finite simple groups of order less than one million. Hall said that there were 56 such groups known, and now that the classification theorem has been completed we know he wasn’t missing any groups.

There are 78,498 primes less than one million, so there are that many cyclic (Abelian) finite simple groups of order less than one million. In the range of orders one to a million, Abelian simple groups outnumber non-Abelian simple groups by over 1400 to 1. Of the 56 non-Abelian groups, 46 are of the form A_n(q). Most of the families in the table above don’t make an appearance since their smallest representatives have order much larger than one million.
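The prime count is easy to check. Here is a minimal sketch using a sieve of Eratosthenes; the function name is mine, and the 56 is Hall's count quoted above, not something the code computes.

```python
def count_primes_below(limit):
    """Count the primes p with p < limit using a sieve of Eratosthenes."""
    sieve = bytearray([1]) * limit
    sieve[0:2] = b"\x00\x00"  # 0 and 1 are not prime
    for p in range(2, int(limit ** 0.5) + 1):
        if sieve[p]:
            # Zero out p*p, p*p + p, ... in one slice assignment.
            sieve[p * p :: p] = bytearray(len(range(p * p, limit, p)))
    return sum(sieve)

abelian = count_primes_below(10 ** 6)
nonabelian = 56  # Hall's count of non-Abelian simple groups below one million

print(abelian)                # 78498
print(abelian // nonabelian)  # 1401
```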

There can be multiple non-isomorphic groups with the same order, especially for small orders. This is another detail I may get into in future posts.

Growth of group orders

Now let’s look at plots to see how the sizes of the groups grow. Because these numbers quickly get very large, all plots are on a log scale.

In case you have difficulty seeing the color differences, the legends are in the same vertical order as the plots.

Some of the captions list two or three groups. That is because the curves corresponding to the separate groups are the same at the resolution of the image.

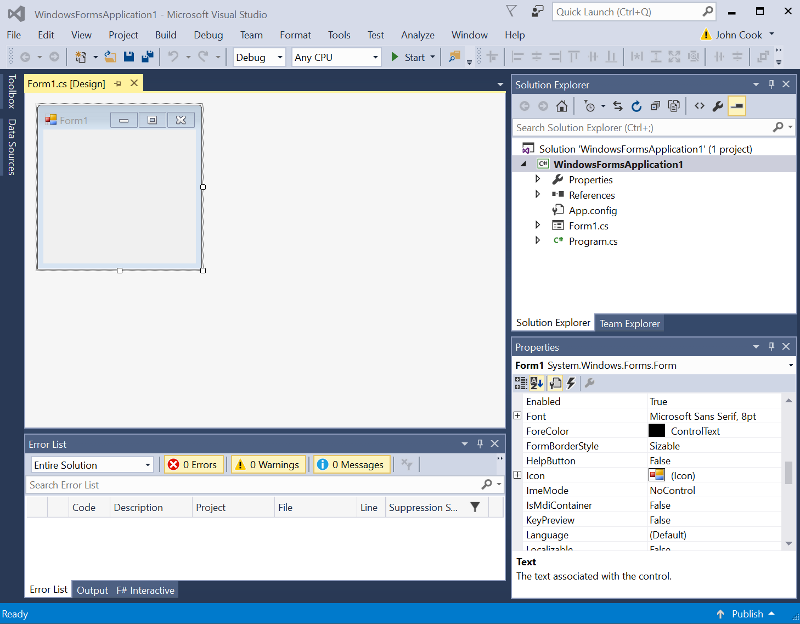

Here are groups that only depend on a parameter n.

Here are groups that only depend on a parameter q. The q’s vary over prime powers: 2, 3, 4, 5, 7, 8, 9, 11, etc. Note that the horizontal axis is not q itself but the index of q, i.e. i on the horizontal scale corresponds to the ith prime power.
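For reference, here is a minimal sketch of how the sequence of prime powers on the horizontal axis could be generated. The function name is hypothetical, and this is not necessarily how the plots were produced.

```python
def prime_powers(limit):
    """Return all prime powers p**k (k >= 1) that are <= limit, in increasing order."""
    sieve = bytearray([1]) * (limit + 1)
    sieve[0:2] = b"\x00\x00"  # 0 and 1 are not prime
    result = []
    for p in range(2, limit + 1):
        if sieve[p]:
            # p is prime: cross out its multiples, then collect its powers.
            for m in range(p * p, limit + 1, p):
                sieve[m] = 0
            power = p
            while power <= limit:
                result.append(power)
                power *= p
    return sorted(result)

qs = prime_powers(11)
print(qs)         # [2, 3, 4, 5, 7, 8, 9, 11]
print(qs[3 - 1])  # the 3rd prime power: 4
```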

For the groups that depend on n and q, we vary these separately. First we hold n fixed at 5 and let q vary.

Finally, we now fix q at 5 and let n vary.

Looking up group by size

Imagine writing a program that takes the size n of a finite simple group and tells you what groups it could be. What would such a program look like?

Since the vast majority of finite simple groups below a given size are cyclic, we’d check whether n is prime. If it is, then the group is the integers mod n. What about the non-Abelian groups? These are so thinly spread out that the simplest thing to do would be to make a table of the possible orders less than the maximum program input size N. If we set N = 10,000,000,000, then David Madore has already done this. There are only 491 possible orders for non-Abelian simple groups of order less than 10 billion.
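Here is a minimal sketch of such a program. The table below is a small hand-picked sample for illustration, not the full list of 491 orders, and the names `KNOWN_NONABELIAN` and `simple_groups_of_order` are my own. Each entry lists one label per isomorphism class, so order 20160 has two entries.

```python
# Illustrative sample only: a few orders of non-Abelian simple groups.
KNOWN_NONABELIAN = {
    60: ["Alternating(5)"],
    168: ["A_1(7)"],
    360: ["Alternating(6)"],
    7920: ["M_11"],
    20160: ["Alternating(8)", "A_2(4)"],  # two non-isomorphic groups
    95040: ["M_12"],
}

def is_prime(n):
    """Trial division; adequate for n up to about 10 billion."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True

def simple_groups_of_order(n):
    """Return labels for the simple groups of order n found by this sketch."""
    if is_prime(n):
        return [f"Z_{n} (integers mod {n})"]
    return KNOWN_NONABELIAN.get(n, [])

print(simple_groups_of_order(7))      # ['Z_7 (integers mod 7)']
print(simple_groups_of_order(20160))  # ['Alternating(8)', 'A_2(4)']
print(simple_groups_of_order(12))     # []
```

An empty list means "not in this sample table," which is only a definitive "no simple group of this order" if the table is complete up to N.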

We said above that Abelian groups outnumbered non-Abelian groups by over 1400 to 1 for simple groups of order less than one million. What about for simple groups of order less than 10 billion? The number of primes less than 10 billion is 455,052,511 and so Abelian simple groups outnumber non-Abelian simple groups by a little less than a million to one.
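The arithmetic behind that ratio, using the two counts quoted above (the variable names are mine):

```python
primes_below_10_billion = 455_052_511  # prime count quoted in the text
nonabelian_orders = 491                # non-Abelian orders below 10 billion, per the text

ratio = primes_below_10_billion / nonabelian_orders
print(round(ratio))  # 926787
```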

Conclusion

This post began by saying simple groups are analogous to prime numbers. The Abelian finite simple groups are exactly integers modulo prime numbers. The orders of the non-Abelian finite simple groups are spread out much more sparsely than prime numbers.

Also, the way you combine simple groups to make general groups is more complicated than the way you multiply prime numbers to get all integers. There was speculation that group theory would die once finite simple groups were classified, but that has not been the case. Unfortunately (or fortunately if you’re a professional group theorist) the classification theorem doesn’t come close to telling us everything we’d want to know about groups.