Windows has never made it easy to read long environment variables. If I display the path on one machine I get something like this, both from cmd and from PowerShell.

C:\bin;C:\bin\Python25;C:\bin\TeX\miktex\bin;C:\bin\TeX\MiKTeX\miktex\bin;C:\bin\Perl\bin;C:\Program Files\Compaq\Compaq Management Agents\Dmi\Win32\Bin; ...

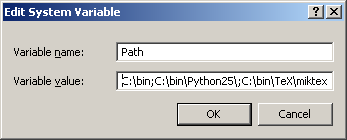

The System Properties window is worse since you can only see a tiny slice of your path at a time.

Here’s a PowerShell one-liner to produce a readable path listing:

$env:path -replace ";", "`n"

This produces

    C:\bin
    C:\bin\Python25
    C:\bin\TeX\miktex\bin
    C:\bin\TeX\MiKTeX\miktex\bin
    C:\bin\Perl\bin
    C:\Program Files\Compaq\Compaq Management Agents\Dmi\Win32\Bin
    ...

(If you’re not familiar with PowerShell, note the backquote before the n, which denotes the newline character that replaces each semicolon. This is one of the most unconventional features of PowerShell, since backslash is the escape character in most languages. Because Windows uses backslashes as path separators, PowerShell could not also use backslash as an escape character. Think of the backquote as a little backslash. Once you get over the initial shock, you get used to the backquote quickly.)
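To make the backquote rule concrete, here is a small sketch. The variable names are made up for illustration. As far as I know, backquote escapes are only interpreted inside double-quoted strings, while single-quoted strings keep every character literally.

```powershell
# Double quotes: `n becomes a real newline, so this string prints on two lines.
$twoLines = "one`ntwo"

# Single quotes: the backquote and the n are kept as ordinary characters.
$literal = 'one`ntwo'

# Backslashes are ordinary characters, so Windows paths need no escaping.
$path = "C:\new\temp"

$twoLines
$literal
$path
```

The last string is the kind of thing that would go wrong if backslash were the escape character, since the `\n` and `\t` inside the path would turn into a newline and a tab.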

Update: It occurred to me after the original post that there’s an even simpler way to display the path.

$env:path.split(';')
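Once the path is split into separate entries, it is easy to go a step further. The following is only a sketch of one way to do that: `Get-PathEntry` is a hypothetical helper name I made up, not a built-in cmdlet, and it assumes a Windows-style path with semicolon separators. It drops empty entries, such as the one left by a trailing semicolon, and reports whether each directory exists.

```powershell
# Hypothetical helper: list each PATH entry and whether the directory exists.
function Get-PathEntry {
    param(
        # Defaults to the current PATH; pass another string to inspect it instead.
        [string]$PathValue = $env:Path
    )

    $PathValue -split ';' |
        Where-Object { $_.Trim() -ne '' } |   # skip empty or blank entries
        ForEach-Object {
            [pscustomobject]@{
                Directory = $_
                Exists    = Test-Path -LiteralPath $_
            }
        }
}

Get-PathEntry
```

Entries with `Exists` equal to `False` are candidates for cleanup, for example directories left behind by uninstalled software.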