There are tricks for determining whether a number is divisible by various primes, but many of these tricks have to be applied one at a time. You can make a procedure for testing divisibility by any prime p that is easier than having to carry out long division, but these rules are of little use if every one of them is different.

Say I make a rule for testing whether a number is divisible by 59. That’s great, if you routinely need to test divisibility by 59. Maybe you work for a company that, for some bizarre reason, ships widgets in boxes of 59 and you frequently have to test whether numbers are multiples of 59.

When you want to factor numbers, you’d like to test divisibility by a set of primes at once, using fewer separate algorithms, and taking advantage of work you’ve already done.

John Conway came up with his 150 Method to test for divisibility by a sequence of small primes. This article explains how Conway’s 150 Method and a couple of variations work. The core idea behind Conway’s 150 Method, his 2000 Method, and analogous methods developed by others is this:

- Find a range of integers, near a round number, that contains a lot of distinct prime factors.

- Reduce your number modulo the round number, then test for divisibility sequentially, reusing work.

Conway’s 150 Method starts by taking the quotient and remainder by 150. And you’ll never guess what his 2000 Method does. :)

This post will focus on the pattern behind Conway’s method, and similar methods. For examples and practical tips on carrying out the methods, see the paper linked above and a paper I’ll link to below.

The 150 Method

Conway exploited the fact that the numbers 152 through 156 are divisible by a lot of primes: 2, 3, 5, 7, 11, 13, 17, 19, and 31.
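As a quick check on that list, here is a small Python sketch that factors each number from 152 through 156 by trial division. The helper name `prime_factors` is my own, chosen for illustration.

```python
def prime_factors(m):
    """Return the distinct prime factors of m, found by trial division."""
    factors = []
    d = 2
    while d * d <= m:
        if m % d == 0:
            factors.append(d)
            while m % d == 0:
                m //= d
        d += 1
    if m > 1:
        factors.append(m)  # whatever remains is itself prime
    return factors

for m in range(152, 157):
    print(m, prime_factors(m))
# 152 [2, 19]
# 153 [3, 17]
# 154 [2, 7, 11]
# 155 [5, 31]
# 156 [2, 3, 13]
```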

He starts his method with 150 rather than 152 because 150 is a round number and easier to work with. We start by taking the quotient and remainder by 150.

Say n = 150q + r. Then n – 152q = r – 2q. If n has three or four digits, q only has one or two digits, and so subtracting small multiples of q is relatively easy.

Since 19 divides 152, we can test whether n is divisible by 19 by testing whether r – 2q is divisible by 19.

The next step is where sequential testing saves effort. We want to subtract off a multiple of 153 to test for divisibility by 17, because 17 divides 153. But we don’t have to start over. We can reuse our work from the previous step.

We want n – 153q = (n – 152q) – q, and we’ve already calculated n – 152q in the previous step, so we only need to subtract q.

The next step is to find n – 154q, and that equals (n – 153q) – q, so again we subtract q from the result of the previous step. We repeat this process, subtracting q each time, and testing for divisibility by a new set of primes each time.
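The steps above can be sketched in Python. This is only an illustration of the arithmetic, not a description of how Conway carried it out mentally; the names `conway_150` and `PRIMES_BY_STEP` are my own.

```python
# Primes dividing 150 + k for k = 2, ..., 6, i.e. the numbers 152 through 156.
PRIMES_BY_STEP = {
    2: [2, 19],     # 152
    3: [3, 17],     # 153
    4: [2, 7, 11],  # 154
    5: [5, 31],     # 155
    6: [2, 3, 13],  # 156
}

def conway_150(n):
    """Return the primes among 2, 3, 5, 7, 11, 13, 17, 19, 31 that divide n."""
    q, r = divmod(n, 150)
    value = r - 2 * q  # equals n - 152q
    found = set()
    for k in range(2, 7):
        # value equals n - (150 + k)q, so it is congruent to n
        # modulo every prime factor of 150 + k.
        for p in PRIMES_BY_STEP[k]:
            if value % p == 0:
                found.add(p)
        value -= q  # reuse the previous result: subtract q once more
    return sorted(found)

print(conway_150(1001))  # [7, 11, 13]
print(conway_150(3553))  # [11, 17, 19]
```

For n = 1001 we get q = 6 and r = 101, so the successive values are 89, 83, 77, 71, and 65. The value 77 reveals the factors 7 and 11, and 65 reveals 13.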

The 2000 Method

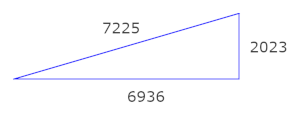

Conway’s more extensive method exploited the fact that the numbers 1998 through 2021 are divisible by all primes up to 67. So he would start by taking the quotient and remainder by 2000, which is really easy to do.
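The claim about primes up to 67 is easy to check by brute force. Here is a minimal Python sketch; the helper names `is_prime` and `first_multiple` are mine, introduced only for this check.

```python
def is_prime(m):
    """Trial-division primality test, adequate for small m."""
    if m < 2:
        return False
    d = 2
    while d * d <= m:
        if m % d == 0:
            return False
        d += 1
    return True

def first_multiple(p, lo=1998, hi=2021):
    """Return the first multiple of p in [lo, hi], or None if there is none."""
    for m in range(lo, hi + 1):
        if m % p == 0:
            return m
    return None

small_primes = [p for p in range(2, 68) if is_prime(p)]
coverage = {p: first_multiple(p) for p in small_primes}
missing = [p for p, m in coverage.items() if m is None]

print(missing)       # [] : every prime up to 67 divides something in the range
print(coverage[59])  # 2006
print(coverage[67])  # 2010
```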

Say n = 2000q + r. Then we would add (or subtract) q each time.

You could start with r, then test r for divisibility by the factors of 2000, then test r – q for divisibility by the factors of 2001, then test r – 2q for divisibility by the factors of 2002, and so on up to testing r – 21q for divisibility by the factors of 2021. Then you’d need to go back and test r + q for divisibility by the factors of 1999 and test r + 2q for divisibility by the factors of 1998.

In principle that’s how Conway’s 2000 Method works. In practice, he did something more clever.

Many of the small primes, including 2, 3, 5, 7, 11, and 13, are factors of numbers in the narrower range 1998 through 2002, so it makes sense to test this smaller range first, hoping for early wins. Also, there’s no need to test divisibility by the factors of 1999 because 1999 is prime.

Conway tested r – kq for k = -2 through 21, but not sequentially. He would try out the values of k in an order most likely to terminate the factoring process early.
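Here is a rough Python sketch of how the order of k affects when the first factor turns up. I don't know the order Conway actually used, so `NEAR_FIRST` below is a hypothetical ordering made up for illustration, and the names `first_hit`, `SEQUENTIAL`, and `prime_factors` are my own.

```python
def prime_factors(m):
    """Return the distinct prime factors of m, found by trial division."""
    factors = []
    d = 2
    while d * d <= m:
        if m % d == 0:
            factors.append(d)
            while m % d == 0:
                m //= d
        d += 1
    if m > 1:
        factors.append(m)
    return factors

def first_hit(n, order):
    """Try k in the given order; return (k, p) for the first prime p
    dividing both 2000 + k and n, or None if no such prime is found."""
    q, r = divmod(n, 2000)
    for k in order:
        value = r - k * q  # equals n - (2000 + k)q
        for p in prime_factors(2000 + k):
            if value % p == 0:
                return k, p
    return None

# The straightforward order: 2000 up to 2021, then back to 1999 and 1998.
SEQUENTIAL = list(range(0, 22)) + [-1, -2]

# Hypothetical order (an assumption, not Conway's): 1998 through 2002 first,
# skipping the prime 1999, then the rest.
NEAR_FIRST = [0, 1, 2, -2] + list(range(3, 22))

n = 3589  # 37 * 97
print(first_hit(n, SEQUENTIAL))  # (-2, 37), found on the last value of k tried
print(first_hit(n, NEAR_FIRST))  # (-2, 37), found on the fourth value of k tried
```

Both orders find the same factor, since 37 divides 1998, but the second reaches it after four values of k rather than twenty-four.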

The 10,000 Method

This paper gives a much more extensive approach to mental factoring than Conway’s 150 Method. The authors, Hilarie Orman and Richard Schroeppel, outline a strategy for factoring any six-digit number. Conway’s rule is more modest, intended for three- and four-digit numbers.

Orman and Schroeppel suggest a sequence of factoring methods, including more advanced techniques to use after you’ve tried testing for divisibility by small primes. One of the techniques in the paper might be called the 10,000 Method by analogy to Conway’s method, though the authors don’t call it that. They call it “check the m’s” for reasons that make more sense if you read the paper.

The 10,000 Method is much like the 2000 Method. The numbers 10,001 through 10,019 have a lot of prime factors, and the method tests for divisibility by these factors sequentially, taking advantage of previous work at each step, just as Conway’s methods do. The authors do not backtrack the way Conway did; they test numbers in order. However, they do skip over some numbers, just as Conway skipped over 1999.