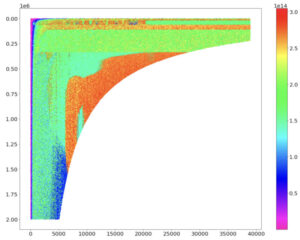

Large language models display emergent behaviors: when the parameter count is scaled past a certain value, the LLM suddenly becomes capable of performing a task that was not possible at a smaller size. Some argue that the abruptness of this change is merely an artifact of how it is measured. Even so, many would like to understand, predict, and even facilitate the emergence of these capabilities.

The following is not a mathematical proof, but a plausibility argument as to why such behavior should not be surprising, along with a possible mechanism. I’ll start with simple cases and work up to more complex ones.

In nature

An obvious point. Emergence is ubiquitous in nature. Ice near the freezing point that is slightly heated suddenly becomes drinkable (phase change). An undrivable car with three wheels gets a fourth wheel and is suddenly drivable. Nonlinearity exists in nature.

In machine learning

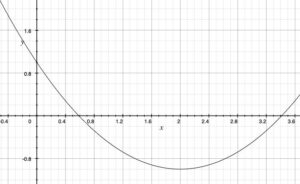

A simple example: consider fitting N arbitrary points (with distinct x-values) in one dimension by linear regression on a monomial basis. For a basis of degree less than N-1, for most possible sets of data points (excluding “special” cases such as collinear points), the regression error will be nonzero, and its reciprocal, the accuracy, will be some finite value. Raise the maximum degree to N-1 (N monomials, hence N parameters), and suddenly the error drops to zero and the accuracy jumps to infinity.
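The polynomial example can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper name `fit_error`, the choice of six equally spaced points, and the random targets are illustrative assumptions, not part of the original argument.

```python
import numpy as np

def fit_error(x, y, degree):
    """RMS residual of a least-squares polynomial fit of the given degree."""
    # Columns are the monomials 1, x, x^2, ..., x^degree.
    A = np.vander(x, degree + 1, increasing=True)
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(np.sqrt(np.mean((A @ coeffs - y) ** 2)))

rng = np.random.default_rng(0)
n_points = 6
x = np.linspace(-1.0, 1.0, n_points)  # distinct x-values (assumed for illustration)
y = rng.normal(size=n_points)         # arbitrary targets

# Error for every maximum degree from 0 up to n_points - 1.
errors = {d: fit_error(x, y, d) for d in range(n_points)}
for d, e in errors.items():
    print(f"degree {d}: RMS error {e:.3e}")
```

The error should stay clearly nonzero for every degree below `n_points - 1` and then fall to roughly machine precision at degree `n_points - 1`, where the polynomial interpolates the data.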

When using k-means clustering, if the data has N clusters and one runs k-means with K<N cluster centers, the error will be significant, but when K=N, suddenly the cluster centers can model all clusters well, and the error drops dramatically.
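A small experiment illustrates the k-means case. This is a sketch under stated assumptions: four well-separated synthetic clusters, a plain Lloyd's iteration written by hand, and a deterministic farthest-point initialization chosen only to keep the result reproducible. The function name `kmeans_inertia` and all data parameters are hypothetical.

```python
import numpy as np

def kmeans_inertia(points, k, n_iter=50):
    """Run Lloyd's algorithm and return the final sum of squared distances."""
    # Farthest-point initialization: deterministic, spreads initial centers out.
    centers = [points[0]]
    for _ in range(1, k):
        d2 = np.min([np.sum((points - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(points[np.argmax(d2)])
    centers = np.array(centers)

    for _ in range(n_iter):
        # Assign each point to its nearest center.
        d2 = np.sum((points[:, None, :] - centers[None, :, :]) ** 2, axis=2)
        labels = np.argmin(d2, axis=1)
        # Move each center to the mean of its assigned points.
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)

    d2 = np.sum((points[:, None, :] - centers[None, :, :]) ** 2, axis=2)
    return float(np.sum(np.min(d2, axis=1)))

rng = np.random.default_rng(0)
true_centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]])
points = np.vstack([c + 0.3 * rng.normal(size=(50, 2)) for c in true_centers])

# Clustering error for K = 1..5 centers on data with N = 4 true clusters.
inertia = {k: kmeans_inertia(points, k) for k in range(1, 6)}
for k, v in inertia.items():
    print(f"K={k}: error {v:.1f}")
```

With K below 4, at least one center has to cover two distant clusters, so the error stays large; at K=4 it should drop by well over an order of magnitude, and adding a fifth center helps only marginally.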

In algorithms

Consider all Boolean circuits composed from some fixed, logically complete set of gate types. Now consider the goal of constructing a Boolean circuit that takes a single byte representing the integer N and increments it to N+1, modulo 256 (8 bits input, 8 bits output). Clearly, such a circuit exists: for example, the standard chain of 1-bit add-and-carry circuits. Note that one can, in principle, enumerate all possible circuits of finite gate count. It follows that an integer K>0 exists for which no circuit with fewer than K gates solves the problem, but some circuit with K gates does. The standard chain of eight 1-bit adders might be such a minimizer, or maybe the optimal circuit is more exotic (for example see here, though this method is not guaranteed to compute a minimizer).
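The enumeration argument can be made concrete on a much smaller problem. The sketch below assumes a gate set consisting only of 2-input NAND (which is logically complete) and searches exhaustively for a 2-bit incrementer rather than the 8-bit one, since brute force over byte-wide circuits is not practical. The function name `solvable` and the truth-table encoding are illustrative choices.

```python
from itertools import combinations_with_replacement

def solvable(wires, targets, mask, budget):
    """True if some circuit of at most `budget` NAND gates yields all targets.

    Each wire is a truth table packed into an integer, one bit per input row.
    """
    if targets.issubset(wires):
        return True
    if budget == 0:
        return False
    for a, b in combinations_with_replacement(wires, 2):
        new_wire = ~(a & b) & mask  # NAND of two existing wires
        # A wire duplicating an existing truth table cannot help.
        if new_wire not in wires and solvable(wires + [new_wire], targets, mask, budget - 1):
            return True
    return False

# Input rows are indexed by i = 2*a1 + a0, for i = 0..3.
A0, A1 = 0b1010, 0b1100       # truth tables of the two input bits
OUT0, OUT1 = 0b0101, 0b0110   # NOT a0, and a0 XOR a1: the 2-bit incrementer

results = {k: solvable([A0, A1], {OUT0, OUT1}, 0b1111, k) for k in range(7)}
threshold = min(k for k, ok in results.items() if ok)
for k, ok in results.items():
    print(f"gate budget {k}: {'solved' if ok else 'not solved'}")
```

Every budget below `threshold` reports "not solved" and every budget from `threshold` upward reports "solved", which is the all-or-nothing threshold K described above, here for a toy task.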

One would thus see this capability potentially emerge as soon as one reaches a gate budget of K gates. Now, one could argue that for a smaller gate budget, a partial result might be possible, for example, incrementing any 7-bit number—so the increase in capability is continuous, not emergent or wholly new. However, if all you care about is correctly incrementing any byte (for example, for manipulating ASCII text), then it’s all or nothing; there’s no partial credit. Even so, the gate budget required for incrementing 8 bits compared to only 7-bit integers is only slightly higher, but this minor increase in gate count actually doubles the quantity of integers that can be incremented, which might be perceived as a surprising, unexpected (emergent) jump.
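The comparison between the 7-bit and 8-bit incrementers can be sketched by simulating the standard ripple chain. This assumes one XOR and one AND gate per bit (a half adder), which is a simple but not necessarily minimal construction; the function name `increment` is hypothetical.

```python
def increment(x, n_bits):
    """Increment the low n_bits of x with a chain of half adders.

    Bits above n_bits pass through unchanged. Returns (result, gate_count).
    """
    carry = 1  # adding 1 is the same as an initial carry-in of 1
    result = x
    gates = 0
    for i in range(n_bits):
        bit = (x >> i) & 1
        total = bit ^ carry   # XOR gate: sum bit
        carry = bit & carry   # AND gate: carry out
        gates += 2
        result = (result & ~(1 << i)) | (total << i)
    return result, gates

for n_bits in (7, 8):
    gates = increment(0, n_bits)[1]
    # How many n-bit integers are incremented correctly modulo 2**n_bits.
    covered = sum(increment(x, n_bits)[0] == (x + 1) % (1 << n_bits)
                  for x in range(1 << n_bits))
    # Whether the circuit is exact on every byte, modulo 256.
    exact_on_bytes = all(increment(x, n_bits)[0] == (x + 1) % 256
                         for x in range(256))
    print(f"{n_bits} bits: {gates} gates, {covered} integers covered, "
          f"exact on all bytes: {exact_on_bytes}")
```

In this construction two extra gates double the number of integers covered, and only the 8-bit chain passes the strict "every byte" test.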

In LLMs

The parameter count of an LLM defines a certain bit budget. This bit budget must be spread across many, many tasks the final LLM will be capable of, as defined by the architecture and the training process (in particular, the specific mix of training data). These tasks are implemented as “algorithms” (circuits) within the LLM. The algorithms are mixed together and (to some extent) overlap in a complex way that is difficult to analyze.

Suppose one of these desired capabilities is some task X. Suppose all possible input/output pairs for this operation are represented in the training data (or, maybe not—maybe some parts of the algorithm can be interpolated from the training data). The LLM is trained with SGD, typically with 2-norm minimization. The unit ball in the 2-norm is a sphere in high dimensional space. Thus “all directions” of the loss are pressed down equally by the minimization process—which is to say, the LLM is optimized on all the inputs for many, many tasks, not just task X. The limited parameter bit budget must be spread across many, many other tasks the LLM must be trained to do. As LLMs of increasing size are trained, at some point enough parameter bits in the budget will be allocatable to represent a fully accurate algorithm for task X, and at this point the substantially accurate capability to do “task X” will be perceivable—“suddenly.”

Task X could be the 8-bit incrementer, which from an optimal circuit standpoint would manifest emergence, as described above. However, due to the weakness of the SGD training methodology and possibly the architecture, there is evidence that LLM training does not learn optimal arithmetic circuits at all but instead does arithmetic by a “bag of heuristics” (which incidentally really is, itself, an algorithm, albeit a piecemeal one). In this case, gradually adding more and more heuristics might be perceived to increase the number of correct answers in a somewhat more incremental way, to be sure. However, this approach is not scalable—to perform accurate arithmetic for any number of digits, if one does not use an exact arithmetic algorithm or circuit, one must use increasingly more heuristics to increase coverage to try to capture all possible inputs accurately. And still, transitioning from an approximate to an exact 8-bit incrementer might in practice be perceived as an abrupt new capability, albeit a small one for this example.
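The contrast between a bag of heuristics and an exact algorithm can be caricatured in a few lines. This is a deliberately crude toy, not a model of what an LLM does internally: each "heuristic" is assumed to be a single memorized input/output pair, and the names `heuristic_incrementer`, `exact_incrementer`, and `accuracy` are hypothetical.

```python
import random

def heuristic_incrementer(n_rules, seed=0):
    """A toy 'bag of heuristics': a lookup table memorizing n_rules byte increments."""
    rng = random.Random(seed)
    known = rng.sample(range(256), n_rules)
    table = {x: (x + 1) % 256 for x in known}
    # Inputs without a rule fall back to returning the input unchanged,
    # which is never the correct increment.
    return lambda x: table.get(x, x)

def exact_incrementer(x):
    """The exact algorithm: correct on every byte with a fixed-size rule."""
    return (x + 1) % 256

def accuracy(f):
    """Fraction of the 256 possible bytes that f increments correctly."""
    return sum(f(x) == (x + 1) % 256 for x in range(256)) / 256

for n_rules in (0, 64, 128, 192, 256):
    print(f"{n_rules:3d} heuristics: accuracy {accuracy(heuristic_incrementer(n_rules)):.2f}")
print(f"exact algorithm: accuracy {accuracy(exact_incrementer):.2f}")
```

In this toy, accuracy grows in proportion to the number of memorized rules, and full coverage needs as many rules as there are inputs, whereas the exact algorithm covers every input at a fixed cost. That is the scalability gap described above.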

One could alternatively consider tool use (for example, a calculator function that is external to the LLM proper), but then a new tool must be written for every new task, and the LLM needs to understand how to use the tool. (Maybe at some point LLMs will know how to write and use their own algorithmic tools?)

Predicting emergence

The real question is how we can predict when a new LLM will achieve some new capability X. For example, X = “Write a short story that resonates with the social mood of the present time and is a runaway hit” (and do the same thing again once a year based on new data, indefinitely into the future without failure). We don’t know an “algorithm” for this, and we can’t even begin to guess the required parameter budget or the training data needed. That’s the point of using an LLM: its training internally “discovers” new, never-before-seen algorithms from data, algorithms that would be difficult for humans to formulate or express from first principles. Perhaps there is some indirect way of predicting the emergence of such X, but it is not obvious how to predict it directly.

Conclusion

Based on these examples, it seems not at all surprising that LLMs exhibit emergent behaviors, though encountering them in practice may still be startling. Predicting them may be possible to a limited extent, but in the general case it seems really hard.

Do you have any thoughts? If so, please leave them in the comments.