In the context of programming languages, “magic” is often a pejorative term for code that does something other than what it appears to do.

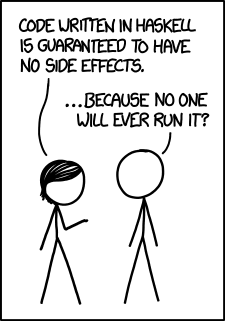

Programmers seem to have a love/hate relationship with magic. Even people who say that don’t like magic (e.g. because it’s hard to debug) end up using it. The Haskell community prides itself on having a transparent language with no magic, and yet monads are slightly magical. The whole purpose of a monad is to hide explicit data flow, though in a principled way. Haskell’s do notation is more magical, and templates are even more magical still. (However, I do hear some Haskellers express disdain for templates.)

People who like magic tend to use the word “automagic” instead. It means about the same thing as “magic” but with a positive connotation.

To conclude with a couple sweeping generalizations, magic fans tend to be tool-oriented (such as Microsoft developers) while magic detractors tend to be language-oriented (such as Haskell developers ).

Update: Someone asked me on Twitter about the difference between abstraction and magic. I’d say abstraction hides details, but magic is actively misleading or ironic.