This post is a set of footnotes to my previous post on the Lindy effect. This effect says that creative artifacts have lifetimes that follow a power law distribution, and hence the things that have been around the longest have the longest expected future.

Works of art

The previous post looked at technologies, but the Lindy effect would apply, for example, to books, music, or movies. This suggests the future will be something like a mirror of the present. People have listened to Beethoven for two centuries, the Beatles for about four decades, and Beyoncé for about a decade. So we might expect Beyoncé to fade into obscurity a decade from now, the Beatles four decades from now, and Beethoven a couple centuries from now.

Disclaimer

Lindy effect estimates are crude, only considering current survival time and no other information. And they’re probability statements. They shouldn’t be taken too seriously, but they’re still interesting.

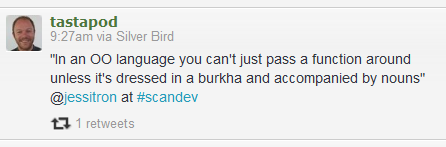

Programming languages

Yesterday was the 25th birthday of the Perl programming language. The Go language was announced three years ago. The Lindy effect suggests there’s a good chance Perl will be around in 2037 and that Go will not. This goes against your intuition if you compare languages to mechanical or living things. If you look at a 25-year-old car and a 3-year-old car, you expect the latter to be around longer. The same is true for a 25-year-old accountant and a 3-year-old toddler.
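To put rough numbers on the Perl and Go comparison, here is a minimal sketch. It assumes lifetimes follow a Pareto distribution, so the chance that something of age b lasts s more years is (b/(b+s))^c. The exponent c = 1 and the function name `survival_probability` are assumptions made for illustration, not estimates from data.

```python
def survival_probability(age, extra_years, c=1.0):
    """P(lifetime > age + extra_years | lifetime > age) for a Pareto tail.

    c is the Pareto exponent; c = 1.0 is an assumed value, not a fitted one.
    """
    return (age / (age + extra_years)) ** c


# Ages at the time of writing, and the 25 years from then until 2037.
perl = survival_probability(age=25, extra_years=25)
go = survival_probability(age=3, extra_years=25)

print(f"Perl: {perl:.2f}, Go: {go:.2f}")  # Perl: 0.50, Go: 0.11
```

A larger exponent would lower both probabilities, but the older language always comes out ahead.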

Life expectancy

Someone commented on the original post that for a British female, life expectancy is 81 years at birth, 82 years at age 20, and 85 years at age 65. Your life expectancy goes up as you age. But your expected number of additional years of life does not: it falls from 81 at birth to 62 at age 20 and 20 at age 65. By contrast, imagine a pop song that has a life expectancy of 1 year when it comes out. If it’s still popular a year later, we could expect it to be popular for another couple of years. And if people are still listening to it 30 years after it came out, we might expect it to have another 30 years of popularity.
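The contrast can be checked with a little arithmetic. The sketch below uses the life expectancy figures quoted above. The helper `lindy_remaining` and its exponent c = 2 are assumptions, chosen so that expected remaining life equals current age, as in the 30-year-old song example.

```python
# Total life expectancy by current age, from the figures quoted above.
life_expectancy = {0: 81, 20: 82, 65: 85}

# Expected additional years = total life expectancy minus current age.
remaining = {age: total - age for age, total in life_expectancy.items()}
print(remaining)  # {0: 81, 20: 62, 65: 20}


def lindy_remaining(age, c=2.0):
    """Expected additional lifetime under a Pareto model with exponent c > 1.

    The conditional mean at a given age is age * c / (c - 1), so the
    expected remainder is age / (c - 1). c = 2.0 is an assumed value.
    """
    return age / (c - 1)


print(lindy_remaining(30))  # 30.0
```

For people the remaining years shrink with age, while under the Pareto model they grow in proportion to age.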

Mathematical details

In my original post I looked at a simplified version of the Pareto density:

$$f(t) = \frac{c}{t^{c+1}}$$

starting at t = 1. The more general Pareto density is

$$f(t) = \frac{c\,a^c}{t^{c+1}}$$

and starts at t = a. If a random variable X has a Pareto distribution with exponent c and starting time a, then the conditional distribution of X given that X is at least b, for some b ≥ a, is another Pareto distribution, now with the same exponent but starting time b. Provided c > 1, so that the mean is finite, the expected value of X a priori is $a\,c/(c-1)$, but conditional on having survived to time b, the expected value is $b\,c/(c-1)$. That is, the expected value has gone up in proportion to the ratio of starting times, b/a.
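The conditional expectation can be checked numerically. This is a minimal sketch rather than a proof: it draws Pareto samples with Python's standard `random.paretovariate`, keeps those that survive past b, and compares their average with b c/(c-1). The values a = 1, c = 3, b = 2, the sample size, and the seed are arbitrary choices for illustration.

```python
import random


def pareto_mean(start, c):
    """Mean of a Pareto distribution with exponent c > 1 starting at `start`."""
    if c <= 1:
        raise ValueError("the mean is infinite unless c > 1")
    return start * c / (c - 1)


def simulated_conditional_mean(a, c, b, n=200_000, seed=0):
    """Average of Pareto(a, c) samples, restricted to those that are >= b."""
    rng = random.Random(seed)
    # paretovariate(c) starts at 1, so multiply by a to start at a.
    samples = (a * rng.paretovariate(c) for _ in range(n))
    survivors = [x for x in samples if x >= b]
    return sum(survivors) / len(survivors)


a, c, b = 1.0, 3.0, 2.0          # illustrative values only
exact = pareto_mean(b, c)        # b c / (c - 1)
approx = simulated_conditional_mean(a, c, b)

print(f"a priori mean:       {pareto_mean(a, c):.3f}")
print(f"conditional (exact): {exact:.3f}")
print(f"conditional (sim):   {approx:.3f}")
```

With these values the a priori mean is 1.5 and the exact conditional mean is 3, a ratio of b/a = 2. The simulated figure should land close to 3, though heavy tails make it noisier than a comparable normal sample.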