Sometimes making a task just a little simpler can make a huge difference. Making something 5% easier might make you 20% more productive. Or 100% more productive.

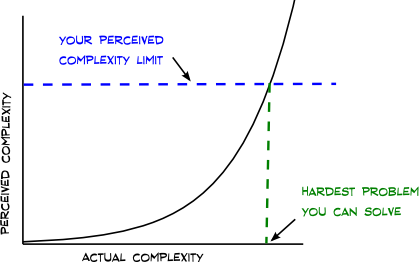

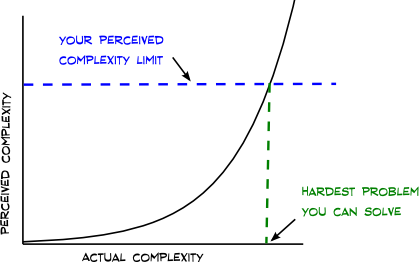

To see how valuable a little simplification can be, turn it around and think about making things more complicated. A small increase in complexity might go unnoticed. But as complexity increases, your subjective perception of complexity increases even more. As you start to become stressed out, small increases in objective complexity produce big increases in perceived complexity. Eventually any further increase in complexity is fatal to creativity because it pushes you over your complexity limit.

Clay Shirky discusses how this applies to information overload. He points out that we can feel like the amount of information coming in has greatly increased when it actually hasn’t. He did a little experiment to quantify this. When he thought that the amount of spam he was receiving had doubled, he would find that it had actually increased by about 25%. Turning this around, you may be able to feel like you’ve cut your amount of spam in half by just filtering out 20% of it.
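The arithmetic behind that last step can be sketched in a few lines. This assumes, purely for illustration, that perception works the same way in both directions: if a 25% increase feels like a doubling, then undoing that increase feels like a halving. The function name is hypothetical.

```python
def reduction_to_undo(increase):
    """Fraction you must remove to undo a given fractional increase."""
    return 1 - 1 / (1 + increase)

# A 25% increase in spam felt like a doubling.
# Going from 1.25 back down to 1.00 means removing this fraction:
cut = reduction_to_undo(0.25)
print(f"{cut:.0%}")  # prints 20%
```

The reduction is 20% rather than 25% because it is measured against the larger, post-increase volume.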

A small decrease in complexity can be a big relief if you’re under stress. It may be enough to make the difference between a frazzled mental state and a calm one (moving out of F-state into C-state). If you’re up against your maximum complexity, a small simplification could make the difference between a problem being solvable and being unsolvable.

Small simplifications are often dismissed as unimportant when they’re evaluated in the small. Maybe a new term makes it possible to refer to an idea in three syllables rather than six. No big deal if it’s a term you don’t use much. But if it’s a term you use all the time, it makes a difference. That’s why every group has its own jargon.

Suppose one application takes five mouse clicks to do what another can do in three. Maybe that’s no big deal. But if you’re under stress, those two mouse clicks might make the difference between deciding a finishing touch is worthwhile and deciding it isn’t.

Suppose one programming language takes five lines of code to do what another language can do in four lines. So what? How long does it take to type one line of code? But multiply that by 10. Maybe you see 40 lines of code on your laptop at once but you can’t see 50. Or multiply by 10 again. Maybe you can hold 400 lines of code in your head but you can’t hold 500. Language features dismissed as “syntactic sugar” can make a real difference.
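As one possible illustration, a list comprehension is often described as syntactic sugar for an explicit loop. Python is used here only as an example, and the variable names are made up. Both versions below build the same list.

```python
numbers = range(10)

# Explicit loop: four lines
even_squares_loop = []
for n in numbers:
    if n % 2 == 0:
        even_squares_loop.append(n * n)

# List comprehension: one line
even_squares_sugar = [n * n for n in numbers if n % 2 == 0]

# Both approaches produce the same result
assert even_squares_loop == even_squares_sugar
```

Saving three lines once is trivial, but a file full of such loops can be the difference between fitting on one screen and not.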

When you’re stressed and feel like only a radical change will do any good, think again. A small simplification might be enough to give you some breathing room by pulling you back down below your complexity limit.

Related post: What happens when you add another teller?