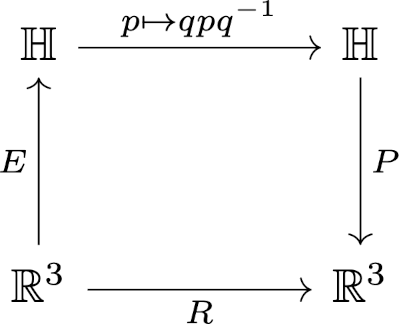

In the previous post I wrote about representing rotations with quaternions. This representation has several advantages, such as making it clear how rotations compose. Rotations are often represented as matrices, and so it’s useful to be able to go between the two representations.

A unit-length quaternion (q0, q1, q2, q3) represents a rotation by an angle θ around an axis in the direction of (q1, q2, q3) where cos(θ/2) = q0. The corresponding rotation matrix is given below.

Going the other way around, inferring a quaternion representation from a rotation matrix, is harder. Here is a mathematically correct but numerically suboptimal method known [1] as the Chiaverini-Siciliano method.

Here sgn is the sign function; sgn(x) equals 1 if x is positive and −1 if x is negative. Note that the components only depend on the diagonal of the rotation matrix, aside from the sign terms. Better numerical algorithms make more use of the off-diagonal elements.

Accounting for degrees of freedom

Something seems a little suspicious here. Quaternions contain four real numbers, and 3 by 3 matrices contain nine. How can four numbers determine nine numbers? And going the other way, out of the nine, we essentially choose three that determine the four components of a quaternion.

Quaternions have four degrees of freedom, but we’re using unit quaternions, so there are basically three degrees of freedom. Likewise orthogonal matrices have three degrees of freedom. An axis of rotation is a point on a sphere, so that has two degrees of freedom, and the degree of rotation is the third degree of freedom.

In topological terms, the unit quaternions and the set of 3 by 3 orthogonal matrices are both three dimensional manifolds, and the former is a double cover of the latter. It is a double cover because a unit quaternion q corresponds to the same rotation as −q.

Python code

Implementing the equations above is straightforward.

import numpy as np

def quaternion_to_rotation_matrix(q):

q0, q1, q2, q3 = q

return np.array([

[2*(q0**2 + q1**2) - 1, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],

[2*(q1*q2 + q0*q3), 2*(q0**2 + q2**2) - 1, 2*(q2*q3 - q0*q1)],

[2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), 2*(q0**2 + q3**2) - 1]

])

def rotation_matrix_to_quaternion(R):

r11, r12, r13 = R[0, 0], R[0, 1], R[0, 2]

r21, r22, r23 = R[1, 0], R[1, 1], R[1, 2]

r31, r32, r33 = R[2, 0], R[2, 1], R[2, 2]

# Calculate quaternion components

q0 = 0.5 * np.sqrt(1 + r11 + r22 + r33)

q1 = 0.5 * np.sqrt(1 + r11 - r22 - r33) * np.sign(r32 - r23)

q2 = 0.5 * np.sqrt(1 - r11 + r22 - r33) * np.sign(r13 - r31)

q3 = 0.5 * np.sqrt(1 - r11 - r22 + r33) * np.sign(r21 - r12)

return np.array([q0, q1, q2, q3])

Random testing

We’d like to test the code above by generating random quaternions, converting the quaternions to rotation matrices, then back to quaternions to verify that the round trip puts us back essentially where we started. Then we’d like to go the other way around, starting with randomly generated rotation matrices.

To generate a random unit quaternion, we generate a vector of four independent normal random values, then normalize by dividing by its length. (See this recent post.)

To generate a random rotation matrix, we use a generator that is part of SciPy.

Here’s the test code:

def randomq():

q = norm.rvs(size=4)

return q/np.linalg.norm(q)

def randomR():

return special_ortho_group.rvs(dim=3)

np.random.seed(20250507)

N = 10

for _ in range(N):

q = randomq()

R = quaternion_to_rotation_matrix(q)

t = rotation_matrix_to_quaternion(R)

print(np.linalg.norm(q - t))

for _ in range(N):

R = randomR()

q = rotation_matrix_to_quaternion(R)

T = quaternion_to_rotation_matrix(q)

print(np.linalg.norm(R - T))

The first test utterly fails, returning six 2s, i.e. the round trip vector is as far as possible from the vector we started with. How could that happen? It must be returning the negative of the original vector. Now go back to the discussion above about double covers: q and −q correspond to the same rotation.

If we go back and add the line

q *= np.sign(q[0])

then we standardize our random vectors to have a positive first component, just like the vectors returned by rotation_matrix_to_quaternion.

Now our tests all return norms on the order of 10−16 to 10−14. There’s a little room to improve the accuracy, but the results are good.

Update: I did some more random testing, and found errors on the order of 10−10. Then I was able to create a test case where rotation_matrix_to_quaternion threw an exception because one of the square roots had a negative argument. In [1] the authors get around this problem by evaluating two theoretically equivalent expressions for each of the square root arguments. The expressions are complementary in the sense that both should not lead to numerical difficulties at the same time.

[1] See “Accurate Computation of Quaternions from Rotation Matrices” by Soheil Sarabandi and Federico Thomas for a better numerical algorithm. See also the article “A Survey on the Computation of Quaternions From Rotation Matrices” by the same authors.