I mentioned the other day that the US military standard MIL-STD-105 for statistical sampling procedures lives on in the ASQ/ANSI standard Z1.4. The Department of Defense cancelled their own standard in 1995 in favor of adopting civilian standards, in particular ASQ/ANSI Z1.4.

There are two main differences between the military standard and its replacement. First, the military standard is free and the ANSI standard costs $199. Second, the ANSI standard has better typography. Otherwise the two standards are remarkably similar.

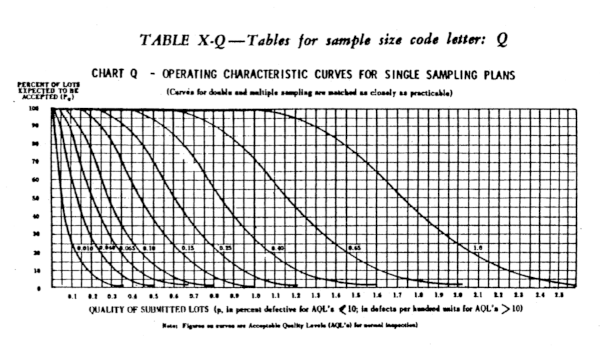

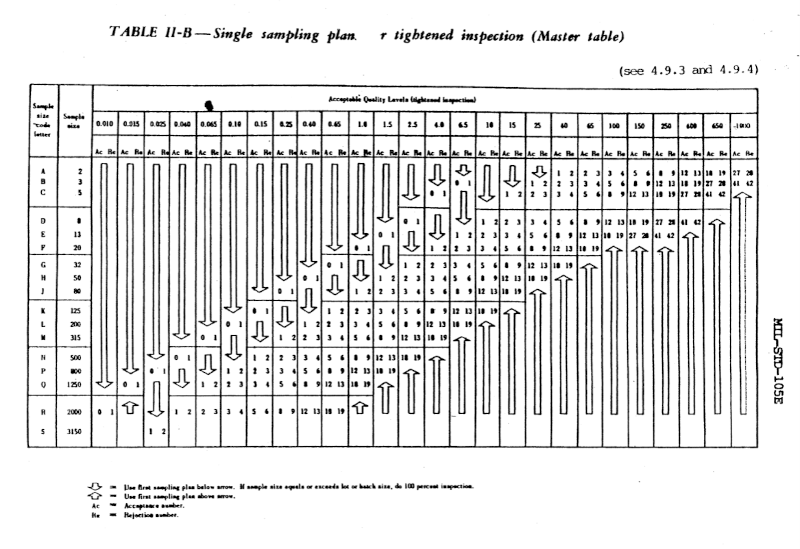

For example, the screenshot from MIL-STD-105 that I posted the other day appears verbatim in ASQ/ANSI Z1.4, except with better typesetting. The table even has the same name: “Table II-B Single sampling plans for tightened inspection (Master table).” Since the former is public domain and the latter is copyrighted, I’ll repeat my screenshot of the former.

Everything I said about the substance of the military standard applies to the ANSI standard. The two give objective, checklist-friendly statistical sampling plans and acceptance criteria. The biggest strength and biggest weakness of these plans is the lack of nuance. One could create more sophisticated statistical designs that are more powerful, but then you lose the simplicity of a more regimented approach.
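To make "sampling plan and acceptance criteria" concrete, here is a minimal sketch of how one might evaluate a single sampling plan: inspect `n` items and accept the lot if at most `c` are defective. The function name and the numbers in the usage line are hypothetical and not taken from either standard's tables, and the binomial model assumes the lot is large relative to the sample.

```python
from math import comb

def acceptance_probability(n, c, p):
    """Probability of accepting a lot under a single sampling plan.

    n: sample size, c: acceptance number (maximum defectives allowed),
    p: true fraction of defective items in the lot.
    Assumes defectives in the sample follow a Binomial(n, p) distribution.
    """
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(c + 1))

# Hypothetical plan: sample 50 items, accept if at most 1 is defective.
for p in (0.01, 0.02, 0.05):
    print(f"defect rate {p:.2f}: P(accept) = {acceptance_probability(50, 1, p):.3f}")
```

Evaluating this over a range of defect rates traces out what is usually called the plan's operating characteristic curve.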

A company could choose to go down both paths, using more informative statistical models for internal use and reporting results from the tests dictated by standards. For example, a company could create a statistical model that takes more information into account and use it to assess the predictive probability of passing the tests required by sampling standards.
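As a rough illustration of that last idea, suppose the internal model summarizes what the company knows about the defect rate as a Beta(a, b) distribution. That is an assumption made here for simplicity; a real model could be much richer. Then the predictive probability of passing a plan with sample size `n` and acceptance number `c` is a beta-binomial CDF. The function names below are hypothetical.

```python
from math import comb, exp, lgamma

def log_beta(a, b):
    """Log of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def predictive_pass_probability(n, c, a, b):
    """Predictive probability of at most c defectives in a sample of n,
    averaging over a Beta(a, b) distribution on the defect rate.
    """
    return sum(
        comb(n, k) * exp(log_beta(k + a, n - k + b) - log_beta(a, b))
        for k in range(c + 1)
    )

# Hypothetical: internal data suggest roughly 2 defects in 200 items,
# encoded as Beta(3, 199) starting from a uniform prior.
print(predictive_pass_probability(n=50, c=1, a=3, b=199))
```

Unlike plugging a single point estimate of the defect rate into the binomial formula, this averages over the uncertainty in the defect rate.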