There are five ways to parenthesize a product of four things:

- ((ab)c)d

- (ab)(cd)

- (a(b(cd))

- (a(bc))d

- (a((bc)d)

In a context where multiplication is not associative, the five products above are not necessarily the same. Maybe all five are different.

This post will give two examples where the products above are all different: octonions and matrix multiplication.

If you’re thinking “But wait: matrix multiplication is associative!” then read further and see in what sense I’m saying it’s not.

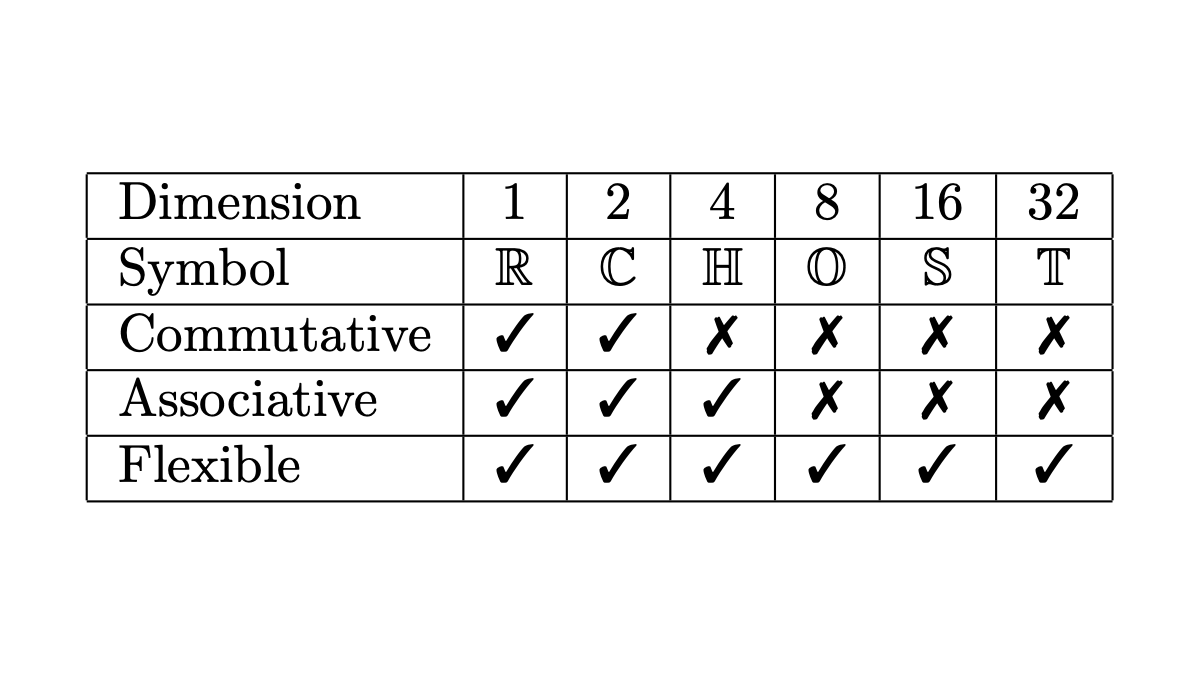

Octonioins

Octonion multiplication is not associative. I wrote a blog post a while back asking how close the octonions come to being associative. That is, if we randomly generate unit-length octonions a, b, and c, we can calculate the norm of

(ab)c − a(bc)

and ask about its expected value. Sometimes for a triple of octonions this value is zero, but on average this expression has norm greater than 1. I estimated the average value via simulation, and later Greg Egan worked out the exact value. His write-up had gone down with the Google+ ship, but recently Greg posted a new version of his notes.

In this post I gave Python code for multiplying octonions using a function called CayleyDickson named after the originators of the algorithm. Let’s rename it m to have something shorter to work with and compute all five ways of associating a product of four octonions.

import numpy as np

def m(x, y):

return CayleyDickson(x, y)

def take_five(a, b, c, d):

return [

m(a, (m(b, m(c, d)))),

m(m(a, b), m(c, d)),

m(m(m(a, b), c), d),

m(a, m(m(b, c), d)),

m(m(a, m(b, c)), d)

]

I first tried products of basis elements, and I only got two different products out of the five ways of associating multiplication, but I only tried a few examples. However, when I tried using four random octonions, I got five different products.

np.random.seed(20220201)

a = np.random.rand(8)

b = np.random.rand(8)

c = np.random.rand(8)

d = np.random.rand(8)

for t in take_five(a, b, c, d):

print(t)

This gave a very interesting result: I got five different results, but only two different real (first) components. The five vectors all differed in the last seven components, but only produced two distinct first components.

[ 2.5180856 -2.61618184 ...]

[ 2.5180856 0.32031027 ...]

[ 2.5180856 1.13177500 ...]

[ 3.0280984 -0.30169446 ...]

[ 3.0280984 -2.36523580 ...]

I repeated this experiment a few times, and the first three results always had the same real component, and the last two results had another real component.

I suspect there’s a theorem that says

Re( ((ab)c)d ) = Re( (ab)(cd) ) = Re( a(b(cd)) )

and

Re( (a(bc))d ) = Re( a((bc)d) )

but I haven’t tried to prove it. If you come with a proof, or a counterexample, please post a link in the comments.

Matrix multiplication

Matrix multiplication is indeed associative, but the efficiency of matrix multiplication is not. That is, any two ways of parenthesizing a matrix product will give the same final matrix, but the cost of the various products are not the same. I first wrote about this here.

This is a very important result in practice. Changing the parentheses in a matrix product can make the difference between a computation being practical or impractical. This comes up, for example, in automatic differentiation and in backpropagation for neural networks.

Suppose A is an m by n matrix and B is an n by p matrix. Then AB is an m by p matrix, and forming the product AB requires mnp scalar multiplications. If C is a p by q matrix, then (AB)C takes

mnp + mpq = mp(n + q)

scalar multiplications, but computing A(BC) takes

npq + mnq = nq(m + p)

scalar multiplications, and in general these are not equal.

Let’s rewrite our multiplication function m and our take_five function to compute the cost of multiplying four matrices of random size.

We’ve got an interesting programming problem in that our multiplication function needs to do two different things. First of all, we need to know the size of the resulting matrix. But we also want to keep track of the number of scalar multiplications the product would require. We have a sort of main channel and a side channel. Having our multiplication function return both the dimension and the cost would make composition awkward.

This is is kind of a fork in the road. There are two ways to solving this problem, one high-status and one low-status. The high-status approach would be to use a monad. The low-status approach would be to use a global variable. I’m going to take the low road and use a global variable. What’s one little global variable among friends?

mults = 0

def M(x, y):

global mults

mults += x[0]*x[1]*y[0]

return (x[0], y[1])

def take_five2(a, b, c, d):

global mults

costs = []

mults = 0; M(a, (M(b, M(c, d)))); costs.append(mults)

mults = 0; M(M(a, b), M(c, d)); costs.append(mults)

mults = 0; M(M(M(a, b), c), d); costs.append(mults)

mults = 0; M(a, M(M(b, c), d)); costs.append(mults)

mults = 0; M(M(a, M(b, c)), d); costs.append(mults)

return costs

Next, I’ll generate five random integers, and group them in pairs as sizes of matrices conformable for multiplication.

dims = np.random.random_integers(10, size=5)

dim_pairs = zip(dims[:4], dims[1:])

c = take_five2(*[p for p in dim_pairs])

When I ran this dims was set to

[3 9 7 10 6]

and so my matrices were of size 3×9, 9×7, 7×10, and 10×6.

The number of scalar multiplications required by each way of multiplying the four matrices, computed by take_five2 was

[1384, 1090, 690, 1584, 984]

So each took a different number of operations. The slowest approach would take more than twice as long as the fastest approach. In applications, matrices can be very long and skinny, or very wide and thin, in which case one approach may take orders of magnitude more operations than another.

Related posts