UTF-8 is a clever way of encoding Unicode text. I’ve mentioned it a couple times lately, but I haven’t blogged about UTF-8 per se. Here goes.

The problem UTF-8 solves

US keyboards can often produce 101 symbols, which suggests 101 symbols would be enough for most English text. Seven bits would be enough to encode these symbols since 2^7 = 128, and that’s what ASCII does. It represents each character with 8 bits since computers work with bits in groups of sizes that are powers of 2, but the first bit is always 0 because it’s not needed. Extended ASCII uses the leftover space in ASCII to encode more characters.

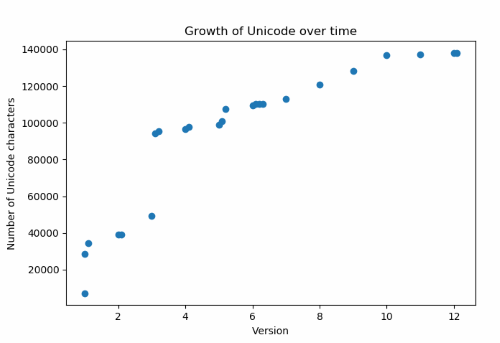

A total of 256 characters might serve some users well, but it wouldn’t begin to let you represent, for example, Chinese. Unicode initially wanted to use two bytes instead of one byte to represent characters, which would allow for 2^16 = 65,536 possibilities, enough to capture a lot of the world’s writing systems. But not all, and so Unicode expanded to four bytes.

If you were to store English text using two bytes for every letter, half the space would be wasted storing zeros. And if you used four bytes per letter, three quarters of the space would be wasted. Without some kind of encoding, every file containing English text would be two or four times larger than necessary. And not just English, but every language that can be represented with ASCII.
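Here is a quick way to see the waste in Python. This is only a sketch: it uses Python's UTF-16 and UTF-32 codecs as stand-ins for "two bytes per letter" and "four bytes per letter," and the sample string is made up for illustration.

```python
text = "Hello, world"  # 12 ASCII characters, chosen only for illustration

# The "-le" codec variants are used so that no byte order mark is prepended.
one_byte = text.encode("ascii")
two_byte = text.encode("utf-16-le")   # two bytes per character for this text
four_byte = text.encode("utf-32-le")  # four bytes per character

print(len(one_byte), len(two_byte), len(four_byte))  # 12 24 48

# Fraction of each encoding that is nothing but zero bytes
zero_fraction_2 = two_byte.count(0) / len(two_byte)
zero_fraction_4 = four_byte.count(0) / len(four_byte)
print(zero_fraction_2, zero_fraction_4)  # 0.5 0.75
```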

UTF-8 is a way of encoding Unicode so that an ASCII text file encodes to itself. No wasted space, beyond the initial bit of every byte ASCII doesn’t use. And if your file is mostly ASCII text with a few non-ASCII characters sprinkled in, the non-ASCII characters just make your file a little longer. You don’t have to suddenly make every character take up twice or four times as much space just because you want to use, say, a Euro sign € (U+20AC).
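A small Python check of both claims, using made-up sample strings: ASCII text encodes to the same bytes under ASCII and UTF-8, and a single Euro sign adds only a couple of extra bytes.

```python
ascii_text = "plain ASCII text"

# An ASCII string produces exactly the same bytes under both encodings.
same_bytes = ascii_text.encode("utf-8") == ascii_text.encode("ascii")
print(same_bytes)  # True

# The Euro sign, U+20AC, takes three bytes in UTF-8.
euro_bytes = "\u20ac".encode("utf-8")
print(euro_bytes)  # b'\xe2\x82\xac'

# Mostly-ASCII text grows only by the extra bytes of the non-ASCII characters.
mixed = "Price: 5 \u20ac"
print(len(mixed), len(mixed.encode("utf-8")))  # 10 12
```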

How UTF-8 does it

Since the first bit of ASCII characters is set to zero, bytes with the first bit set to 1 are unused and can be used specially.

When software reading UTF-8 comes across a byte starting with 1, it counts how many 1’s follow before encountering a 0. For example, in a byte of the form 110xxxxx, there’s a single 1 following the initial 1. Let n be the number of 1’s between the initial 1 and the first 0. The remaining bits in this byte and some bits in the next n bytes will represent a Unicode character. There’s no need for n to be bigger than 3 for reasons we’ll get to later. That is, it takes at most four bytes to represent a Unicode character using UTF-8.

So a byte of the form 110xxxxx says the first five bits of a Unicode character are stored at the end of this byte, and the rest of the bits are coming in the next byte.

A byte of the form 1110xxxx contains four bits of a Unicode character and says that the rest of the bits are coming over the next two bytes.

A byte of the form 11110xxx contains three bits of a Unicode character and says that the rest of the bits are coming over the next three bytes.
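The three cases above, plus the ASCII case, can be sketched as a small Python function. The name `continuation_count` is my own, not part of any library, and the function only classifies a single byte; it does not validate the bytes that follow.

```python
def continuation_count(lead: int) -> int:
    """Return n, the number of following bytes announced by a leading byte."""
    if (lead & 0b1000_0000) == 0:
        return 0  # 0xxxxxxx: plain ASCII, nothing follows
    if (lead & 0b1110_0000) == 0b1100_0000:
        return 1  # 110xxxxx: one more byte
    if (lead & 0b1111_0000) == 0b1110_0000:
        return 2  # 1110xxxx: two more bytes
    if (lead & 0b1111_1000) == 0b1111_0000:
        return 3  # 11110xxx: three more bytes
    # Bytes of the form 10xxxxxx (or 11111xxx) cannot start a character.
    raise ValueError(f"not a valid leading byte: {lead:#04x}")


# The first byte of the Euro sign's encoding is 0xE2 = 11100010.
print(continuation_count("\u20ac".encode("utf-8")[0]))  # 2
```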

Following the initial byte announcing the beginning of a character spread over multiple bytes, bits are stored in bytes of the form 10xxxxxx. Since the initial bytes of a multibyte sequence start with two 1 bits, there’s no ambiguity: a byte starting with 10 cannot mark the start of a new multibyte sequence. That is, UTF-8 is self-punctuating, a property more commonly called self-synchronizing.
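One practical consequence: if you land in the middle of a byte stream, you can find the start of the current character by backing up past bytes of the form 10xxxxxx. Here is a minimal Python sketch, assuming the input is valid UTF-8; the helper name `char_start` is hypothetical.

```python
def char_start(data: bytes, i: int) -> int:
    """Back up from index i to the first byte of the character containing it."""
    # Bytes of the form 10xxxxxx never begin a character, so skip over them.
    while i > 0 and (data[i] & 0b1100_0000) == 0b1000_0000:
        i -= 1
    return i


data = "a\u20acb".encode("utf-8")  # b'a\xe2\x82\xacb'

print(char_start(data, 2))  # 1, the middle of the Euro sign backs up to its first byte
print(char_start(data, 3))  # 1
print(char_start(data, 4))  # 4, the letter b is its own start
```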

So multibyte sequences have one of the following forms.

110xxxxx 10xxxxxx

1110xxxx 10xxxxxx 10xxxxxx

11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
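To make the bit layouts concrete, here is a toy encoder in Python that fills in the x’s by hand. It is a sketch, not a replacement for the built-in codec: the function name `utf8_encode` is my own, and it skips some checks a real encoder performs, such as rejecting surrogate code points. The cutoffs 0x80, 0x800, and 0x10000 follow from the 7, 11, and 16 payload bits available in the one-, two-, and three-byte forms.

```python
def utf8_encode(cp: int) -> bytes:
    """Encode one code point by packing its bits into the forms shown above."""
    if cp < 0:
        raise ValueError("code point must be non-negative")
    if cp < 0x80:  # fits in 7 bits: 0xxxxxxx
        return bytes([cp])
    if cp < 0x800:  # fits in 11 bits: 110xxxxx 10xxxxxx
        return bytes([0b1100_0000 | (cp >> 6),
                      0b1000_0000 | (cp & 0b11_1111)])
    if cp < 0x10000:  # fits in 16 bits: 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0b1110_0000 | (cp >> 12),
                      0b1000_0000 | ((cp >> 6) & 0b11_1111),
                      0b1000_0000 | (cp & 0b11_1111)])
    if cp <= 0x10FFFF:  # fits in 21 bits: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
        return bytes([0b1111_0000 | (cp >> 18),
                      0b1000_0000 | ((cp >> 12) & 0b11_1111),
                      0b1000_0000 | ((cp >> 6) & 0b11_1111),
                      0b1000_0000 | (cp & 0b11_1111)])
    raise ValueError("code point out of Unicode range")


# Compare against Python's own encoder on the Euro sign.
print(utf8_encode(0x20AC) == "\u20ac".encode("utf-8"))  # True
```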

If you count the x’s in the bottom row, there are 21 of them. So this scheme can only represent numbers with up to 21 bits. Don’t we need 32 bits? It turns out we don’t.

Although a Unicode character is ostensibly a 32-bit number, it actually takes at most 21 bits to encode a Unicode character, because the largest code point is U+10FFFF. This is why n, the number of 1’s following the initial 1 at the beginning of a multibyte sequence, only needs to be 1, 2, or 3. The UTF-8 encoding scheme could be extended to allow n = 4, 5, or 6, but this is unnecessary.
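The 21-bit figure is easy to check in Python. The largest Unicode code point is U+10FFFF; the constant name below is my own.

```python
MAX_CODE_POINT = 0x10FFFF  # largest code point Unicode defines

# Number of bits needed to write the largest code point in binary
print(MAX_CODE_POINT.bit_length())  # 21

# Even the largest code point fits in four UTF-8 bytes.
print(len(chr(MAX_CODE_POINT).encode("utf-8")))  # 4

# Python's chr() refuses anything past the end of the Unicode range.
try:
    chr(MAX_CODE_POINT + 1)
    beyond_range_ok = True
except ValueError:
    beyond_range_ok = False
print(beyond_range_ok)  # False
```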

Efficiency

UTF-8 lets you take an ordinary ASCII file and consider it a Unicode file encoded with UTF-8. So UTF-8 is as efficient as ASCII in terms of space. But not in terms of time. If software knows that a file is in fact ASCII, it can take each byte at face value, not having to check whether it is the first byte of a multibyte sequence.
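One way to see the time cost is to ask for the k-th character of a file. The sketch below is illustrative only, with hypothetical function names, and it assumes valid input: with known ASCII the answer is just byte k, while with UTF-8 you have to walk the bytes and count the ones that start a character.

```python
def nth_char_ascii(data: bytes, k: int) -> str:
    """ASCII: character k is simply byte k."""
    return chr(data[k])


def nth_char_utf8(data: bytes, k: int) -> str:
    """UTF-8: scan from the start, counting bytes that begin a character."""
    seen = 0      # number of character starts passed so far
    start = None  # byte index where character k begins, once found
    for i, b in enumerate(data):
        if (b & 0b1100_0000) != 0b1000_0000:  # not a continuation byte
            if start is not None:
                # Reached the next character, so character k ends here.
                return data[start:i].decode("utf-8")
            if seen == k:
                start = i
            seen += 1
    if start is None:
        raise IndexError("character index out of range")
    return data[start:].decode("utf-8")


data = "a\u20acb".encode("utf-8")  # five bytes, three characters
print(nth_char_ascii(b"abc", 2))   # c
print(nth_char_utf8(data, 2))      # b
```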

And while plain ASCII is legal UTF-8, extended ASCII is not. So extended ASCII characters would now take two bytes where they used to take one. My previous post was about the confusion that could result from software interpreting a UTF-8 encoded file as an extended ASCII file.
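Here is a short Python illustration. "Extended ASCII" can mean several different 8-bit encodings; I’m assuming Latin-1 (ISO 8859-1) here purely as a representative example.

```python
word = "caf\u00e9"  # "café", with é = U+00E9

latin1_bytes = word.encode("latin-1")  # assumption: Latin-1 as the extended ASCII
utf8_bytes = word.encode("utf-8")

print(latin1_bytes)  # b'caf\xe9'      (é takes one byte)
print(utf8_bytes)    # b'caf\xc3\xa9'  (é takes two bytes)

# Reading UTF-8 bytes as if they were Latin-1 gives garbled text.
garbled = utf8_bytes.decode("latin-1")
print(garbled)  # cafÃ©

# Going the other way, the Latin-1 bytes are not legal UTF-8 at all.
try:
    latin1_bytes.decode("utf-8")
    latin1_is_valid_utf8 = True
except UnicodeDecodeError:
    latin1_is_valid_utf8 = False
print(latin1_is_valid_utf8)  # False
```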