Monte Carlo integration has been called “Integration by Darts,” a clever pun on “integration by parts.” I ran across the phrase while looking at some slides by Brian Hayes, but apparently it’s been around a while. The explanation that Monte Carlo is “integration by darts” is fine as a 0th order explanation, but it can be misleading.

Introductory courses explain Monte Carlo integration as follows.

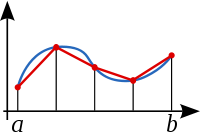

- Plot the function you want to integrate.

- Draw a box that contains the graph.

- Throw darts (random points) at the box.

- Count the proportion of darts that land between the graph and the horizontal axis.

- Estimate the area under the graph by multiplying the area of the box by the proportion above.
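
The steps above can be sketched in a few lines of Python. This is only an illustration of the textbook recipe: the function name `dart_integrate`, the choice of sin(x) on [0, π] as the integrand, and the box height of 1 are all assumptions made for the example.

```python
import math
import random

def dart_integrate(f, a, b, height, n_darts, seed=0):
    """Hit-or-miss estimate of the integral of f over [a, b].

    Assumes 0 <= f(x) <= height on [a, b], so that the box
    [a, b] x [0, height] contains the graph.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_darts):
        # Throw one dart uniformly at the box.
        x = rng.uniform(a, b)
        y = rng.uniform(0.0, height)
        # Count it if it lands between the graph and the horizontal axis.
        if y <= f(x):
            hits += 1
    box_area = (b - a) * height
    return box_area * hits / n_darts

estimate = dart_integrate(math.sin, 0.0, math.pi, 1.0, 100_000)
print(estimate)  # the exact value of the integral is 2
```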

In principle this is correct, but this is far from how Monte Carlo integration is usually done in practice.

For one thing, Monte Carlo integration is seldom used to integrate functions of one variable. Instead, it is mostly used on functions of many variables, maybe hundreds or thousands of variables. This is because more efficient methods exist for low-dimensional integrals, but very high-dimensional integrals can usually only be computed using Monte Carlo or some variation like quasi-Monte Carlo.

If you draw a box around your integrand, especially in high dimensions, it may be that nearly all your darts fall outside the region you’re interested in. For example, suppose you throw a billion darts and none land inside the volume determined by your integration problem. Then the point estimate for your integral is 0. Assuming the true value of the integral is positive, the relative error in your estimate is 100%. You’ll need a lot more than a billion darts to get an accurate estimate. But is this example realistic? Absolutely. Nearly all the volume of a high-dimensional cube is in the “corners” and so putting a box around your integrand is naive. (I’ll elaborate on this below. [1])
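
A small experiment makes the problem visible. The sketch below assumes, purely for illustration, that the region of interest is the unit ball sitting inside the cube from −1 to 1 in each dimension. The function name `fraction_inside_ball` and the dimensions tried are hypothetical choices.

```python
import random

def fraction_inside_ball(dim, n_darts, seed=0):
    """Fraction of uniform darts in the cube [-1, 1]^dim that land in the unit ball."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_darts):
        # Squared distance of a random point in the cube from the origin.
        r2 = sum(rng.uniform(-1.0, 1.0) ** 2 for _ in range(dim))
        if r2 <= 1.0:
            hits += 1
    return hits / n_darts

for dim in (2, 5, 10, 20):
    print(dim, fraction_inside_ball(dim, 20_000))
```

The hit rate falls off quickly as the dimension grows. By dimension 20 the ball fills only about 2.5 × 10⁻⁸ of the cube, so a run of this size will almost certainly record no hits at all.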

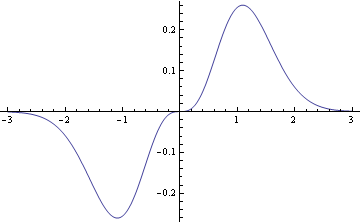

So how do you implement Monte Carlo integration in practice? The next step up in sophistication is to use “importance sampling.” [2] Conceptually you’re still throwing darts at a box, but not with a uniform distribution. You find a probability distribution that approximately matches your integrand, and throw darts according to that distribution. The better the fit, the more efficient the importance sampler. You could think of naive Monte Carlo as importance sampling with a uniform distribution as the importance sampler. It’s usually not hard to find an importance sampler much better than that. The importance sampler is so named because it concentrates more samples in the important regions.
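
Here is a minimal sketch comparing the two approaches on a made-up integrand, a narrow bump centered at 5 whose integral over the real line is 0.1 √(2π). Everything specific here is an assumption for illustration: the integrand, the interval [0, 10] for the uniform sampler (which misses only a negligible part of the bump), and the normal importance sampler with standard deviation 0.2. The importance sampler is deliberately wider than the bump, so its tails are heavier than the integrand's.

```python
import math
import random

SIGMA = 0.1  # width of the bump in the (hypothetical) integrand

def f(x):
    """Integrand: a narrow bump at 5; its integral is SIGMA * sqrt(2 * pi)."""
    return math.exp(-((x - 5.0) ** 2) / (2.0 * SIGMA ** 2))

def normal_pdf(x, mu, sd):
    """Density of a normal distribution with mean mu and standard deviation sd."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sd ** 2)) / (sd * math.sqrt(2.0 * math.pi))

def uniform_estimate(n, rng, a=0.0, b=10.0):
    """Naive Monte Carlo: average f over uniform samples on [a, b]."""
    total = sum(f(rng.uniform(a, b)) for _ in range(n))
    return (b - a) * total / n

def importance_estimate(n, rng, mu=5.0, sd=0.2):
    """Importance sampling: draw X from N(mu, sd^2) and average f(X) / g(X)."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(mu, sd)
        total += f(x) / normal_pdf(x, mu, sd)
    return total / n

rng = random.Random(0)
exact = SIGMA * math.sqrt(2.0 * math.pi)
print("exact     ", exact)
print("uniform   ", uniform_estimate(10_000, rng))
print("importance", importance_estimate(10_000, rng))
```

With the same number of samples, the importance sampling estimate is typically much closer to the exact value, because most uniform samples land where the integrand is essentially zero.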

Importance sampling isn’t the last word in Monte Carlo integration, but it’s a huge improvement over naive Monte Carlo.

***

[1] So what does it mean to say most of the volume of a high-dimensional cube is in the corners? Suppose you have an n-dimensional cube that runs from −1 to 1 in each dimension and you have a ball of radius 1 inside the cube. To make the example a little simpler, assume n is even, n = 2k. Then the volume of the cube is $2^n = 4^k$ and the volume of the ball is $\pi^k / k!$. If k = 1 (n = 2) then the ball (a disk in this case) takes up π/4 of the volume (area), about 79%. But when k = 100 (n = 200), the ball takes up $3.46 \times 10^{-169}$ of the volume of the cube. You could never generate enough random samples from the cube to ever hope to land a single point inside the ball.
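
The figures in this footnote are easy to check numerically. The sketch below works in logarithms so that the large powers and factorial do not overflow; the function name `ball_fraction` is just a label chosen for this example.

```python
import math

def ball_fraction(k):
    """Fraction of the cube [-1, 1]^n filled by the unit ball, for n = 2k.

    Computes (pi^k / k!) / 4^k via logarithms; lgamma(k + 1) is log(k!).
    """
    log_fraction = k * math.log(math.pi / 4.0) - math.lgamma(k + 1)
    return math.exp(log_fraction)

print(ball_fraction(1))    # pi/4, about 0.785
print(ball_fraction(100))  # about 3.46e-169
```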

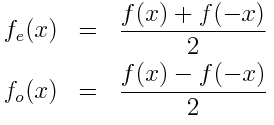

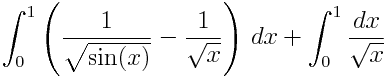

[2] In a nutshell, importance sampling replaces the problem of integrating f(x) with that of integrating (f(x) / g(x)) g(x) where g(x) is the importance sampler, a probability density. Then the integral of (f(x) / g(x)) g(x) is the expected value of (f(X) / g(X)) where X is a random variable with density given by the importance sampler. It’s often a good idea to use an importance sampler with slightly heavier tails than the original integrand.
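
Written out, the identity behind this footnote looks as follows. The sample size N and the draws X_1, …, X_N are notation introduced here, and the identity assumes g(x) > 0 wherever f(x) is nonzero.

```latex
\int f(x)\,dx
  = \int \frac{f(x)}{g(x)}\, g(x)\,dx
  = \operatorname{E}\!\left[\frac{f(X)}{g(X)}\right]
  \approx \frac{1}{N} \sum_{i=1}^{N} \frac{f(X_i)}{g(X_i)},
  \qquad X_1, \dots, X_N \sim g .
```

The last step is the Monte Carlo approximation: the expected value is replaced by an average over samples drawn from the importance sampler.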