Earlier this week I wrote about several ways to generalize trig functions. Since trig functions have addition theorems like

$$\sin(\theta \pm \varphi) = \sin\theta\cos\varphi \pm \cos\theta\sin\varphi$$

a natural question is whether generalized trig functions also have addition theorems.

Hyperbolic functions have well-known addition theorems analogous to the addition theorems above. This isn’t too surprising since circular and hyperbolic functions are fundamentally two sides of the same coin.
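As a quick numerical sanity check, here is a minimal sketch that tests the addition theorem for sinh and the relation sin(ix) = i sinh(x) that ties the circular and hyperbolic functions together. The function names and sample points are made up for illustration.

```python
import cmath
import math


def sinh_addition_gap(x, y):
    """Absolute difference between the two sides of
    sinh(x + y) = sinh(x) cosh(y) + cosh(x) sinh(y)."""
    lhs = math.sinh(x + y)
    rhs = math.sinh(x) * math.cosh(y) + math.cosh(x) * math.sinh(y)
    return abs(lhs - rhs)


def circular_hyperbolic_gap(x):
    """Absolute difference between sin(ix) and i sinh(x)."""
    return abs(cmath.sin(1j * x) - 1j * math.sinh(x))


x, y = 0.7, 1.3  # arbitrary sample points
print(sinh_addition_gap(x, y))      # should be at rounding-error level
print(circular_hyperbolic_gap(x))   # should be at rounding-error level
```

Both gaps should come out on the order of floating point rounding error.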

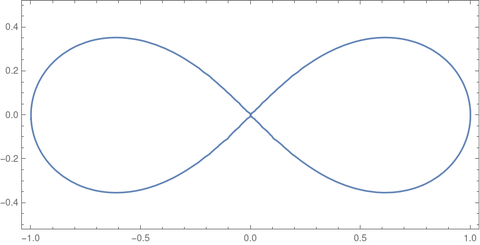

I mentioned that the lemniscate functions satisfy many identities but didn’t give any examples. Here are addition theorems satisfied by the lemniscate sine sl and the lemniscate cosine cl.

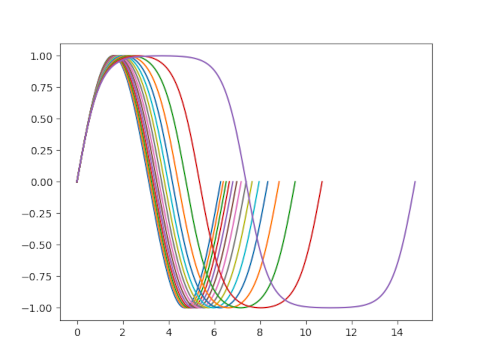
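The addition theorems can be checked numerically without any special function library. The sketch below assumes the standard forms sl(x + y) = (sl x cl y + cl x sl y) / (1 − sl x cl x sl y cl y) and cl(x + y) = (cl x cl y − sl x sl y) / (1 + sl x cl x sl y cl y), which I take to be what the image shows. It computes sl and cl by integrating the differential equations sl′ = (1 + sl²) cl and cl′ = −(1 + cl²) sl from sl(0) = 0, cl(0) = 1. That is a standard property of these functions, not something stated above, and the function names are made up for illustration.

```python
def lemniscate(z, steps=2000):
    """Return (sl(z), cl(z)) by integrating
        sl' = (1 + sl^2) cl,   cl' = -(1 + cl^2) sl
    from sl(0) = 0, cl(0) = 1 with classical fourth-order Runge-Kutta."""
    def f(s, c):
        return (1 + s * s) * c, -(1 + c * c) * s

    h = z / steps
    s, c = 0.0, 1.0
    for _ in range(steps):
        k1s, k1c = f(s, c)
        k2s, k2c = f(s + 0.5 * h * k1s, c + 0.5 * h * k1c)
        k3s, k3c = f(s + 0.5 * h * k2s, c + 0.5 * h * k2c)
        k4s, k4c = f(s + h * k3s, c + h * k3c)
        s += h * (k1s + 2 * k2s + 2 * k3s + k4s) / 6
        c += h * (k1c + 2 * k2c + 2 * k3c + k4c) / 6
    return s, c


def addition_gaps(x, y):
    """Absolute errors in the assumed addition theorems for sl and cl."""
    sx, cx = lemniscate(x)
    sy, cy = lemniscate(y)
    s_sum, c_sum = lemniscate(x + y)
    p = sx * cx * sy * cy
    sl_rhs = (sx * cy + cx * sy) / (1 - p)
    cl_rhs = (cx * cy - sx * sy) / (1 + p)
    return abs(s_sum - sl_rhs), abs(c_sum - cl_rhs)


print(addition_gaps(0.3, 0.5))  # both entries should be tiny
```

The ODE approach is chosen only to keep the example self-contained; a library implementation of the Jacobi elliptic functions would serve equally well.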

Addition theorems for sin_p and friends are harder to come by. In [1] the authors say “no addition formula for sin_p is known to us” but they did come up with a double-argument theorem for a special case of sin_{p,q}.

There is a deep reason why the lemniscate and hyperbolic functions have addition theorems and sin_p does not, namely a theorem of Weierstrass. This theorem says that a meromorphic function has an algebraic addition theorem if and only if it is an elliptic function of z, a rational function of z, or a rational function of exp(λz).

The lemniscate functions have addition theorems because they are elliptic functions. Circular and hyperbolic functions have addition theorems because they are rational functions of exp(λz), with λ = i for the circular functions and λ = 1 for the hyperbolic functions. But sin_p for general p does not have an algebraic addition theorem because it is not elliptic, rational, or a rational function of exp(λz). It’s possible that sin_p has some sort of addition theorem that falls outside of Weierstrass’ theorem, i.e. an addition theorem using a non-algebraic function.
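To make the exponential case concrete, here is how sine and hyperbolic sine look as rational functions of w = exp(λz). The symbol w is introduced here only for illustration.

$$
\begin{aligned}
\sin z &= \frac{e^{iz} - e^{-iz}}{2i} = \frac{w^2 - 1}{2iw}, & w &= e^{iz} \quad (\lambda = i), \\
\sinh z &= \frac{e^{z} - e^{-z}}{2} = \frac{w^2 - 1}{2w}, & w &= e^{z} \quad (\lambda = 1).
\end{aligned}
$$

Cosine and hyperbolic cosine work the same way with w² + 1 in the numerator.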

You may have noticed that the addition rule for sine involves not only sine but also cosine. But using the Pythagorean identity we can turn an addition rule involving sines and cosines into one only involving sines. Similarly, we can use a Pythagorean-like theorem to turn the identities involving sl and cl into identities involving only one of these functions.
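Here is a sketch of both eliminations. For sine, restrict x and y to [−π/2, π/2] so that the cosines are nonnegative and the square roots carry the right sign. For the lemniscate functions, I am assuming the "Pythagorean-like theorem" is the standard identity sl² + cl² + sl² cl² = 1, which can be solved for cl² in terms of sl².

$$
\begin{aligned}
\sin(x + y) &= \sin x \,\sqrt{1 - \sin^2 y} + \sin y \,\sqrt{1 - \sin^2 x}, \\
\operatorname{sl}^2 z + \operatorname{cl}^2 z + \operatorname{sl}^2 z \,\operatorname{cl}^2 z = 1
\quad &\Longrightarrow \quad
\operatorname{cl}^2 z = \frac{1 - \operatorname{sl}^2 z}{1 + \operatorname{sl}^2 z}.
\end{aligned}
$$

In both cases the result is still an algebraic relation among f(x), f(y), and f(x + y), which is what makes it an algebraic addition theorem.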

Elliptic functions satisfy addition theorems, and functions satisfying addition theorems are elliptic (or the other two cases of Weierstrass’ theorem). Rational functions of z and rational functions of exp(λz) are easy to spot, so if you see an unfamiliar function that has an algebraic addition theorem, you know it’s an elliptic function. If you saw the addition theorems for sl and cl before knowing what these functions are, you could say to yourself that these are probably elliptic functions.

You may see other theorems called addition theorems. For example, the gamma function satisfies an addition theorem, although it is not elliptic or rational. But this is a restricted kind of addition theorem: it applies to x + 1 and not to general x + y. Also, the Bessel functions have addition theorems, but these theorems involve infinite sums; they are not algebraic addition theorems.
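For reference, these are the standard forms I take those two statements to refer to: the recurrence for the gamma function and Neumann's addition theorem for Bessel functions of integer order n.

$$
\begin{aligned}
\Gamma(x + 1) &= x\,\Gamma(x), \\
J_n(x + y) &= \sum_{k=-\infty}^{\infty} J_k(x)\, J_{n-k}(y).
\end{aligned}
$$

The first relates Γ at x + 1 only to Γ at x, and the second needs infinitely many functions J_k on the right side, so neither is an algebraic relation among f(x), f(y), and f(x + y).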

[1] David E. Edmunds, Petr Gurka, Jan Lang. Properties of generalized trigonometric functions. Journal of Approximation Theory 164 (2012) 47–56.