Suppose you take two numbers, a and b, and repeatedly take their arithmetic mean and their geometric mean. That is, suppose we set

a0 = a

b0 = b

then

a1 = (a0 + b0)/2

b1 = √(a0 b0)

and repeat this process, each new a becoming the arithmetic mean of the previous a and b, and each new b becoming the geometric mean of the previous a and b. The sequence of a‘s and b‘s converge to a common limit, unsurprisingly called the arithmetic-geometric mean of a and b, written AGM(a, b).

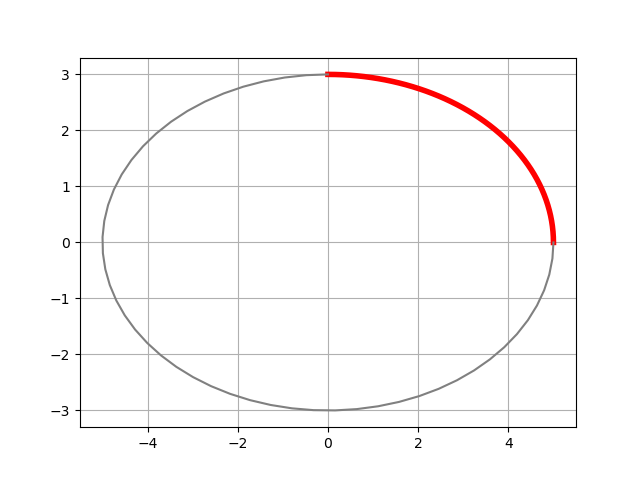

Gauss found that AGM(a, b) could be expressed as an elliptic integral K:

AGM(a, b) = π(a + b) / 4K((a–b)/(a+b)).

You might read that as saying “OK, if I ever need to compute the AGM, I can do it by calling a function that evaluates K.” That’s true, but in practice it’s backward! The sequence defining the AGM converges very quickly, and so it is used to compute K.

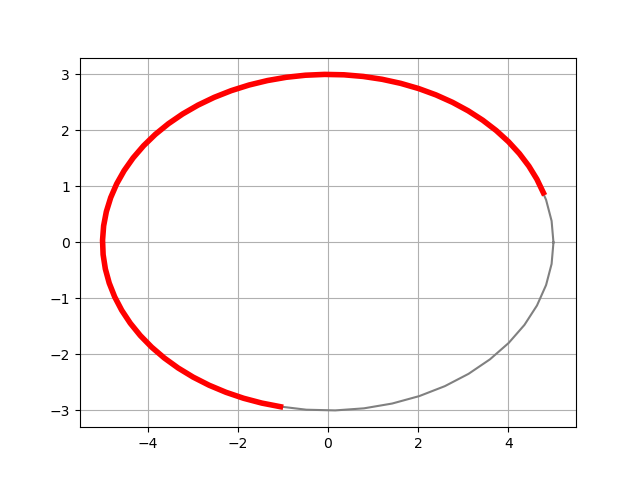

Because the AGM converges so quickly, you may wonder what else you might be able to compute using a variation on the AGM. Gauss knew you could compute inverse cosine by altering the AGM. This idea was later rediscovered and developed more thoroughly by C. W. Borchardt and is now known as Borchardt’s algorithm. This algorithm iterates the following.

a1 = (a0 + b0)/2

b1 = √(a1 b0)

It’s a small, slightly asymmetric variant of the AGM. Instead of taking the geometric mean of the previous a and b, you take the geometric mean of the new a and the previous b.

I am certain someone writing a program to compute the AGM has implemented Borchardt’s algorithm by mistake. It’s what you get when you update a and b sequentially rather than simultaneously. But if you want to compute arccos, it’s a feature, not a bug.

Borchardt’s algorithm computes arccos(a/b) as √(b² – a²) divided by the limit of his iteration. Here it is in Python.

def arccos(a, b):

"Compute the inverse cosine of a/b."

assert(0 <= a < b)

L = (b**2 - a**2)**0.5

while abs(a - b) > 1e-15:

a = 0.5*(a + b)

b = (a*b)**0.5

return L/a

We can compare our implementation of inverse cosine with the one in NumPy.

import numpy as np

a, b = 2, 3

print( arccos(a, b) )

print( np.arccos(a/b) )

Both agree to 15 figures as we’d hope.

A small variation on the function above can compute inverse hyperbolic cosine.

def arccosh(a, b):

"Compute the inverse hyperbolic cosine of a/b."

assert(a > b > 0)

L = (a**2 - b**2)**0.5

while abs(a - b) > 1e-15:

a = 0.5*(a + b)

b = (a*b)**0.5

return L/a

Again we can compare our implementation to the one in NumPy

a, b = 7, 4

print( arccosh(a, b) )

print( np.arccosh(a/b) )

and again they agree to 15 figures.

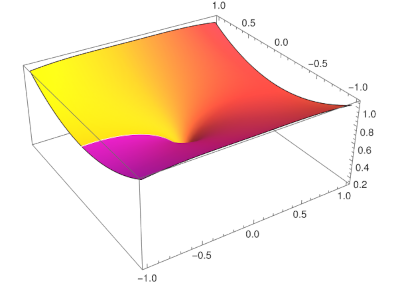

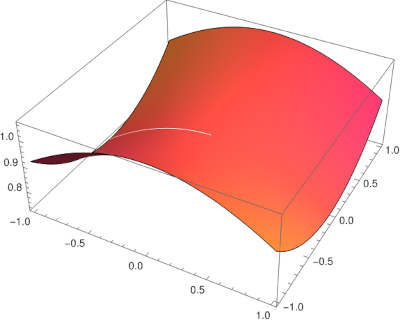

In an earlier post I wrote about Bille Carlson’s work on elliptic integrals, finding a more symmetric basis for these integrals. A consequence of his work, and possibly a motivation for his work, was to be able to extend Borchardt’s algorithm.” He said in [1]

The advantage of using explicitly symmetric functions is illustrated by generalizing Borchardt’s iterative algorithm for computing an inverse cosine to an algorithm for computing an inverse elliptic cosine.

I doubt Borchardt’s algorithm is used to compute arccos except in special situations. Maybe it’s used for extended precision. If you had an extended precision library without an arccos function built in, you could implement your own as above.

The practical value of Borschardt’s algorithm today is more likely in evaluating elliptic integrals. It also alludes to a deep theoretical connection between AGM-like iterations and elliptic-like integrals.

Related posts

[1] B. C. Carlson. Hidden Symmetries of Special Function. SIAM Review , Jul., 1970, Vol. 12, No. 3 (Jul., 1970), pp. 332‐345