It is sometimes said that neural networks are “just” logistic regression. (Remember neural networks? LLMs are neural networks, but nobody talks about neural networks anymore.) In some sense a neural network is logistic regression with more parameters, a lot more parameters, but more is different. New phenomena emerge at scale that could not have been anticipated at a smaller scale.

Logistic regression can work surprisingly well on small data sets. One of my clients filed a patent on a simple logistic regression model I created for them. You can’t patent logistic regression—the idea goes back to the 1840s—but you can patent its application to a particular problem. Or at least you can try; I don’t know whether the patent was ever granted.

Some of the clinical trial models that we developed at MD Anderson Cancer Center were built on Bayesian logistic regression. These methods were used to run early phase clinical trials, with dozens of patients. Far from “big data.” Because we had modest amounts of data, our models could not be very complicated, though we tried. The idea was that informative priors would let you fit more parameters than would otherwise be possible. That idea was partially correct, though it leads to a sensitive dependence on priors.

When you don’t have enough data, additional parameters do more harm than good, at least in the classical setting. Over-parameterization is bad in classical models, though over-parameterization can be good for neural networks. So for a small data set you commonly have only two parameters. With a larger data set you might have three or four.

There is a rule of thumb that you need at least 10 events per parameter (EVP) [1]. For example, if you’re looking at an outcome that happens say 20% of the time, you need about 50 data points per parameter. If you’re analyzing a clinical trial with 200 patients, you could fit a four-parameter model. But those four parameters had better pull their weight, and so you typically compute some sort of information criterion (AIC, BIC, DIC, etc.) to judge whether the data justify a particular set of parameters. Statisticians agonize over each parameter because it really matters.
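Here is a minimal sketch of the arithmetic behind the rule of thumb. The function names and the default of 10 events per parameter are just illustrative choices for this post, not something taken from the cited paper.

```python
def max_parameters(n_samples, event_rate, events_per_parameter=10):
    """Largest model size the events-per-parameter rule of thumb would allow."""
    # Expected number of events, rounded to a whole count.
    expected_events = round(n_samples * event_rate)
    return expected_events // events_per_parameter


def samples_per_parameter(event_rate, events_per_parameter=10):
    """Data points needed per parameter when events occur at the given rate."""
    return events_per_parameter / event_rate


# The example in the text: an outcome seen 20% of the time, 200 patients.
print(samples_per_parameter(0.20))  # about 50 data points per parameter
print(max_parameters(200, 0.20))    # 4 parameters
```

This only gives an upper bound on model size. It says nothing about whether each parameter earns its place, which is what the information criteria are for.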

Imagine working in the world of modest-sized data sets, carefully considering one parameter at a time for inclusion in a model, and hearing about people fitting models with millions, and later billions, of parameters. It just sounds insane. And sometimes it is insane [2]. And yet it can work. Not automatically; developing large models is still a bit of a black art. But large models can do amazing things.

How do LLMs compare to logistic regression as far as the ratio of data points to parameters? Various scaling laws have been suggested. These laws have some basis in theory, but they’re largely empirical, not derived from first principles. “Open” AI no longer shares stats on the size of their training data or the number of parameters they use, but other models do, and as a very rough rule of thumb, models are trained using around 100 tokens per parameter, which is not very different from the EVP rule of thumb for logistic regression.

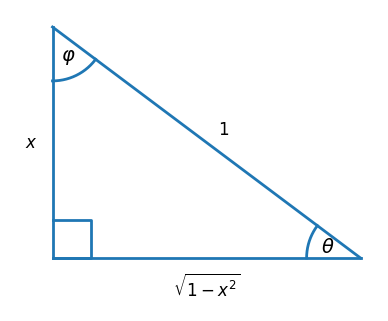

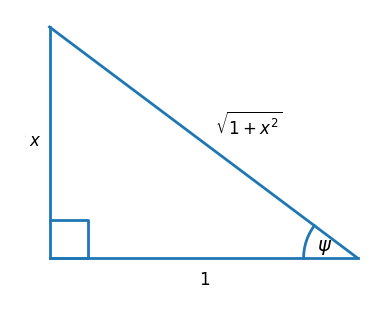

Simply counting tokens and parameters doesn’t tell the full story. In a logistic regression model, data are typically binary variables, or maybe categorical variables coming from a small number of possibilities. Parameters are floating point values, typically 64 bits, but maybe the parameter values are important to three decimal places or 10 bits. In the example above, 200 samples of 4 binary variables determine 4 ten-bit parameters, so 20 bits of data for every bit of parameter. If the inputs were 10-bit numbers, there would be 200 bits of data per parameter bit.
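The bit counting for the logistic regression example can be spelled out as follows. This is a rough sketch; the function name and argument names are made up for illustration, and the 10-bit figure comes from treating three decimal places as about log2(1000) bits.

```python
import math


def data_bits_per_parameter_bit(n_samples, n_inputs, bits_per_input,
                                n_params, bits_per_param):
    """Ratio of total bits of input data to total bits of meaningful parameter."""
    data_bits = n_samples * n_inputs * bits_per_input
    parameter_bits = n_params * bits_per_param
    return data_bits / parameter_bits


# Three decimal places is a little under 10 bits.
print(math.log2(1000))

# 200 samples of 4 binary inputs, 4 parameters at 10 bits each.
binary_ratio = data_bits_per_parameter_bit(200, 4, 1, 4, 10)
print(binary_ratio)   # 800 data bits / 40 parameter bits

# Same model, but each input is a 10-bit number.
ten_bit_ratio = data_bits_per_parameter_bit(200, 4, 10, 4, 10)
print(ten_bit_ratio)  # 8000 data bits / 40 parameter bits
```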

When training an LLM, a token is typically a 32-bit number, not a binary variable. And a parameter might be a 32-bit number, but quantized to 8 bits for inference [3]. If a model uses 100 tokens per parameter, that corresponds to 400 bits of training data per inference parameter bit.
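The same back-of-the-envelope calculation for an LLM, as a sketch. The function name is hypothetical, and the inputs are the rough figures used in the text (100 tokens per parameter, 32-bit tokens), not measurements of any particular model.

```python
def llm_data_bits_per_parameter_bit(tokens_per_param, bits_per_token,
                                    bits_per_param):
    """Bits of training data per bit of parameter."""
    return tokens_per_param * bits_per_token / bits_per_param


# 100 tokens per parameter, 32-bit tokens, parameters quantized to 8 bits.
ratio_8bit = llm_data_bits_per_parameter_bit(100, 32, 8)
print(ratio_8bit)

# The ratio drops if you count parameters at higher precision.
ratio_16bit = llm_data_bits_per_parameter_bit(100, 32, 16)
ratio_32bit = llm_data_bits_per_parameter_bit(100, 32, 32)
print(ratio_16bit, ratio_32bit)
```

The answer moves by a factor of four depending on which parameter precision you count, so these ratios are only good to an order of magnitude.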

In short, the ratio of data bits to parameter bits is roughly similar between logistic regression and LLMs. I find that surprising, especially because there’s a sort of no man’s land between a handful of parameters and billions of parameters [2].

[1] P Peduzzi, J Concato, E Kemper, T R Holford, A R Feinstein. A simulation study of the number of events per variable in logistic regression analysis. Journal of Clinical Epidemiology 1996 Dec; 49(12):1373-9. doi: 10.1016/s0895-4356(96)00236-3.

[2] A lot of times neural networks don’t scale down to the small data regime well at all. It took a lot of audacity to believe that models would perform disproportionately better with more training data. Classical statistics gives you good reason to expect diminishing returns, not increasing returns.

[3] There has been a lot of work lately to find low-precision parameters directly. So you might find 16-bit parameters directly rather than finding 32-bit parameters and then quantizing to 16 bits.