I find it amusing when someone calls something “just an approximation,” because their “exact” answer is invariably “just an approximation” from someone else’s perspective. When someone says “mere approximation,” they often mean “that’s not the kind of approximation my colleagues and I usually make” or “that’s not an approximation I understand.”

For example, I once audited a class in celestial mechanics. I was surprised when the professor referred disdainfully to some analytical technique as a “mere approximation,” since his idea of “exact” extended only to Newtonian physics. I don’t recall the details, but it’s possible that the disreputable approximation introduced no more error than the decision to consider only point masses or to ignore relativity. In any case, the approximation violated the rules of the game.

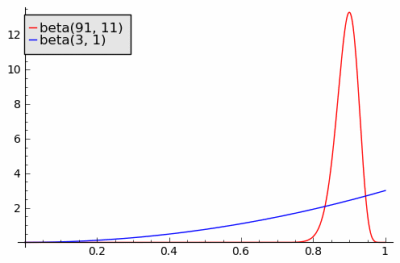

Statisticians can get awfully uptight about numerical approximations. They’ll wring their hands over a numerical routine that’s only good to five or six significant figures but not even blush when they approximate some quantity by averaging a few hundred random samples. Or they’ll make a dozen gross simplifications in modeling and then squint over whether a p-value is 0.04 or 0.06.
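To put rough numbers on that contrast, here is a minimal sketch that estimates the integral of exp(-x^2) over [0, 1] two ways: with composite Simpson’s rule on 10 subintervals, and by averaging 300 uniform random samples. The integrand, the number of subintervals, the sample size, and the seed are all arbitrary choices for illustration, and `simpson` and `monte_carlo` are hypothetical helpers written for this example, not functions from any particular library.

```python
import math
import random


def f(x: float) -> float:
    # Integrand chosen only for illustration.
    return math.exp(-x * x)


# Reference value: integral of exp(-x^2) over [0, 1] = (sqrt(pi) / 2) * erf(1)
EXACT = math.sqrt(math.pi) / 2 * math.erf(1.0)


def simpson(g, a: float, b: float, n: int) -> float:
    """Composite Simpson's rule with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        # Interior weights alternate 4, 2, 4, ..., 4
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def monte_carlo(g, n: int, seed: int = 1) -> tuple[float, float]:
    """Average g over n uniform samples on [0, 1]; return (estimate, standard error)."""
    rng = random.Random(seed)
    values = [g(rng.random()) for _ in range(n)]
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)  # sample variance
    return mean, math.sqrt(var / n)


quad = simpson(f, 0.0, 1.0, 10)
mc, se = monte_carlo(f, 300)

print(f"exact       {EXACT:.8f}")
print(f"Simpson     {quad:.8f}  error {abs(quad - EXACT):.1e}")
print(f"Monte Carlo {mc:.8f}  std. error {se:.1e}")
```

The standard error bound for Simpson’s rule here is below 10^-5, so the “unreliable” numerical routine is good to about five or six significant figures. The Monte Carlo average has a standard error on the order of 10^-2, so only one or two figures can be trusted, and because that error shrinks like 1/sqrt(n), each additional significant figure costs roughly 100 times as many samples.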

The problem is not accuracy but familiarity. We all like to draw a circle around our approximation of reality and distrust anything outside that circle. After a while we forget that our approximations are even approximations.

This applies to professions as well as individuals. All is well until two professional cultures clash. Then one tribe will be horrified by an approximation another tribe takes for granted. These conflicts can be a great reminder of the difference between trying to understand reality and playing by the rules of a professional game.