Unix philosophy says a program should do only one thing and do it well. Solve problems by sewing together a sequence of small, specialized programs. Doug McIlroy summarized the Unix philosophy as follows.

This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface.

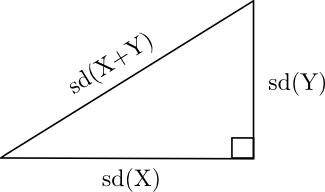

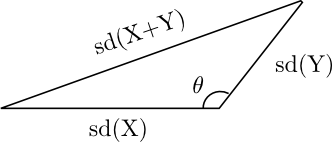

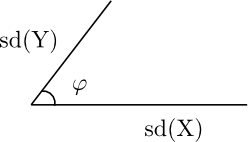

This design philosophy is closely related to “orthogonality.” Programs should have independent features just as perpendicular (orthogonal) lines run in independent directions.

In practice, programs gain overlapping features over time. A set of programs may start out orthogonal but lose their uniqueness as they evolve. I used to think that the departure from orthogonality was due to a loss of vision or a loss of discipline, but now I have a more charitable explanation.

The hard part isn’t writing little programs that do one thing well. The hard part is combining little programs to solve bigger problems. In McIlroy’s summary, the hard part is his second sentence: Write programs to work together.

Piping the output of a simple shell command to another shell command is easy. But as tasks become more complex, more and more work goes into preparing the output of one program to be the input of the next program. Users want to be able to do more in each program to avoid having to switch to another program to get their work done.

An example of the opposite of the Unix philosophy would be the Microsoft Office suite. There’s a great deal of redundancy between the programs. At a high level, Word is for word processing, Excel is the spreadsheet, Access is the database etc. But Excel has database features, Word has spreadsheet features, etc. You could argue that this is a terrible mess and that the suite of programs should be more orthogonal. But someone who spends most of her day in Excel doesn’t want to switch over to Access to do a query or open Word to format text. Office users are grateful for the redundancy.

Software applications do things they’re not good at for the same reason companies do things they’re not good at: to avoid transaction costs. Companies often pay employees more than they would have to pay contractors for the same work. Why? Because the total cost includes more than the money paid to contractors. It also includes the cost of evaluating vendors, writing contracts, etc. Having employees reduces transaction costs.

When you have to switch software applications, that’s a transaction cost. It may be less effort to stay inside one application and use it for something it does poorly than to switch to another tool. It may be less effort to learn how to do something awkwardly in a familiar application than to learn how to do it well in an unfamiliar application.

Companies expand or contract until they reach an equilibrium between bureaucracy costs and transaction costs. Technology can cause the equilibrium point to change over time. Decades ago it was efficient for organizations to become very large. Now transaction costs have lowered and organizations outsource more work.

Software applications may follow the pattern of corporations. The desire to get more work done in a single application leads to bloated applications, just as the desire to avoid transaction costs leads to bloated bureaucracies. But bloated bureaucracies face competition from lean start-ups and eventually shed some of their bloat or die. Bloated software may face similar competition from leaner applications. There are some signs that consumers are starting to appreciate software and devices that do less and do it well.