For small angles, sin(θ) is approximately θ. This post takes a close look at this familiar approximation.

I was confused when I first heard that sin(θ) ≈ θ for small θ. My thought was “Of course they’re approximately equal. All small numbers are approximately equal to each other.” I also was confused by the footnote “The angle θ must be measured in radians.” If the approximation is just saying that small angles have small sines, it doesn’t matter whether you measure in radians or degrees. I missed the point, as I imagine most students do. I run into people who have completed math or science degrees without understanding this tidbit from their freshman year.

The point is that the error in the approximation sin(θ) ≈ θ is small, even relative to the size of θ. If θ is small, the error in the approximation sin(θ) ≈ θ is really, really small. I use the phrase “really, really” in a precise sense; I’m not just talking like an eight-year-old child. If θ is small, θ² is really small and θ³ is really, really small.

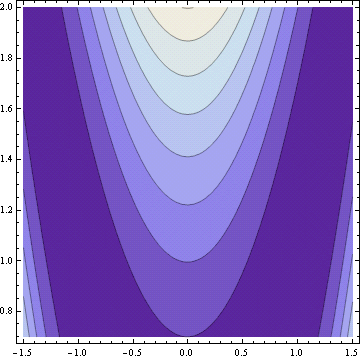

For small values of θ, say |θ| < 1, the difference between θ and sin(θ) is very nearly θ³/6, so the absolute error is really, really small. The relative error is about θ²/6, which is really small: dividing the absolute error by sin(θ), which is roughly θ, removes one “really.”
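A quick numerical check makes these estimates concrete. The following is a minimal sketch using only Python's standard `math` module; the function name `sine_small_angle_errors` and the sample angles are made up for illustration.

```python
import math

def sine_small_angle_errors(theta):
    """Return (absolute error, relative error) of the approximation sin(theta) ~ theta."""
    exact = math.sin(theta)
    abs_err = abs(theta - exact)
    rel_err = abs_err / abs(exact)
    return abs_err, rel_err

# Compare the measured errors with the estimates theta^3/6 and theta^2/6.
for theta in (0.5, 0.1, 0.01):
    abs_err, rel_err = sine_small_angle_errors(theta)
    print(f"theta={theta}: "
          f"abs={abs_err:.3e} (theta^3/6={theta**3 / 6:.3e}), "
          f"rel={rel_err:.3e} (theta^2/6={theta**2 / 6:.3e})")
```

Each time θ shrinks by a factor of 10, the absolute error should shrink by roughly a factor of 1000 and the relative error by roughly a factor of 100.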

The approximation sin(θ) ≈ θ comes from a Taylor series, but there’s more going on than that. For example, the approximation log(1 + x) ≈ x also comes from a Taylor series, but that approximation is not nearly as accurate.

The Taylor series for log(1 + x) is

x − x²/2 + x³/3 − …

This means that for small x, log(1 + x) is approximately x, and the error in the approximation is roughly x²/2. So if x is small, the absolute error in the approximation is really small and the relative error is small. Notice this is one less “really” than the analogous statement about sines.
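To see the missing “really” numerically, here is a small sketch that puts the two approximations side by side at the same small argument. The helper name `approximation_errors` and the test value 0.01 are illustrative choices; `math.log1p` is used because it computes log(1 + x) accurately for small x.

```python
import math

def approximation_errors(approx, exact):
    """Return (absolute error, relative error) of approx as an estimate of exact."""
    abs_err = abs(approx - exact)
    return abs_err, abs_err / abs(exact)

x = 0.01
log_abs, log_rel = approximation_errors(x, math.log1p(x))  # log(1 + x) ~ x
sin_abs, sin_rel = approximation_errors(x, math.sin(x))    # sin(x) ~ x

print(f"log: abs={log_abs:.3e} (x^2/2={x**2 / 2:.3e}), rel={log_rel:.3e}")
print(f"sin: abs={sin_abs:.3e} (x^3/6={x**3 / 6:.3e}), rel={sin_rel:.3e}")
```

At this argument the log approximation's errors should come out a couple of orders of magnitude larger than the sine approximation's, in line with the extra factor of x.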

The reason the sine approximation is one “really” better than the log approximation is that sine is an odd function, so its Taylor series has only odd terms. The error is approximately the first term left out. The series for sin(θ) is

θ − θ³/3! + θ⁵/5! − …

so sin(θ) − θ is on the order of θ³, not just θ².

We can say even more. Not only is the error in sin(θ) ≈ θ approximately θ³/6, it is in fact bounded by θ³/6 for small, positive θ. This comes from the alternating series theorem. When you make an approximation from an alternating Taylor series, the error is bounded by the first term you leave out. The argument has to be small enough that the terms of the series decrease in absolute value. For example, if you stick θ = 1 into the series for sine, each term of the series is smaller than the previous one. But if you stick in θ = 3, the terms get larger before they get smaller: the second term, 3³/6 = 4.5, is bigger than the first. In general the second term exceeds the first whenever θ² > 6. So the precise meaning of “small enough” is small enough that the terms of the alternating series decrease in absolute value.
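The condition on the terms is easy to inspect directly. The sketch below lists the absolute values of the first few terms of the sine series and checks whether they decrease; θ = 1 and θ = 3 are illustrative inputs, the function names are invented for this example, and only the first `n_terms` terms are examined. It also spot-checks the bound θ³/6 at a few small positive angles.

```python
import math

def sine_series_terms(theta, n_terms=5):
    """Absolute values of the first n_terms terms of the Taylor series for sin(theta)."""
    return [theta ** (2 * k + 1) / math.factorial(2 * k + 1) for k in range(n_terms)]

def terms_decrease(terms):
    """True if every term is strictly smaller than the one before it."""
    return all(later < earlier for earlier, later in zip(terms, terms[1:]))

for theta in (1.0, 3.0):
    terms = sine_series_terms(theta)
    print(theta, [round(t, 4) for t in terms], "decreasing:", terms_decrease(terms))

# Spot check: the error of sin(theta) ~ theta stays within the first omitted term.
for theta in (0.1, 0.5, 1.0):
    error = abs(math.sin(theta) - theta)
    print(f"theta={theta}: error={error:.6f}, bound={theta**3 / 6:.6f}")
```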

The series for log(1 + x) also alternates when x is positive, so the error estimate x²/2 is also an upper bound on the error in that case. Not all series are alternating series. But when we do have an alternating series, we get a bonus as far as being able to control the error in approximations.

It’s also true that for small θ, cos(θ) is approximately 1. At first this may seem analogous to saying that for small θ, sin(θ) is approximately 0. While both are true, the former is much better.

The series for cosine is

1 − θ²/2! + θ⁴/4! − …

and so the error in approximating cos(θ) with 1 is approximately θ²/2. In fact, since the series alternates, the error is bounded by θ²/2 if θ is small enough. And in this case the relative error is approximately equal to the absolute error, because the quantity being approximated is close to 1. This says that for small θ, the approximation cos(θ) ≈ 1 is really good. By contrast, saying that sin(θ) ≈ 0 for small θ is a bad approximation: the absolute error is approximately θ and the relative error is 100%. Rounding small values of θ down to 0 produces a very good approximation in one context and a poor approximation in another context.
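The contrast between the two roundings shows up clearly in numbers. This is a minimal sketch with a hypothetical helper `approximation_errors` and arbitrarily chosen small angles; it compares cos(θ) ≈ 1 with sin(θ) ≈ 0.

```python
import math

def approximation_errors(approx, exact):
    """Return (absolute error, relative error) of approx as an estimate of exact."""
    abs_err = abs(approx - exact)
    return abs_err, abs_err / abs(exact)

for theta in (0.1, 0.01):
    cos_abs, cos_rel = approximation_errors(1.0, math.cos(theta))
    sin_abs, sin_rel = approximation_errors(0.0, math.sin(theta))
    print(f"theta={theta}: cos ~ 1 -> abs={cos_abs:.3e}, rel={cos_rel:.3e} "
          f"(theta^2/2={theta**2 / 2:.3e}); "
          f"sin ~ 0 -> abs={sin_abs:.3e}, rel={sin_rel:.0%}")
```

The relative error of sin(θ) ≈ 0 is 100% no matter how small θ gets, while both errors of cos(θ) ≈ 1 shrink like θ².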
