This post is a side quest in the series on navigating by the stars. It expands on a footnote in the previous post.

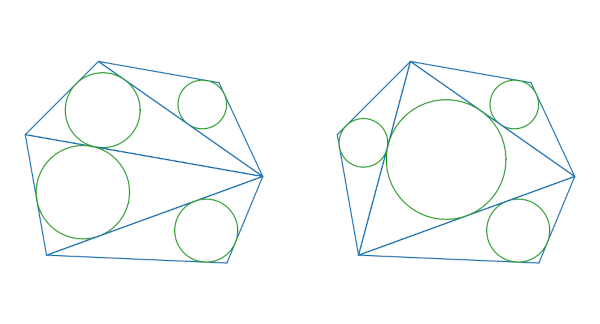

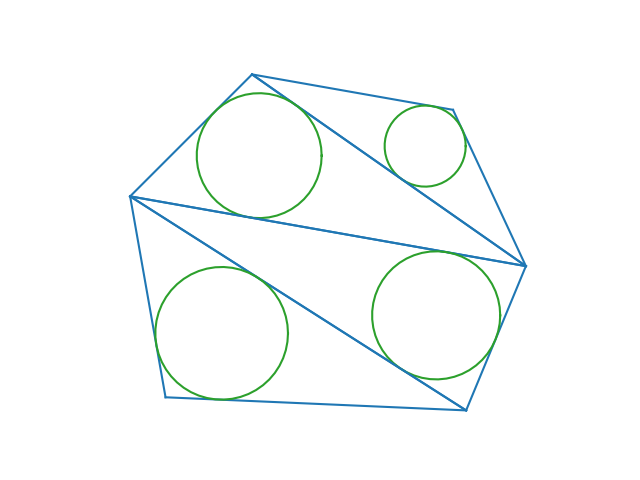

There are six pieces of information associated with a spherical triangle: three sides and three angles. I said in the previous post that given three out of these six quantities you could solve for the other three. Then I dropped a footnote saying that sometimes the missing quantities are uniquely determined, but sometimes there are two solutions and you need more data to pick one.

Todhunter’s textbook on spherical trig gives a thorough account of how to solve spherical triangles under all possible cases. The first edition of the book came out in 1859. A group of volunteers typeset the book in TeX. Project Gutenberg hosts the PDF version of the book and the TeX source.

I don’t want to duplicate Todhunter’s work here. Instead, I want to summarize when solutions are or are not unique, and make comparisons with plane triangles along the way.

SSS and AAA

The easiest cases to describe are those where you’re given all three sides or all three angles. Given three sides of a spherical triangle (SSS), you can solve for the angles, as with a plane triangle. Given three angles (AAA), you can also solve for the sides of a spherical triangle. That is not true of a plane triangle: three angles fix its shape but not its size, since every similar triangle has the same angles.
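Both computations come down to the spherical law of cosines: the version for sides handles SSS, and its dual, the law of cosines for angles, handles AAA. Here’s a minimal Python sketch, assuming a unit sphere, sides and angles in radians, and inputs that actually form a triangle. The function names are hypothetical, chosen for illustration.

```python
import math

def _clamped_acos(x):
    # Guard against rounding pushing a cosine slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, x)))

def angles_from_sides(a, b, c):
    """SSS: return angles (A, B, C) opposite sides (a, b, c), in radians."""
    def angle(opp, s1, s2):
        # Law of cosines for sides: cos(opp) = cos s1 cos s2 + sin s1 sin s2 cos(angle)
        return _clamped_acos((math.cos(opp) - math.cos(s1) * math.cos(s2))
                             / (math.sin(s1) * math.sin(s2)))
    return angle(a, b, c), angle(b, c, a), angle(c, a, b)

def sides_from_angles(A, B, C):
    """AAA: return sides (a, b, c) opposite angles (A, B, C), in radians."""
    def side(opp, t1, t2):
        # Law of cosines for angles: cos(opp) = -cos t1 cos t2 + sin t1 sin t2 cos(side)
        return _clamped_acos((math.cos(opp) + math.cos(t1) * math.cos(t2))
                             / (math.sin(t1) * math.sin(t2)))
    return side(A, B, C), side(B, C, A), side(C, A, B)

# Octant triangle: three quarter-circle sides, so three right angles.
print(angles_from_sides(math.pi / 2, math.pi / 2, math.pi / 2))

# Round trip: SSS gives the angles, AAA should give back the sides (up to rounding).
print(sides_from_angles(*angles_from_sides(0.8, 1.1, 1.3)))
```

The first line should print three values within rounding of π/2, and the second should print values very close to (0.8, 1.1, 1.3). Nothing like the round trip is possible in the plane, where the angles alone lose the scale.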

SAS and SSA

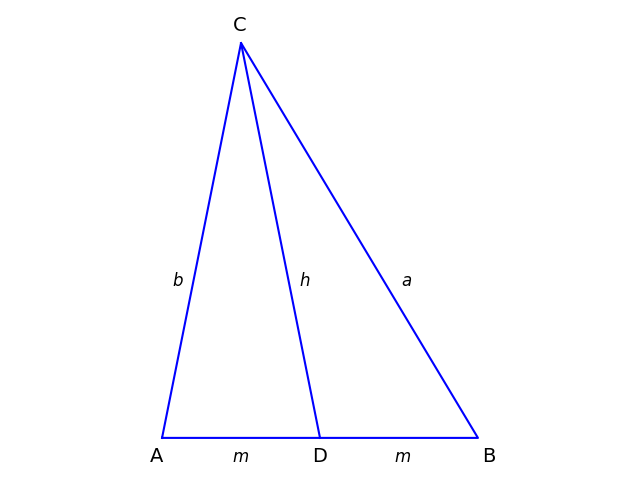

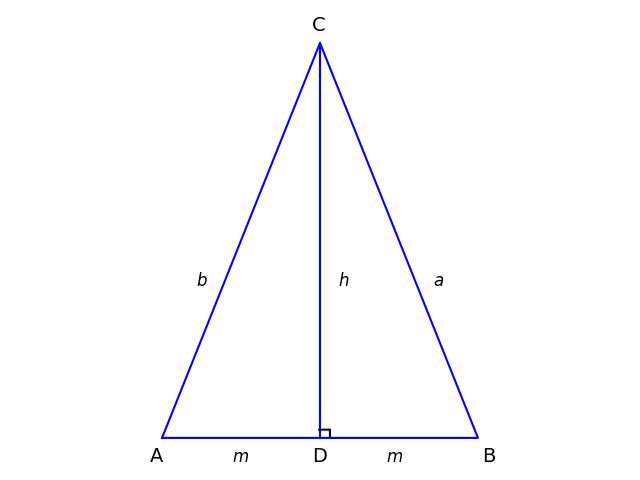

When you’re given two sides and an angle, there is a unique solution if the angle is between the two sides (SAS), but there may be two solutions if the angle is opposite one of the sides (SSA). This is the same for spherical and plane triangles.
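Here’s a minimal sketch of both cases, assuming a unit sphere, radians, and a, b, A strictly between 0 and π; the function names are hypothetical. For SSA it does not use the law of sines, which produces candidate angles that you then have to check. Instead it solves the law of cosines cos a = cos b cos c + sin b sin c cos A directly for the unknown side c. Placing vertex A at the pole shows that every root c in (0, π) gives a genuine triangle, so the number of roots is the number of solutions.

```python
import math

def sas_third_side(a, b, C):
    """SAS: the side c opposite the included angle C (radians). Always unique."""
    cos_c = math.cos(a) * math.cos(b) + math.sin(a) * math.sin(b) * math.cos(C)
    return math.acos(max(-1.0, min(1.0, cos_c)))

def ssa_third_sides(a, b, A):
    """SSA: all possible third sides c, given sides a, b and angle A opposite a.

    Solves cos a = cos b cos c + sin b sin c cos A for c in (0, pi).
    Assumes a, b, A lie strictly between 0 and pi.
    """
    P = math.cos(b)
    Q = math.sin(b) * math.cos(A)
    R = math.hypot(P, Q)
    if R < 1e-12:
        # b = A = pi/2: the equation no longer constrains c (degenerate case).
        raise ValueError("third side not determined")
    phi = math.atan2(Q, P)        # P cos c + Q sin c = R cos(c - phi)
    ratio = math.cos(a) / R
    if abs(ratio) > 1:
        return []                 # no triangle fits these data
    delta = math.acos(ratio)
    roots = []
    for c in (phi + delta, phi - delta):
        c %= 2 * math.pi          # reduce to [0, 2*pi)
        if 0 < c < math.pi and not any(math.isclose(c, r) for r in roots):
            roots.append(c)
    return sorted(roots)

print(len(ssa_third_sides(0.6, 1.0, 0.5)))   # 2: the ambiguous case
print(len(ssa_third_sides(1.2, 1.0, 0.5)))   # 1: a longer than b
```

SAS is a single formula with a single answer. For SSA, a = 0.6, b = 1.0, A = 0.5 gives two admissible third sides, while making a longer than b leaves only one, just as in the plane.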

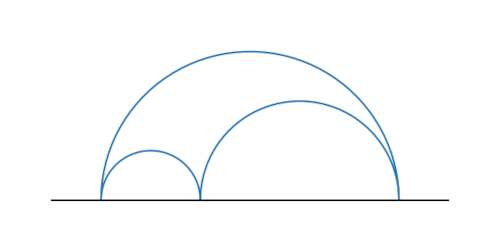

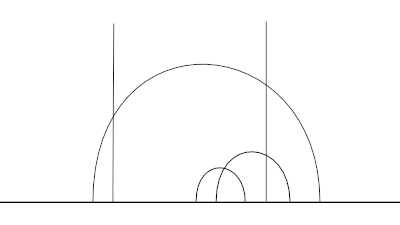

In the spherical case there can be even more than two solutions. Consider a triangle with one vertex at the North Pole and two vertices on the equator. The two sides running from the pole to the equator are specified (each is a quarter of a great circle), and so are the angles at the equator (both are right angles), but the side of the triangle along the equator could be any length. This SSA problem has a continuum of solutions.
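To check the example numerically, here’s a small sketch that represents vertices as unit vectors and measures sides and angles directly from the geometry rather than from a trig identity. The helper names are hypothetical, and `lam` is the longitude gap between the two vertices on the equator.

```python
import math

def point(lat, lon):
    """Unit vector for the point at the given latitude and longitude (radians)."""
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def side(u, v):
    """Great-circle distance between unit vectors u and v on the unit sphere."""
    return math.acos(max(-1.0, min(1.0, dot(u, v))))

def angle_at(p, q, r):
    """Angle at vertex p between the great-circle arcs p->q and p->r."""
    # Tangent directions at p: remove the component along p from q and from r.
    pq, pr = dot(p, q), dot(p, r)
    tq = [qk - pq * pk for qk, pk in zip(q, p)]
    tr = [rk - pr * pk for rk, pk in zip(r, p)]
    cos_angle = dot(tq, tr) / math.sqrt(dot(tq, tq) * dot(tr, tr))
    return math.acos(max(-1.0, min(1.0, cos_angle)))

north = (0.0, 0.0, 1.0)
for lam in (0.3, 1.0, 2.5):
    vb, vc = point(0.0, 0.0), point(0.0, lam)   # the two equator vertices
    print(f"lam={lam}: PB={side(north, vb):.4f} PC={side(north, vc):.4f} "
          f"B={angle_at(vb, north, vc):.4f} C={angle_at(vc, north, vb):.4f} "
          f"BC={side(vb, vc):.4f}")
```

For each `lam`, the two sides from the pole and both angles at the equator come out as π/2 (about 1.5708), while the equatorial side BC equals `lam`: the same data fit infinitely many triangles.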

ASA and AAS

When you’re given two angles and a side, there is a unique solution if the side lies between the two angles (ASA). This holds for both spherical and plane triangles.

If the side is opposite one of the angles (AAS), there may be two solutions for a spherical triangle, but only one for a plane triangle. In a plane triangle the angles sum to 180°, so two angles determine the third and AAS reduces to ASA. The angles of a spherical triangle sum to more than 180°, by an amount proportional to the triangle’s area, so two angles do not determine the third.
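A sketch of the contrast, assuming a unit sphere, radians, and A, B, a strictly between 0 and π; the function names are hypothetical. The plane case is a single subtraction. The spherical case solves the law of cosines for angles, cos A = −cos B cos C + sin B sin C cos a, for the unknown angle C. Each root C in (0, π) gives a valid triangle, so counting roots counts solutions.

```python
import math

def plane_aas_third_angle(A, B):
    """Plane triangle: the angles sum to pi, so the third angle is forced."""
    return math.pi - A - B

def spherical_aas_third_angles(A, B, a):
    """Spherical AAS: all possible third angles C, given angles A, B and side a opposite A.

    Solves cos A = -cos B cos C + sin B sin C cos a for C in (0, pi).
    """
    P = -math.cos(B)
    Q = math.sin(B) * math.cos(a)
    R = math.hypot(P, Q)
    if R < 1e-12:
        # B = a = pi/2: C is not constrained at all (the pole-and-equator example).
        raise ValueError("third angle not determined")
    phi = math.atan2(Q, P)        # P cos C + Q sin C = R cos(C - phi)
    ratio = math.cos(A) / R
    if abs(ratio) > 1:
        return []                 # no triangle fits these data
    delta = math.acos(ratio)
    roots = []
    for C in (phi + delta, phi - delta):
        C %= 2 * math.pi          # reduce to [0, 2*pi)
        if 0 < C < math.pi and not any(math.isclose(C, r) for r in roots):
            roots.append(C)
    return sorted(roots)

print(plane_aas_third_angle(1.0, 1.2))            # one value, pi - 2.2
print(spherical_aas_third_angles(1.0, 1.2, 0.6))  # two admissible values
print(spherical_aas_third_angles(1.2, 1.0, 0.6))  # one admissible value
```

With A = 1.0, B = 1.2, a = 0.6 the plane triangle’s third angle is forced, while the spherical triangle admits two values of C; swapping A and B leaves only one.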

The example above of a triangle with one vertex at the pole and two on the equator also shows that an AAS problem could have a continuum of solutions.

Summary

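| Given | Plane | Spherical |
|-------|-------|-----------|
| SSS | unique | unique |
| AAA | infinitely many | unique |
| SAS | unique | unique |
| SSA | may be two | may be two or more |
| ASA | unique | unique |
| AAS | unique | may be two or more |

Note that spherical triangles have a symmetry that plane triangles don’t: the spherical column above remains unchanged if you swap S’s and A’s. This is an example of duality in spherical geometry.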
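One way to see where the duality comes from is the polar triangle, a standard construction whose vertices are the poles of the original triangle’s sides. Its sides are π − A, π − B, π − C and its angles are π − a, π − b, π − c, so solving a problem for a triangle is the same as solving the dual problem (S’s and A’s swapped) for its polar triangle. The sketch below checks those relations numerically for one arbitrary triangle, using the law of cosines for sides; the function names are hypothetical.

```python
import math

def angles_from_sides(a, b, c):
    """SSS: angles (A, B, C) opposite sides (a, b, c), via the law of cosines for sides."""
    def angle(opp, s1, s2):
        x = (math.cos(opp) - math.cos(s1) * math.cos(s2)) / (math.sin(s1) * math.sin(s2))
        return math.acos(max(-1.0, min(1.0, x)))
    return angle(a, b, c), angle(b, c, a), angle(c, a, b)

def polar_sides(a, b, c):
    """Sides of the polar triangle: pi minus each angle of the original triangle."""
    return tuple(math.pi - t for t in angles_from_sides(a, b, c))

sides = (0.8, 1.1, 1.3)
polar = polar_sides(*sides)
# pi minus the polar triangle's angles should reproduce the original sides,
print([math.pi - t for t in angles_from_sides(*polar)])
# so taking the polar twice should also return the original sides.
print(polar_sides(*polar))
```

Both lines should print values very close to (0.8, 1.1, 1.3).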