I saw an integral posted online that came from this year’s MIT Integration Bee.

My thoughts on seeing this were, in order:

- It looks like a beta function.

- The answer is a small number.

- You can evaluate the integral using the substitution $u = 1 - x^{2025}$.

I imagine most students’ reactions would be roughly the opposite. They’d first see the substitution. Some might notice that the value is small, and none would think of beta functions.
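For reference, here is how the substitution plays out. This is a sketch that assumes the Integration Bee integral is $\int_0^1 x^{2024}(1 - x^{2025})^{2025}\,dx$. The problem itself appears only as an image, so this form is inferred from the substitution above and from the beta-function variant discussed later.

$$
\begin{aligned}
u &= 1 - x^{2025}, \qquad du = -2025\, x^{2024}\, dx, \\
\int_0^1 x^{2024}\left(1 - x^{2025}\right)^{2025} dx
  &= \frac{1}{2025}\int_0^1 u^{2025}\, du
   = \frac{1}{2025 \cdot 2026}
   = \frac{1}{4102650} \approx 2.4 \times 10^{-7}.
\end{aligned}
$$

As $x$ runs from 0 to 1, $u$ runs from 1 to 0. The minus sign in $du$ flips the limits back to $[0, 1]$.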

Size estimate

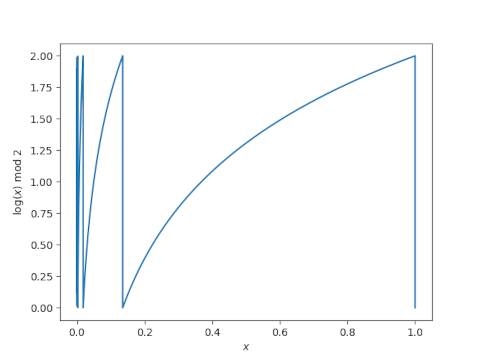

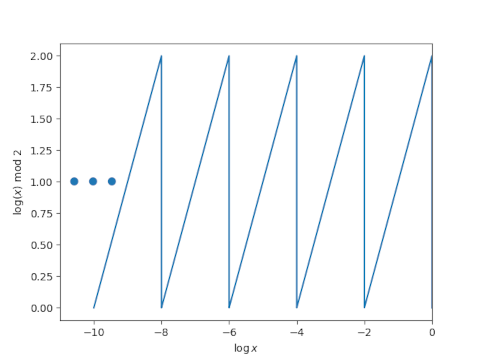

The value of the integral is small because you have numbers between 0 and 1 being raised to large powers. In real applications, being able to estimate the size of an integral may be more valuable than being able to compute the integral.

But on a quiz, like the Integration Bee, knowing that the result is small would be worthless, except possibly to check a result. You know from the context of the problem being on a timed quiz that there must be a trick [1].

Incidentally, if you changed the problem slightly, say by replacing one of the instances of 2025 with 2025.03, the integration problem would become much harder, but you could still estimate that the value is small.
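A brute-force numerical estimate confirms that the value is small, and it works just as well for the 2025.03 variant. This sketch rests on two assumptions:

- The Integration Bee integrand is $x^{2024}(1 - x^{2025})^{2025}$. The problem appears only as an image.
- The 2025.03 change is made to the inner exponent.

`bee_integrand` and its parameter `p` are hypothetical names. The sketch uses composite Simpson's rule on a fine grid, because the integrand is a narrow spike just below $x = 1$.

```python
def bee_integrand(x: float, p: float = 2025.0) -> float:
    # ASSUMPTION: the Integration Bee integrand is x^2024 (1 - x^2025)^2025.
    # The hypothetical parameter p replaces the inner exponent 2025 so the
    # 2025.03 variant can be tried; p = 2025 gives the original problem.
    return x ** 2024 * (1.0 - x ** p) ** 2025


def simpson(f, a: float, b: float, n: int) -> float:
    """Composite Simpson's rule for the integral of f over [a, b] (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# The integrand is a spike near x = 0.996 with width roughly 1/2025, so use a
# fine grid: a step of 5e-6 puts about 100 nodes across the spike.
N_STEPS = 200_000
exact = 1 / (2025 * 2026)  # value given by the substitution u = 1 - x^2025
original = simpson(bee_integrand, 0.0, 1.0, N_STEPS)
variant = simpson(lambda x: bee_integrand(x, 2025.03), 0.0, 1.0, N_STEPS)

print(f"original: {original:.6e} (exact {exact:.6e})")
print(f"variant with 2025.03: {variant:.6e}")
```

With $p = 2025$ the estimate agrees closely with $1/(2025 \cdot 2026)$. With $p = 2025.03$ it changes only slightly. Estimating the size does not depend on spotting the trick.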

Beta functions

The first time I saw someone evaluate an integral by recognizing a beta function, it seemed like he was pulling a rabbit out of a hat. I was completely surprised that he took for granted something I’d never seen.

Years later I went to work in biostatistics at MDACC and worked with the beta function and beta random variables all the time. If you look through my publications, you’ll see the word “beta” appears several times.

The beta function is defined as follows. You could take the first equality as the definition and the second as a theorem.

The integrand in the definition of the beta function is the probability density function for a beta(x, y) random variable, and so B(x, y) is the normalizing constant for a beta(x, y) probability distribution.

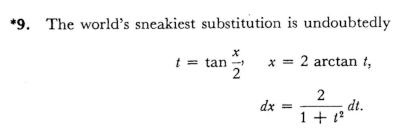
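Here is a small standard-library check of the two equalities and the normalizing-constant remark. The sketch makes three moves:

- It integrates $t^{x-1}(1-t)^{y-1}$ over $[0, 1]$ with composite Simpson's rule. This method is a choice made for the sketch, not something from the original post.
- It compares that integral with $\Gamma(x)\Gamma(y)/\Gamma(x+y)$ computed by `math.gamma`.
- It checks that dividing the integrand by $B(x, y)$ gives a density with total mass 1.

The helper names are hypothetical. The examples use $x, y \ge 1$ so the integrand stays bounded at the endpoints.

```python
import math


def beta_via_gamma(x: float, y: float) -> float:
    """B(x, y) from the second equality: Gamma(x) Gamma(y) / Gamma(x + y)."""
    return math.gamma(x) * math.gamma(y) / math.gamma(x + y)


def simpson(f, a: float, b: float, n: int = 10_000) -> float:
    """Composite Simpson's rule for the integral of f over [a, b] (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def beta_pdf(t: float, x: float, y: float) -> float:
    """Beta(x, y) density: the beta-function integrand divided by B(x, y)."""
    return t ** (x - 1) * (1 - t) ** (y - 1) / beta_via_gamma(x, y)


results = {}
for x, y in [(2.0, 3.0), (2.5, 3.5)]:
    # First equality: integrate t^(x-1) (1-t)^(y-1) over [0, 1] numerically.
    by_integral = simpson(lambda t: t ** (x - 1) * (1 - t) ** (y - 1), 0.0, 1.0)
    # Dividing by B(x, y) should give a density whose total mass is 1.
    pdf_mass = simpson(lambda t: beta_pdf(t, x, y), 0.0, 1.0)
    results[(x, y)] = (by_integral, beta_via_gamma(x, y), pdf_mass)
    print(f"x={x}, y={y}: integral={by_integral:.12f}, "
          f"gamma formula={beta_via_gamma(x, y):.12f}, pdf mass={pdf_mass:.12f}")
```

For $x = 2, y = 3$ both columns equal $1!\,2!/4! = 1/12$ up to rounding. SciPy also provides `scipy.special.beta` if you'd rather not roll your own.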

The integral in the Integration Bee question is not a beta function. I thought maybe it could be turned into a beta function, but the u substitution is simpler.

If you change the integrand above to

$$x^{2024} (1 - x)^{2025}$$

then you do have a beta function. The value of this integral is B(2025, 2026) = Γ(2025) Γ(2026) / Γ(4051). Since Γ(n) = (n − 1)! for positive integers n, this equals 2024! 2025! / 4050!, an extremely small number.

Numerical estimation

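The integral in the previous section is roughly the same as that of $x^{2024}(1 - x)^{2024}$, and no larger, since $(1 - x)^{2025} \le (1 - x)^{2024}$ on $[0, 1]$. This function has its maximum at $x = 1/2$, where it equals $4^{-2024} = 2^{-4048} \approx 10^{-1219}$. Because the interval of integration has length 1, the integral is also less than $2^{-4048}$.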

If we want to evaluate 2024! 2025! / 4050! numerically, we’ll need to be indirect about it. 2024! would overflow a double-precision floating point number, and the final result would underflow. We could calculate the log base 10 of the value using Python as follows.

```python
>>> from scipy.special import gammaln
>>> from math import log
>>> # gammaln(n + 1) is log(n!), computed without forming n! itself
>>> (gammaln(2025) + gammaln(2026) - gammaln(4051))/log(10)
-1220.576093208249
```

So our upper bound got us to within an order of magnitude or two of the result.
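If SciPy isn't at hand, the standard library can cross-check this number. This is an alternative sketch, not the method used above, and it computes the value two ways:

- `math.lgamma` plays the role of `gammaln`.
- The ratio is computed exactly with integers. Because $4050! = 4050 \cdot 4049!$ and $4049!/(2024!\,2025!) = \binom{4049}{2024}$, we have $B(2025, 2026) = 1/\bigl(4050\binom{4049}{2024}\bigr)$. `math.log10` accepts arbitrarily large integers, so nothing overflows.

It also compares the result with the bound $2^{-4048}$ derived above.

```python
import math

# Exact integer route: 2024! 2025! / 4050! = 1 / (4050 * C(4049, 2024)),
# since 4050! = 4050 * 4049! and 4049! / (2024! 2025!) = C(4049, 2024).
log10_exact = -math.log10(4050 * math.comb(4049, 2024))

# Floating point route via log-gamma, like the SciPy session but stdlib only.
log10_lgamma = (math.lgamma(2025) + math.lgamma(2026) - math.lgamma(4051)) / math.log(10)

# Upper bound 2^-4048: the maximum of x^2024 (1 - x)^2024, attained at x = 1/2.
log10_bound = -4048 * math.log10(2)

print(log10_exact, log10_lgamma)
print("orders of magnitude between bound and value:", log10_bound - log10_exact)
```

The gap between the bound and the value comes out to about 2, consistent with "an order of magnitude or two."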

[1] When you see the current year used in a math problem, the quiz writer is winking at you. The year is often meant to suggest that a number is large but somewhat arbitrary. Here you suspect that the key to the question would be the same if 2024 and 2025 were replaced with 1997 and 1998. Also, the year is often a hint that you don’t want to use brute force. In this case, it’s a warning not to expand the integrand using the binomial theorem.
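The footnote’s warning can be checked directly. This sketch again assumes the integrand is $x^{2024}(1 - x^{2025})^{2025}$ and expands it with the binomial theorem. Term-by-term integration does give the right answer, but only with exact rational arithmetic (Python’s `fractions.Fraction`). The middle coefficients, such as $\binom{2025}{1012}$, have over 600 digits and cannot even be converted to a double.

```python
from fractions import Fraction
from math import comb

N = 2025  # the year in the (assumed) integrand x^(N-1) (1 - x^N)^N

# Binomial theorem: (1 - x^N)^N = sum_k (-1)^k C(N, k) x^(N k), and
#   integral_0^1 x^(N-1) x^(N k) dx = 1 / (N (k + 1)).
brute_force = sum(
    Fraction((-1) ** k * comb(N, k), N * (k + 1)) for k in range(N + 1)
)

# Exact rational arithmetic reproduces the substitution answer 1 / (N (N + 1)).
print(brute_force == Fraction(1, N * (N + 1)))  # True

# Floating point cannot even hold the middle coefficient C(2025, 1012).
try:
    float(comb(N, N // 2))
    middle_fits_in_double = True
except OverflowError:
    middle_fits_in_double = False
print("middle coefficient fits in a double:", middle_fits_in_double)  # False
```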