Suppose we have an n × n chessboard. The case n = 8 is of course most common, but we consider all positive integer values of n.

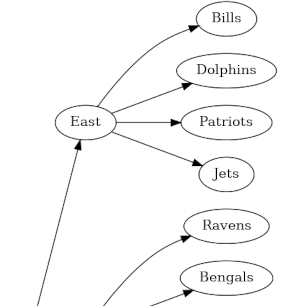

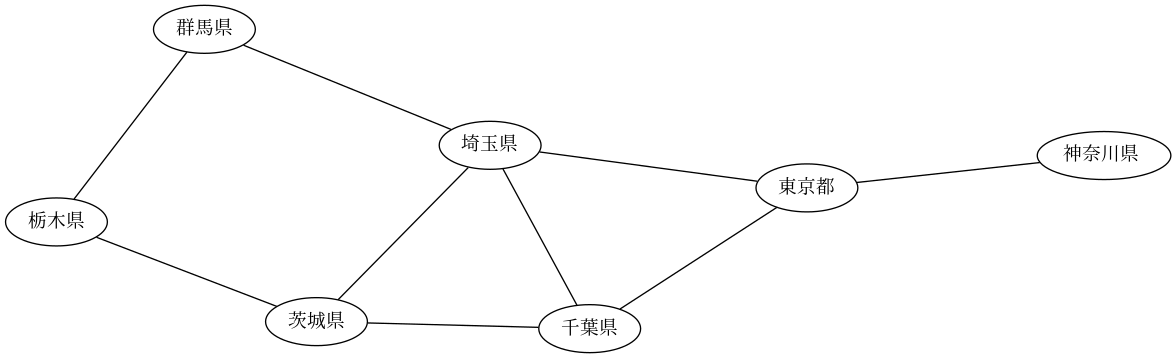

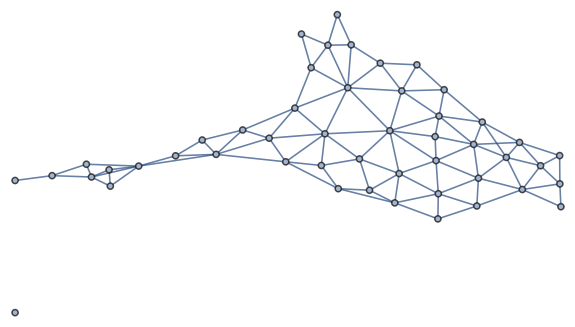

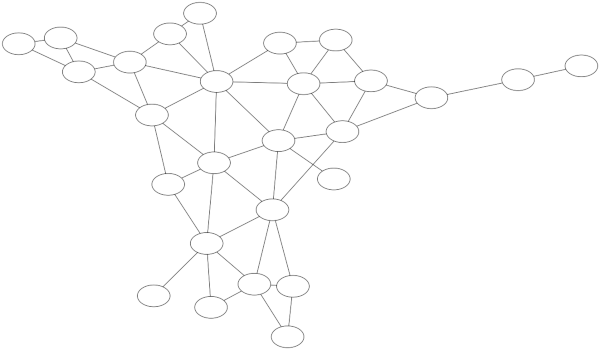

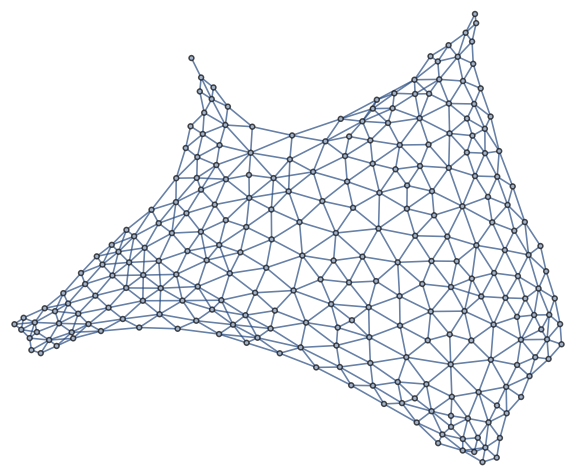

The graph of a chess piece has an edge between two squares if and only if the piece can legally move between the two squares.

Now suppose we have a rule that if a piece can move between two squares, the squares must be colored differently. The minimum number of colors necessary to color the chessboard this way is the chromatic number of that piece’s graph.

The chromatic number of a rook is clearly at least n: since a rook can move between any two squares in a row, every square in the row must be a different color. And in fact the chromatic number for a rook’s graph is exactly n.
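To see that n colors suffice for a rook, here is a minimal sketch in Python. It assumes squares are (row, column) pairs indexed from 0 and uses the coloring (row + column) mod n, which is one convenient choice and not necessarily the one used in [1]. The helper names `rook_moves`, `is_proper`, and `rook_coloring` are made up for this illustration.

```python
from itertools import combinations

def rook_moves(a, b):
    """True if a rook can move between distinct squares a and b."""
    return a != b and (a[0] == b[0] or a[1] == b[1])

def is_proper(n, can_move, color):
    """Check that no two squares joined by a legal move share a color."""
    squares = [(r, c) for r in range(n) for c in range(n)]
    return all(color(a) != color(b)
               for a, b in combinations(squares, 2) if can_move(a, b))

def rook_coloring(n):
    """Color square (r, c) with (r + c) mod n, using exactly n colors."""
    return lambda sq: (sq[0] + sq[1]) % n

# Two squares in the same row differ in column by less than n,
# so their colors differ mod n; the same holds for columns.
print(all(is_proper(n, rook_moves, rook_coloring(n)) for n in range(1, 9)))
```

Combined with the lower bound from a single row, a proper coloring with n colors shows the chromatic number is exactly n.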

Bishops are a little more complicated. If n is even, then the chromatic number for a bishop is n. If n is odd, the number is n for a white bishop but n − 1 for a black bishop. Thinking of a 3 × 3 board shows why the two bishops are different.
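The 3 × 3 case is small enough to check by brute force. The sketch below assumes squares are (row, column) pairs indexed from 0 and splits the board by the parity of row + column; which parity class counts as "white" is a matter of convention, so the code only labels the classes by parity. The function names are hypothetical, and the exhaustive search is only practical for tiny graphs.

```python
from itertools import product

def bishop_moves(a, b):
    """True if a bishop can move between distinct squares a and b."""
    return a != b and abs(a[0] - b[0]) == abs(a[1] - b[1])

def chromatic_number(vertices, adjacent):
    """Smallest k admitting a proper k-coloring, by exhaustive search."""
    vertices = list(vertices)
    m = len(vertices)
    for k in range(1, m + 1):
        for colors in product(range(k), repeat=m):
            if all(colors[i] != colors[j]
                   for i in range(m) for j in range(i + 1, m)
                   if adjacent(vertices[i], vertices[j])):
                return k
    return 0  # empty graph

n = 3
for parity in (0, 1):
    squares = [(r, c) for r in range(n) for c in range(n)
               if (r + c) % 2 == parity]
    print(parity, len(squares), chromatic_number(squares, bishop_moves))
```

The parity class containing the corners includes a full diagonal of three squares that are all mutually reachable, so it needs three colors. The other class has only four squares arranged in a cycle, so two colors are enough.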

Now a queen is sorta like a rook and a bishop combined, but determining the chromatic number for a queen’s graph is more difficult. The chromatic number of a queen can be no less than that of a rook since the former can make all the moves of the latter. So the queen’s chromatic number is at least n, but is it equal to n?

The results stated above can be found in [1].

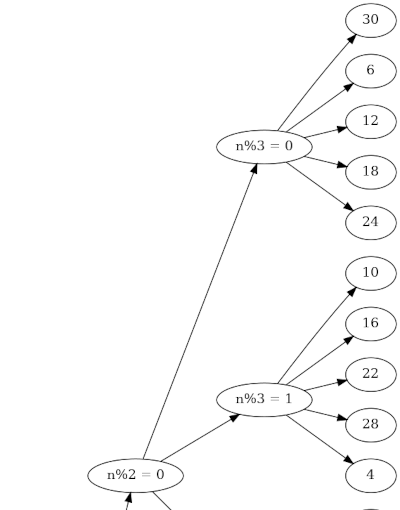

Let k(n) be the chromatic number of a queen’s graph on the n × n chessboard. The results in [1] regarding k(n) are summarized as follows.

- k(1) = 1

- k(2) = 4

- k(3) = k(4) = 5

- k(6) = 7

- k(n) = n if n is not divisible by 2 or 3.
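The small cases and the last rule can be explored with a short Python sketch. Two assumptions to flag: the backtracking search is my own illustration, not the method of [1] or of the C++ program mentioned below, and the coloring (row + 2 · column) mod n is a standard modular construction that I am using to illustrate the last bullet, not necessarily the one in the paper. All function names are hypothetical.

```python
from itertools import combinations

def queen_moves(a, b):
    """True if a queen can move between distinct squares a and b."""
    dr, dc = abs(a[0] - b[0]), abs(a[1] - b[1])
    return a != b and (dr == 0 or dc == 0 or dr == dc)

def modular_coloring_is_proper(n):
    """Check the coloring (r + 2c) mod n on the n x n queen's graph."""
    squares = [(r, c) for r in range(n) for c in range(n)]
    color = lambda sq: (sq[0] + 2 * sq[1]) % n
    return all(color(a) != color(b)
               for a, b in combinations(squares, 2) if queen_moves(a, b))

def colorable(n, k):
    """Backtracking search for a proper k-coloring of the queen's graph."""
    squares = [(r, c) for r in range(n) for c in range(n)]
    assigned = {}

    def extend(i, used):
        if i == len(squares):
            return True
        sq = squares[i]
        # Symmetry breaking: try colors already in use, plus one new color.
        for col in range(min(used + 1, k)):
            if all(assigned[o] != col
                   for o in squares[:i] if queen_moves(sq, o)):
                assigned[sq] = col
                if extend(i + 1, max(used, col + 1)):
                    return True
                del assigned[sq]
        return False

    return extend(0, 0)

def queen_chromatic_number(n):
    """Smallest k for which a proper coloring exists (small n only)."""
    k = n  # a row is a set of n mutually reachable squares
    while not colorable(n, k):
        k += 1
    return k

print([queen_chromatic_number(n) for n in range(1, 5)])
print([n for n in range(1, 14) if modular_coloring_is_proper(n)])
```

The modular coloring changes by 2, 1, 3, and 1 times the step size along rows, columns, diagonals, and anti-diagonals respectively, so it is proper exactly when neither 2 nor 3 shares a factor with n. The backtracking search is exponential in the worst case and is meant only for the first few values of n.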

The authors stated that they had found an example proving that k(8) ≤ 9 and conjectured that k(8) = 9. You can find a C++ program that confirms this conjecture here.

The authors conjectured that k(n) ≤ n + 1 for all n > 3. I don’t know whether this has been proven. My impression following a brief search is that the problem of finding k(n) for all n is still not fully resolved.

Update: According to a link in the comments, n = 27 is the smallest n such that k(n) is unknown.


[1] M. R. Iyer and V. V. Menon. On Coloring the n × n Chessboard. The American Mathematical Monthly, 1966, Vol. 73, pp. 721–725.