The recent post Converting between quaternions and rotation matrices describes an algorithm as “a mathematically correct but numerically suboptimal method/”

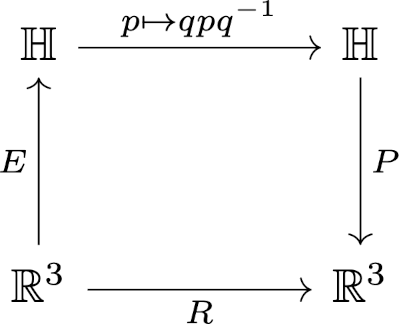

Let’s just look at a small piece of that post. The post explains how to find a rotation matrix to describe the same rotation as pre- and post- multiplying by a unit quaternion q, and how to recover q from a rotation. The latter involves

q0 = ½ √(1 + r11 + r22 + r33).

If you look at the definition of the r terms you’ll see that the quantity under the square root equal 4q0² and so the term is positive. In theory. In floating point arithmetic, the term you’re taking the square root of could be small or even slightly negative.

I mentioned at the bottom of the earlier post that I came up with a unit quaternion q that caused the code to throw an exception because it attempted to take the square root of a negative number.

There’s an easy way to keep the code from throwing an exception: check whether the argument is positive before taking the square root. If it’s positive, forge ahead. If it’s negative, then round it up to zero.

This makes sense. The argument is theoretically positive, so rounding it up to zero can’t do any harm, and in fact it would to a tiny bit of good. But this is missing something.

As a general rule of thumb, if an algorithm has trouble when a quantity is negative, it might have trouble when the quantity is small but positive too.

How could the term

1 + r11 + r22 + r33

which is theoretically positive be computed as a negative number? When all precision is lost in the sum. And this happens when

r11 + r22 + r33

is nearly −1, i.e. when you’re subtracting nearly equal numbers. In general, if two positive numbers a and b are the same up to n bits, you can lose n bits of precision when subtracting them. You could lose all of your precision if two numbers agree to n bits and all you have are n bits.

Putting in a “breaker” to prevent taking the square root of a negative number doesn’t address the problem of code not throwing an exception but returning an inaccurate result. Maybe you’re subtracting two numbers that don’t agree to all the bits of precision you have, but they agree to more bits than you’d care to lose.

The solution to this problem is to come up with another expression for q0 and the components, another expression that is equal in theory but numerically less sensitive, i.e. one that avoids subtracting nearly equal numbers. That’s what the authors do in the paper cited in the previous post.