I just revised a post from a week ago about rotations. The revision makes explicit the process of embedding a 3D vector into the quaternions, then pulling it back out.

The 3D vector is embedded in the quaternions by making it the vector part of a quaternion with zero real part:

(p1, p2, p3) → (0, p1, p2, p3)

and the quaternion is returned to 3D by cutting off the real part:

(p0, p1, p2, p3) → (p1, p2, p3).

To give names to the process of moving to and from quaternions, we have an embedding E from ℝ³ to ℝ⁴ and a projection P from ℝ⁴ to ℝ³.

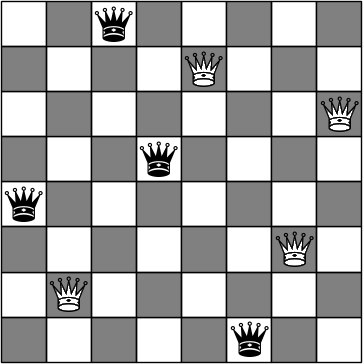

We can represent E as a 4 × 3 matrix

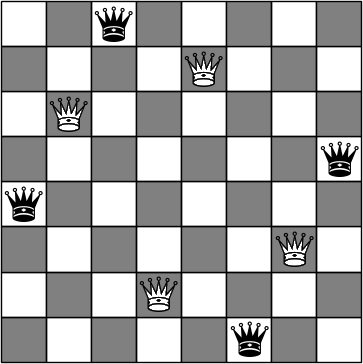

and P by a 3 × 4 matrix

$$
P = \begin{pmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{pmatrix}
$$

We’d like to say E and P are inverses. Surely P undoes what E does, so they’re inverses in that sense. But E cannot undo P because you lose information projecting from ℝ⁴ to ℝ³ and E cannot recover information that was lost.
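As a small illustration of that asymmetry, here is a sketch in plain Python. The function names `embed` and `project` are made up for this example; they stand in for E and P.

```python
def embed(p):
    """E: make a 3D vector the vector part of a quaternion with zero real part."""
    p1, p2, p3 = p
    return (0.0, p1, p2, p3)

def project(q):
    """P: cut off the real part of a quaternion, keeping the vector part."""
    _, q1, q2, q3 = q
    return (q1, q2, q3)

v = (1.0, 2.0, 3.0)
round_trip = project(embed(v))   # P undoes E: we get v back

q = (5.0, 1.0, 2.0, 3.0)
other_trip = embed(project(q))   # E cannot undo P: the real part 5.0 is gone

print(round_trip == v)   # True
print(other_trip == q)   # False
```

Going from ℝ³ up and back down returns the original vector, but going from ℝ⁴ down and back up replaces the real part with zero.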

The rest of this post will look at three generalizations of inverses and how E and P relate to each.

Left and right inverse

Neither matrix is invertible, but PE equals the identity matrix on ℝ³, and so P is a left inverse of E and E is a right inverse of P.

On the other hand, EP is not an identity matrix, and so E is not a left inverse of P, and neither is P a right inverse of E.
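The two products are easy to check by hand, but here is a minimal sketch that computes them, assuming the matrices are stored as lists of rows. The helper `matmul` is written just for this example.

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# E sends (p1, p2, p3) to (0, p1, p2, p3).
E = [[0, 0, 0],
     [1, 0, 0],
     [0, 1, 0],
     [0, 0, 1]]

# P sends (p0, p1, p2, p3) to (p1, p2, p3).
P = [[0, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1]]

PE = matmul(P, E)   # 3 x 3
EP = matmul(E, P)   # 4 x 4

identity3 = [[int(i == j) for j in range(3)] for i in range(3)]
identity4 = [[int(i == j) for j in range(4)] for i in range(4)]

print(PE == identity3)   # True
print(EP == identity4)   # False: the top-left entry of EP is 0
```

EP is the 4 × 4 identity with its first diagonal entry replaced by zero, which is the matrix form of "the real part is lost."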

Adjoint

P is the transpose of E, which means it is the adjoint of E. Adjoints are another way to generalize the idea of an inverse. More on that here.
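For real matrices, saying P is the adjoint of E amounts to saying that the inner product of Ex with y in ℝ⁴ equals the inner product of x with Py in ℝ³. The following sketch spot-checks that identity on one pair of vectors; the vectors and helper names are arbitrary choices for illustration, and a single example is of course not a proof.

```python
def embed(x):
    """E: prepend a zero real part."""
    return [0, *x]

def project(y):
    """P: drop the real part."""
    return list(y[1:])

def dot(u, v):
    """Ordinary real inner product."""
    return sum(a * b for a, b in zip(u, v))

x = [1, 2, 3]        # a vector in R^3
y = [7, -1, 4, 2]    # a vector in R^4

lhs = dot(embed(x), y)     # <Ex, y> computed in R^4
rhs = dot(x, project(y))   # <x, Py> computed in R^3

print(lhs, rhs)   # 13 13
```

The real part of y never contributes to the left side because it is multiplied by the zero that E inserts.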

Pseudo-inverse

The Moore-Penrose pseudo-inverse acts a lot like an inverse, which is somewhat uncanny because all matrices have a pseudo-inverse, even rectangular matrices.

Pseudo-inverses are symmetric, i.e. if A+ is the pseudo-inverse of A, then A is the pseudo-inverse of A+.

Given an m by n matrix A, the Moore-Penrose pseudo-inverse A+ is the unique n by m matrix satisfying four conditions, where * denotes the conjugate transpose:

1. A A+ A = A

2. A+ A A+ = A+

3. (A A+)* = A A+

4. (A+ A)* = A+ A

To show that E+ = P we have to establish

1. EPE = E

2. PEP = P

3. (EP)* = EP

4. (PE)* = PE

We calculated EP and PE above, and both are real and symmetric, so properties 3 and 4 hold.

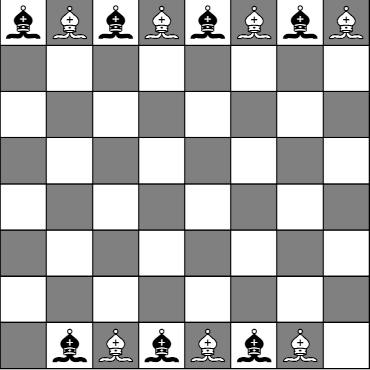

We can also compute, using the fact that PE is the identity on ℝ³,

EPE = E(PE) = E

and

PEP = (PE)P = P

showing that properties 1 and 2 hold as well.
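The four conditions can also be checked mechanically. Below is a minimal sketch in plain Python; `matmul`, `transpose`, and `is_pseudo_inverse` are helpers written for this example, and since the matrices are real the conjugate transpose is just the transpose. The matrix `Q` is a hypothetical second left inverse of E, included only to show that being a left inverse is not enough.

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def is_pseudo_inverse(A, B):
    """Check the four Moore-Penrose conditions for real matrices A and B."""
    AB, BA = matmul(A, B), matmul(B, A)
    return (matmul(AB, A) == A          # A B A = A
            and matmul(BA, B) == B      # B A B = B
            and transpose(AB) == AB     # A B is symmetric
            and transpose(BA) == BA)    # B A is symmetric

E = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
P = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

# Hypothetical alternative: QE is also the identity, but EQ is not symmetric.
Q = [[1, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

print(is_pseudo_inverse(E, P))   # True
print(is_pseudo_inverse(P, E))   # True
print(is_pseudo_inverse(E, Q))   # False
```

The second check reflects the symmetry of pseudo-inverses mentioned earlier: the four conditions are unchanged when the roles of the two matrices are swapped, so E is the pseudo-inverse of P as well.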