Richard Hamming [1] gives this nice example of an integral with a mild singularity:

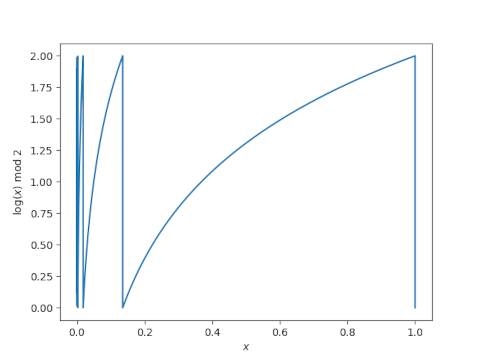

The integrand approaches −∞ as x approaches 0 and yet the integral is finite. If we try into numerically evaluate this integral, we will either get inaccurate results or we will have to go to a lot of work.

This post will show that with a clever reformulation of the problem we use simple methods to get accurate results, or use sophisticated methods with fewer function evaluations.

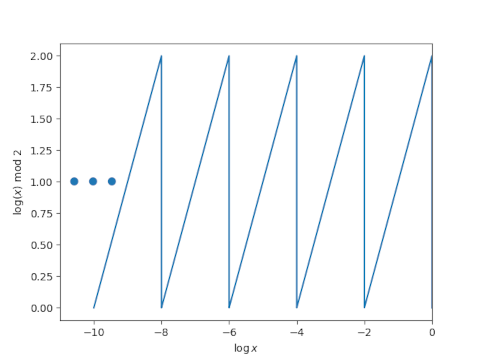

As I wrote about years ago, a common technique for dealing with singularities is to add and subtract a function with the same asymptotic behavior that can be integrated by hand. Hamming does a slight variation on this, multiplying and dividing by x inside the logarithm.

Here we integrated the singular part, log(x), and we are left with numerically integrating a well-behaved function, one that is smooth and bounded on the interval of integration. Because sin(x) ≈ x for small x, we can define sin(x)/x to be 1 at 0 and have a smooth function.

We’ll use NumPy’s sinc function to handle sin(x)/x properly near 0. There are two conventions for defining the sinc function, either as sin(x)/x or as sin(πx)/πx. NumPy uses the latter convention, so we define our own sinc function as follows.

import numpy as np

def mysinc(x): return np.sinc(x/np.pi)

Trapezoid rule

Let’s use the simplest numerical method, the trapezoid rule.

We run into a problem immediately: if we chop up the interval [0, 1] in a way that puts an integration point at 0, our resulting integral will be infinite. We could replace 0 with some ε > 0, but if we do, we should try a few different values of ε to see whether our choice of ε greatly impacts our integral.

for ε in [1e-6, 1e-7, 1e-8]:

xs = np.linspace(ε, 1, 100)

integral = sum( np.log(np.sin(x)) for x in xs ) / 100

This gives us

-1.152996659484881

-1.1760261818509377

-1.1990523999312201

suggesting that the integral does indeed depend on ε. We’ll see soon that our integral evaluates to around −1.05. So our results are not accurate, and the smaller ε is, the worse our answer is. [2]

Now let’s evaluate the integral as Hamming suggests. We’ll use a varying number of integration points so the difference between our integral estimates will give us some idea whether we’re converging.

for N in [25, 50, 100]:

xs = np.linspace(0, 1, N)

integral = sum( np.log(mysinc(xs)) )/N

integral -= 1 # subtract the integral of log(x)

print(integral)

This gives us

-1.0579531812873197

-1.057324013010989

-1.057019035348765

The consistency between the result suggests the integral is around −1.057.

Adaptive integration

We said at the top of this article that Hamming’s reformulation would either let us get accurate results from simple methods or let us get by with fewer function evaluations using a sophisticated method. Now we’ll demonstrate the latter, using the adaptive integration algorithm quad from SciPy.

from scipy.integrate import quad

# Integrate log(sin(x)) from 0 to 1

integral, error = quad(lambda x: np.log(np.sin(x)), 0, 1)

print(integral, error)

# Integrate log(x sin(x)/x) = log(x) + log(mysinc(x)) from 0 to 1

integral, error = quad(lambda x: np.log(mysinc(x)), 0, 1)

integral -= 1

print(integral, error)

This prints

-1.0567202059915843 1.7763568394002505e-15

-1.056720205991585 6.297207865333937e-16

suggesting that both approaches are working. Both estimate their error to be near machine precision. But as we’ll see, the direct approach uses about 10 times as many function evaluations.

Let’s ask quad to return more details by adding full_output = 1 as an argument.

integral, error, info = quad(lambda x: np.log(np.sin(x)), 0, 1, full_output=1)

print(integral, error, info["neval"])

integral, error, info = quad(lambda x: np.log(mysinc(x)), 0, 1, full_output=1)

integral -= 1

print(integral, error, info["neval"])

This shows that the first integration used 231 function evaluations, but the second used only 21.

The difference in efficiency doesn’t matter when you’re evaluating an integral one time, but if you were repeatedly evaluating similar integrals inside a loop, subtracting off the singularity could make your problem run 10 times faster. Simulations involving Bayesian statistics can have such integrations in the inner loop, and so making an integration an order of magnitude faster could make the overall simulation an order of magnitude faster, reducing CPU-days to CPU-hours.

Related posts

[1] Richard Hamming, Numerical Methods for Scientists and Engineers. Second edition. Dover.

[2] We can get better results if we let ε and 1/N go to zero at the same rate. The following code produces mediocre results, but better results than above.

for N in [10, 100, 1000]:

xs = np.linspace(1/N, 1, N)

integral = sum( np.log(np.sin(x)) for x in xs ) / N

print(integral)

This prints

-0.8577924592777062

-1.0253626377143321

-1.05243363818431

which at least seems to be getting closer to the correct result, but has bad accuracy for so many function evaluations.