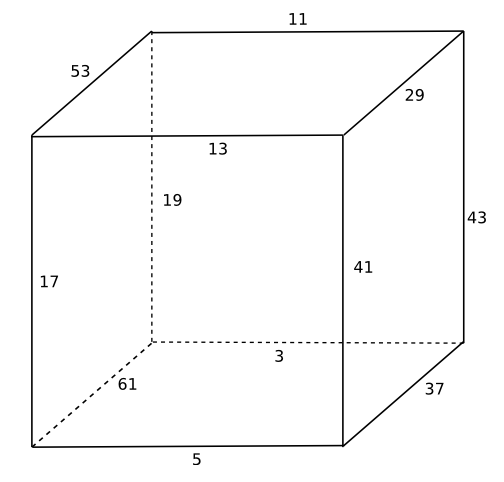

This is a drawing of Stewart’s cube. At each corner, the sum of the weights of the incoming edges is 83. Each edge has a prime weight, and all the weights are distinct.

Update: See Austin Buchanan’s post. He found a similar cube with smaller primes.

As I mentioned a few days ago, I’m winding down three of my Twitter accounts.

But I have started a new one: NetworkFact. This account has tweets about graph theory, analysis of large networks, etc.

Related links:

You can create an RSS feed for a Twitter account at RSS4Twitter, though they’re so overloaded that it might not work. You could also use BazQux RSS reader or try some of the alternatives mentioned in the comments here.

The Lambert W-function is the function w(z) implicitly defined by w exp(w) = z. When I first saw this, I thought that I’d seen something like this come up many times and that it would be really handy to know that this function has a name and has many software implementations [1]. Since then, however, I’ve hardly ever run into the W-function in application. But here’s an application I ran into recently.

A “giant component” in a random network is a component whose size grows in proportion to the size of the network. For a Poisson random network, let c = p(n – 1) be the expected number of vertices connected to any given vertex. If c > 1 then as n goes to infinity, there will be a giant component with probability 1. S, the proportion of nodes inside the giant component, satisfies the equation

S = 1 – exp(-cS).

S plays a role similar to that of w in the definition of the W-function, which suggests the W-function might come in handy. And in fact, this book gives this solution for S:

S = 1 + w( –c exp(-c) )/c.

There are a couple of issues. First, it’s not obvious that this solution is correct. Second, we need to be more careful about how we define w(z) when z is negative.

Given some value of c, let S = 1 + w( –c exp(-c) )/c. Then

w( –c exp(-c) ) = –c(1 – S).

Apply the function f(x) = x exp( x ) to both sides of the equation above. By the definition of the W-function, we have

–c exp(-c) = –c(1 – S) exp( –c(1 – S) ).

Dividing both sides by –c exp(-c) gives 1 = (1 – S) exp(cS). Multiplying both sides by exp(-cS) and rearranging, it follows that

S = 1 – exp(-cS).

Now let’s look more carefully at the definition of the W-function. For positive real z, w exp(w) = z has a unique real solution defining w. But in the calculations above, we have evaluated w at –c exp(-c), which is negative since c > 1. For negative arguments, we have to pick a branch of w. Which branch guarantees that S = 1 – exp(-cS)? Any branch [2]. No matter which solution to w exp(w) = z we pick, the resulting S satisfies the giant component equation. However, the wrong branch might result in a meaningless solution. Since S is a proportion, S should be between 0 and 1.

Let’s go back and say that for our purposes, we will take w(z) to mean the real number no less than -1 satisfying w exp(w) = z. Then for c > 1, the solution S = 1 + w( –c exp(-c) )/c is between 0 and 1. You can show that S = 1 – exp(-cS) has a unique solution for 0 < S < 1, so any other branch would not give a solution in this interval.
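Here is a minimal numerical check of the argument above, written as a sketch rather than a definitive implementation. The helper names `lambert_w0` and `giant_component_fraction` are made up for illustration, and Newton's method is just one convenient way to evaluate the branch with w ≥ -1; a library routine could be used instead.

```python
import math

def lambert_w0(z, tol=1e-14, max_iter=200):
    """Real w >= -1 with w*exp(w) = z, for z > -1/e (hypothetical helper).

    Newton's method on f(w) = w*exp(w) - z, started at w = 0. For
    -1/e < z < 0, f is convex on w > -1 and f(0) > 0, so the iterates
    decrease toward the root without crossing below -1.
    """
    if z <= -1.0 / math.e:
        raise ValueError("this sketch requires z > -1/e")
    w = 0.0
    for _ in range(max_iter):
        ew = math.exp(w)
        step = (w * ew - z) / (ew * (w + 1.0))
        w -= step
        if abs(step) < tol:
            break
    return w

def giant_component_fraction(c):
    """S = 1 + w(-c exp(-c))/c, using the branch with w >= -1."""
    return 1.0 + lambert_w0(-c * math.exp(-c)) / c

c = 2.0
S = giant_component_fraction(c)
residual = S - (1.0 - math.exp(-c * S))   # should be essentially zero
print(S, residual)

# The other real solution of w*exp(w) = -c*exp(-c) is w = -c itself,
# which gives S = 1 + (-c)/c = 0: it satisfies the equation but is
# meaningless as a giant component.
```

The last comment illustrates why the choice of branch matters: both real solutions satisfy S = 1 – exp(-cS), but only the one with w ≥ -1 lands strictly between 0 and 1.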

Related post: Measuring graph connectivity with eigenvalues

[1] For example, in Python the Lambert W-function is scipy.special.lambertw. The function takes two arguments: z and an integer denoting the branch. By default, the branch argument is set to 0, giving the branch discussed in this post.

[2] For -1/e < z < 0, there are two real branches of w(z), but there are also infinitely many complex branches.

From Graph Theory in the Information Age by Fan Chung:

Empirically, most real-world graphs have the following properties:

SIAM News arrived this afternoon and had an interesting story on the front page: Applying math to myth helps separate fact from fiction.

In a nutshell, the authors hope to get some insight into whether a myth is based on fact by seeing whether the social network of characters in the myth looks more like a real social network or like the social network in a work of deliberate fiction. For instance, the social networks of the Iliad and Beowulf look more like actual social networks than does the social network of Harry Potter. Real social networks follow a power law distribution more closely than do social networks in works of fiction.

This could be interesting. For example, the article points out that some scholars believe Beowulf has a basis in historical events, though they don’t believe that Beowulf the character corresponds to a historical person. The network approach lends support to this position: the Beowulf social network looks more realistic when Beowulf himself is removed.

It seems however that an accurate historical account might have a suspicious social network, not because the events in it were made up but because they were filtered according to what the historian thought was important.

John Johnson did an analysis of the statistics blogosphere for the Coursera Social Networking Analysis class. His blog post about the analysis lists some of the lessons he learned from the project. It also includes a link to his paper and to the Python code used to do the analysis.

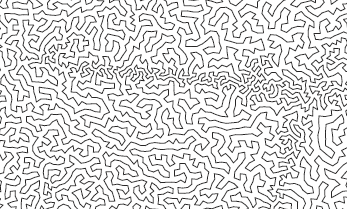

Image from Figure 6 of John’s paper.

Related: Help understanding complex networks

Bill Cook sent me a file yesterday that renders the Endeavour photo on my blog as the solution to a 66,290-city Traveling Salesman problem. His iPhone app Concord TSP chose 66,290 points and then solved for the shortest path connecting these points, a feat that would have strained a supercomputer a few years ago. (Bill Cook and I are not related as far as I know.)

Here is a thumbnail image of the full TSP tour:

You can find the full PDF here (1.24 MB). To show some of the detail, here is a close-up from near the top-left corner of the image:

I asked how the tour was constructed:

How do you construct a set of points whose TSP solution resembles a photograph? Is it sufficient to add more “cities” in regions where you want darker shading? And are the cities added at random with a density specified by color depth?

Bill Cook replied:

By default, the app will select the points along the line you describe: it splits the image into a grid, computes the average gray scale in each grid region, and drops a number of random cities into each grid region in proportion to the square of the average gray scale. This technique was first proposed by Bob Bosch and Adrianne Herman at Oberlin College. It is the default since it takes almost no time to compute, but I include two other options, that each take about a minute to render a large image on an iPhone 4.

The image of The Endeavour was created with a method Jim Bumgardner proposed in his Stipple Cam project.
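The default grid-based selection described in the reply can be sketched roughly as follows. This is only an illustration under stated assumptions, not the app's code: the function name `sample_cities`, the cell size, and the `cities_per_cell` scale are invented here, and "gray scale" is taken to mean darkness on a 0 to 1 scale (1 = black) so that darker regions receive more cities. The Endeavour image itself used a different method.

```python
import random

def sample_cities(darkness, cell=2, cities_per_cell=10, seed=0):
    """Drop random cities into grid cells, more in darker cells.

    darkness: 2D list of values in [0, 1], with 1 meaning black
    (an assumption about what "gray scale" means in the reply).
    """
    rng = random.Random(seed)
    rows, cols = len(darkness), len(darkness[0])
    cities = []
    for r0 in range(0, rows, cell):
        for c0 in range(0, cols, cell):
            r1, c1 = min(r0 + cell, rows), min(c0 + cell, cols)
            block = [darkness[r][c]
                     for r in range(r0, r1) for c in range(c0, c1)]
            mean = sum(block) / len(block)
            # Number of cities proportional to the square of the average.
            count = round(cities_per_cell * mean ** 2)
            for _ in range(count):
                cities.append((rng.uniform(c0, c1), rng.uniform(r0, r1)))
    return cities

# Tiny example: a black 2x2 block next to a mid-gray 2x2 block.
image = [[1.0, 1.0, 0.5, 0.5],
         [1.0, 1.0, 0.5, 0.5]]
cities = sample_cities(image, cell=2, cities_per_cell=8)
print(len(cities))
```

Squaring the average darkens the contrast: a cell half as dark gets a quarter as many cities, not half.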

Related post: Moore’s law squared

Imagine a set of points in three dimensions. You want to connect the points with the shortest possible network. Sometimes you can make the network shorter by adding extra points. (These extra points are called Steiner points.) How much can extra points help? The answer is worth $1,000.

Here’s an example. Suppose our points are the corners of a unit cube. You can connect these points with a network of length 7. If you add a point in the center of the cube and connect every point to the center, you get a network of length 4√3 ≈ 6.928. So in this case, adding an extra point made it possible to reduce the size of the minimal spanning network by about 1%. You could do better by adding more points.
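The numbers in the cube example are easy to check. The sketch below computes the minimal spanning tree of the eight corners with Prim's algorithm (my choice of method; the function name `mst_length` is just for illustration) and compares it with the star network through the center.

```python
import itertools
import math

def mst_length(points):
    """Total length of a minimum spanning tree (Prim's algorithm)."""
    # best[i] = distance from point i to the nearest point already in the tree
    best = {i: math.dist(points[0], points[i]) for i in range(1, len(points))}
    total = 0.0
    while best:
        i = min(best, key=best.get)      # closest point not yet in the tree
        total += best.pop(i)
        for j in best:
            best[j] = min(best[j], math.dist(points[i], points[j]))
    return total

corners = list(itertools.product((0, 1), repeat=3))   # unit cube corners
center = (0.5, 0.5, 0.5)

spanning = mst_length(corners)                        # no extra points
star = sum(math.dist(center, p) for p in corners)     # one extra point
saving = 1 - star / spanning

print(spanning, star, saving)
```

Each corner is √3/2 from the center, so the star has length 8 · √3/2 = 4√3, and the saving works out to a little over 1%.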

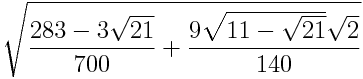

What is the most you can reduce the length of the minimum spanning network in three dimensions by adding extra points? The question concerns all possible sets of points, not just a particular set like the example above. It is conjectured that the most you can save is about 21.6%. That is, for any set of points, the ratio of the length of the shortest network with extra points to that of the shortest network without extra points is bounded below by

√( 283/700 – 3√21/700 + 9 √(11 – √21) √2 / 140 ) ≈ 0.784.

In their new book Magical Mathematics, Persi Diaconis and Ron Graham say “We currently offer a thousand dollars for a proof (or disproof) that this ratio is the best possible.”

As unwieldy as the number above appears, it makes some sense. It looks like the square roots come from repeated applications of the Pythagorean theorem. Someone may be able to reverse engineer the example the conjecture is based on by using the form of the proposed lower bound.

(Diaconis and Graham say that the corresponding problem in two dimensions has been solved and the optimal ratio is √3 / 2. However, this paper says that the conjecture is still unresolved, contrary to popular belief.)

The latest INFORMS podcast has an interview with David Alderson speaking about network science and protecting national infrastructure. He criticizes a couple network studies by saying the results are mathematically correct but the conclusions they draw are wrong because the models left out important details that would change the recommendations.

In many networks—social networks, electrical power networks, networks of web pages, etc.—the number of connections for each node follows a statistical distribution known as a power law. Here’s a model of network formation that shows how power laws can emerge from very simple rules.

Albert-László Barabási describes the following algorithm in his book Linked. Create a network by starting with two connected nodes. Then add nodes one at a time. Every time a new node joins the network, it connects to two older nodes at random with probability proportional to the number of links the old nodes have. That is, nodes that already have more links are more likely to get new links. If a node has three times as many links as another node, then it’s three times as attractive to new nodes. The rich get richer, just like with PageRank.
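Here is one way the algorithm described above might be coded. This is a sketch, not the code used for the experiment in this post. It makes two choices the description leaves open, both of which are assumptions: the two older nodes chosen by each newcomer are required to be distinct, and sampling proportional to link count is done by picking a random endpoint from a running list of link endpoints. The function name `preferential_attachment` is made up for illustration.

```python
import random
from collections import Counter

def preferential_attachment(n_nodes, seed=0):
    """Grow a network one node at a time; return the list of degrees."""
    rng = random.Random(seed)
    degree = [1, 1]        # start with two connected nodes
    endpoints = [0, 1]     # each node appears once per link it has
    for new in range(2, n_nodes):
        # A uniformly random endpoint is a node chosen with probability
        # proportional to its degree. Assumption: targets are distinct.
        targets = set()
        while len(targets) < 2:
            targets.add(rng.choice(endpoints))
        degree.append(0)
        for t in targets:
            degree[t] += 1
            degree[new] += 1
            endpoints.extend((t, new))
    return degree

degrees = preferential_attachment(10_000)
histogram = Counter(degrees)   # histogram[n] = number of nodes with n links
print(sum(degrees) / len(degrees), max(degrees), histogram[2])
```

Because every newcomer brings two links, the average degree is just under 4 regardless of the random seed, while the maximum degree varies from run to run.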

Barabási says this algorithm produces networks whose connectivity distribution follows a power law. That is, the number of nodes with n connections is proportional to n^(–k). In particular he says k = 3.

I wrote some code to play with this algorithm. As the saying goes, programming is understanding. There were aspects of the algorithm I never would have noticed had I not written code for it. For one thing, after I decided to write a program I had to read the description more carefully and I noticed I’d misunderstood a couple details.

If the number of nodes with n connections really is proportional to n^(–k), then when you plot the number of nodes with 1, 2, 3, … connections, the points should fall near a straight line when plotted on the log-log scale and the slope of this line should be –k.
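To make the log-log claim concrete, here is a small sketch that fits a line to (log n, log count) pairs by ordinary least squares. The choice of least squares and the function name `loglog_slope` are assumptions for illustration. The check uses counts that follow an exact power law with k = 3, so the fitted slope should come out as –3 up to rounding.

```python
import math

def loglog_slope(ns, counts):
    """Least-squares slope of log(count) against log(n)."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(c) for c in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

# Synthetic data following an exact power law: count = 1000 * n^(-3).
ns = list(range(2, 21))
counts = [1000.0 * n ** -3 for n in ns]
slope = loglog_slope(ns, counts)
print(slope)
```

With real simulation output, degrees that occur zero times have to be dropped before taking logarithms, and the sparse tail tends to be noisy, which can pull the fitted slope away from the theoretical value.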

In my experiment with 10,000 nodes, I got a line of slope -2.72 with a standard error of 0.04. Not exactly -3, but maybe the theoretical result only holds in the limit as the network becomes infinitely large. The points definitely fall near a straight line in log-log scale:

In this example, about half the nodes (5,073 out of 10,000) had only two connections. The average number of connections was 4, but the most connected node had 200 connections.

Note that the number of connections per node does not follow a bell curve at all. The standard deviation on the number of connections per node was 6.2. This means the node with 200 connections was over 30 standard deviations from the average. That simply does not happen with normal (Gaussian) distributions. But it’s not unusual at all for power law distributions because they have such thick tails.

Of course real networks are more complex than the model given here. For example, nodes add links over time, not just when they join a network. Barabási discusses in his book how some elaborations of the original model still produce power laws (possibly with different exponents) while others do not.