Let p be a prime number. Then the integers mod p form a finite field.

The number of elements in a finite field must be a power of a prime, i.e. the order q = pn for some n. When n > 1, we can take the elements of our field to be polynomials of degree n − 1 with coefficients in the integers mod p.

Addition works just as you’d expect addition to work, adding coefficients mod p, but multiplication is a little more complicated. You multiply field elements by multiplying their polynomial representatives, but then you divide by an irreducible polynomial and take the remainder.

When n = 2, for some p you can define the field by adding an imaginary unit.

When you can and cannot adjoin an i

For some finite fields of order p, you can construct a field of order p² by joining an element i to the field, very much the way you form the complex numbers from the real numbers. For example, you can create a field with 49 elements by taking pairs of (a, b) of integers mod 7 and multiplying them as if they were a + bi. So

(a, b) * (c, d) = (ac − bd, ad + bc).

This is equivalent to choosing the polynomial x² + 1 as your irreducible polynomial and following every polynomial multiplication by taking the remainder modulo x² + 1.

This works for a field with 49 elements, but not for a field of 25 elements. That’s because over the integers mod 5 the polynomial x² + 1 already has a root. Two of them in fact: x = 2 or x = 3. So you could say that mod 5, i = 2. Or i = 3 if you prefer. You can still form a field of 25 elements by taking pairs of elements from a field of 5 elements, but you have to choose a different polynomial as your irreducible polynomial because x² + 1 is not irreducible because

x² + 1 = (x − 2)(x + 2)

when working over the integers mod 5. You could use

x² + x + 1

as your irreducible polynomial. To prove that this polynomial is irreducible mod 5, plug in the numbers 0, 1, 2, 3, and 4 and confirm that none of them make the polynomial equal 0.

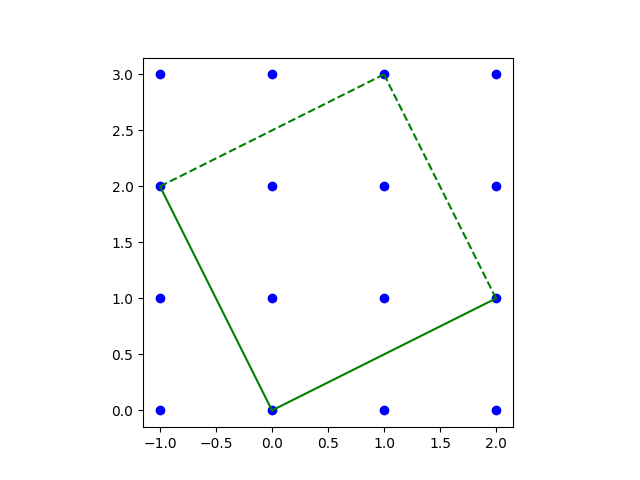

In general, you can create a field of order p² by adjoining an element i if and only if p = 3 mod 4.

Next we’ll look at an example of making a very large finite field even larger by adding an imaginary element.

Example from Ethereum

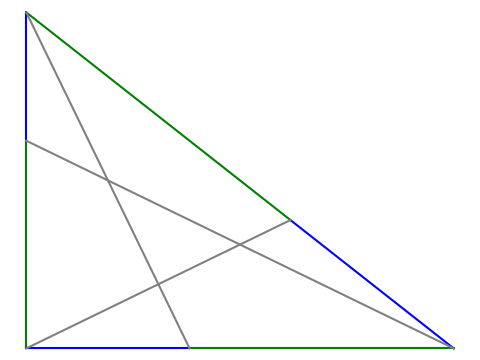

The Ethereum virtual machine has support for a pairing—more on that in a future post—of two elliptic curves, BN254 and alt_bn128. The BN254 curve is defined by

y² = x³ + 3

over the field Fp, the integers mod p, where

p = 21888242871839275222246405745257275088696311157297823662689037894645226208583.

The curve alt_bn128 is defined by

y² = x³ + 3/(9 + i)

over the field Fp[i], i.e. the field Fp, with an element i adjoined. Note the that last two digits of p are 83, and so p is congruent to 3 mod 4.

Special point on curve

The Ethereum documentation (EIP-197) singles out a particular point (x, y) on alt_bn128:

x = a + bi

y = c + di

where

a = 10857046999023057135944570762232829481370756359578518086990519993285655852781

b = 11559732032986387107991004021392285783925812861821192530917403151452391805634

c = 8495653923123431417604973247489272438418190587263600148770280649306958101930

d = 4082367875863433681332203403145435568316851327593401208105741076214120093531.

We will show that this point is on the curve as an exercise in working in the field Fp[i]. We’ll write Python code from scratch, not using any libraries, so all the details will be explicit.

def add(pair0, pair1, p):

a, b = pair0

c, d = pair1

return ((a + c) % p, (b + d) % p)

def mult(pair0, pair1, p):

a, b = pair0

c, d = pair1

return ((a*c - b*d) % p, (b*c + a*d) % p)

p = 21888242871839275222246405745257275088696311157297823662689037894645226208583

a = 10857046999023057135944570762232829481370756359578518086990519993285655852781

b = 11559732032986387107991004021392285783925812861821192530917403151452391805634

c = 8495653923123431417604973247489272438418190587263600148770280649306958101930

d = 4082367875863433681332203403145435568316851327593401208105741076214120093531

# Find (e, f) such that (e, f)*(9, 1) = (1, 0).

# 9e - f = 1

# e + 9f = 0

# Multiply first equation by 9 and add.

e = (9*pow(82, -1, p)) % p

f = (-e*pow(9, -1, p)) % p

prod = mult((e, f), (9, 1), p)

assert(prod[0] == 1 and prod[1] == 0)

y2 = mult((c, d), (c, d), p)

x3 = mult((a, b), mult((a, b), (a, b), p), p)

rhs = add(x3, mult((3, 0), (e, f), p), p)

assert(y2[0] == rhs[0])

assert(y2[1] == rhs[1])