I set up a GitHub account for a new employee this morning and spent a ridiculous amount of time proving that I’m human.

The captcha was to listen to three audio clips at a time and say which one contains bird sounds. This is a really clever test, because humans can tell the difference between real bird sounds and synthesized bird-like sounds. And we’re generally good at recognizing bird sounds even against a background of competing sounds. But some of these were ambiguous, and I had real birds chirping outside my window while I was doing the captcha.

You have to do 20 of these tests, and apparently you have to get all 20 right. I didn’t. So I tried again. On the last test I accidentally clicked the start-over button rather than the submit button. I wasn’t willing to listen to another 20 triples of audio clips, so I switched over to the visual captcha tests.

These kinds of tests could be made less annoying and more secure by using a Bayesian approach.

Suppose someone solves 19 out of 20 puzzles correctly. You require 20 out of 20, so you have them start over. When you do, you’re throwing away information. You require 20 more puzzles, despite the fact that they only missed one. And if a bot had solved say 8 out of 20 puzzles, you’d let it pass if it passes the next 20.

If you wipe your memory after every round of 20 puzzles, and allow unlimited do-overs, then any sufficiently persistent entity will almost certainly pass eventually.

Bayesian statistics reflects common sense. After someone (or something) has correctly solved 19 out of 20 puzzles designed to be hard for machines to solve, your conviction that this entity is human is higher than if they/it had solved 8 out of 20 correctly. You don’t need as much additional evidence in the first case as in the latter to be sufficiently convinced.

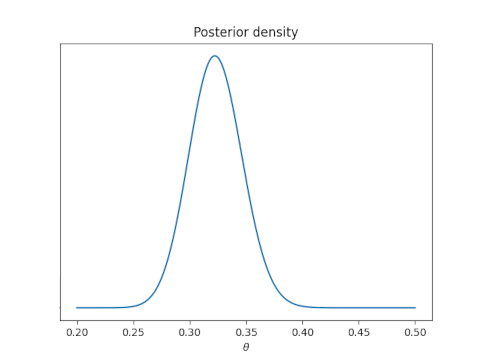

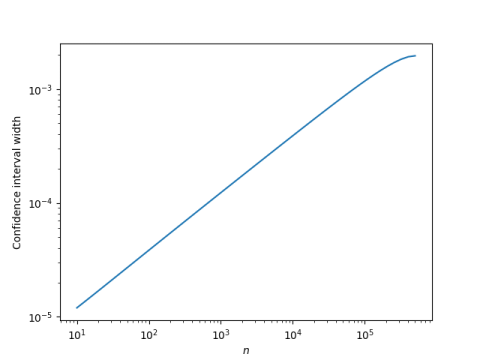

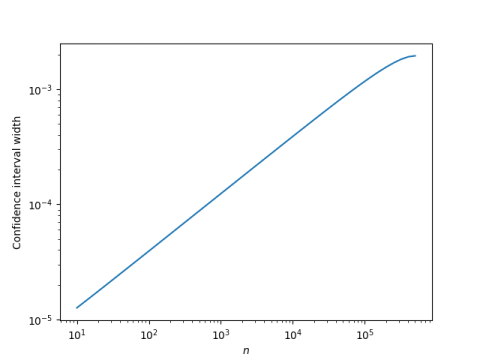

Here’s how a Bayesian captcha could work. You start out with some distribution on your probability θ that an entity is human, say a uniform distribution. You present a sequence of puzzles, recalculating your posterior distribution after each puzzle, until the posterior probability that this entity is human crosses some upper threshold, say 0.95, or some lower threshold, say 0.50. If the upper threshold is crossed, you decide the entity is likely human. If the lower threshold is crossed, you decide the entity is likely not human.

If solving 20 out of 20 puzzles correctly crosses your threshold of human detection, then after solving 19 out 20 correctly your posterior probability of humanity is close to the upper threshold and would only require a few more puzzles. And if an entity solved 8 out of 20 puzzles correctly, that may cross your lower threshold. If not, maybe only a few more puzzles would be necessary to reject the entity as non-human.

When I worked at MD Anderson Cancer Center we applied this approach to adaptive clinical trials. A clinical trial might stop early because of particularly good results or particularly bad results. Clinical trials are expensive, both in terms of human costs and financial costs. Rejecting poor treatments quickly, and sending promising treatments on to the next stage quickly, is both humane and economical.