Musician Adam Neely made a video asking “What is the fastest music humanly possible?” He emphasizes that he means the fastest music possible to hear, not the fastest to perform.

The video cites a psychology article [1] from 1894 that found that most people can reliably distinguish an inter-onset interval (time between notes) of 100 ms [2]. It also gives examples of music faster than this, such as a performance of Vivaldi with an inter-onset interval of 83 ms [3]. The limit seems to be greater than 50 ms because a pulse train with an inter-onset interval of 50 ms starts to sound like a 20 Hz pitch.

People are able to receive Morse code faster than this implies is possible. We will explain how this works, but first we need to convert words per minute to inter-onset interval length.

Morse code timing

Morse code is made of dots and dashes, but it is also made of spaces of three different lengths: the space between the dots and dashes representing a single letter, the space between letters, and the space between words.

According to an International Telecommunication Union standard:

- A dash is equal to three dots.

- The space between the signals forming the same letter is equal to one dot.

- The space between two letters is equal to three dots.

- The space between two words is equal to seven dots.

The same timing is referred to as standard in a US Army manual from 1957.

Notice that all the numbers above are odd. Since a dot or dash is always followed by a space, the duration of a dot or dash and its trailing space is always an even multiple of the duration of a dot.

If we think of a dot as a sixteenth note, Morse code is made of notes that are either sixteenth notes or three sixteenth notes tied together. Rests are equal to one, three, or seven sixteenths, and notes and rests must alternate. All notes start on an eighth note boundary, i.e. either on a down beat or an up beat but not in between.

Words per minute

Morse code speed is measured in words per minute. But what exactly is a “word”? Words have a variable number of letters, and even words with the same number of letters can have very different durations in Morse code.

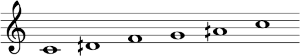

The most common standard is to use PARIS as the prototypical word. Ten words per minute, for example, means that dots and dashes are coming at you as fast as if someone were sending the word PARIS ten times per minute. Here’s a visualization of the code for PARIS with each square representing the duration of a dot.

This has the duration of 50 dots.
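
The count of 50 can be reproduced by adding up the pieces. This is a rough sketch rather than a reference implementation: `duration_in_dots` is a hypothetical helper, and the table holds only the five letters of PARIS.

```python
# Morse code for the letters in PARIS only.
MORSE = {"P": ".--.", "A": ".-", "R": ".-.", "I": "..", "S": "..."}

def duration_in_dots(word):
    """Duration of one word in dot units, including the trailing word space."""
    total = 0
    for letter in word:
        code = MORSE[letter]
        # Dots last 1 unit, dashes last 3.
        total += sum(1 if element == "." else 3 for element in code)
        # One-dot spaces between the elements of a letter.
        total += len(code) - 1
    total += 3 * (len(word) - 1)  # three-dot spaces between letters
    total += 7                    # seven-dot space after the word
    return total

print(duration_in_dots("PARIS"))  # 50
```

The letters contribute 31 dots, the four spaces between letters contribute 12, and the space after the word contributes 7.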

How does this relate to inter-onset interval? If each duration of a dot is an interval, then n words per minute corresponds to 50n intervals per minute, or 60/(50n) = 1.2/n seconds per interval.

This would imply that 12 wpm would correspond to an inter-onset interval of around 100 ms, pushing the limit of perception. But 12 wpm is a beginner speed. It’s not uncommon for people to receive Morse code at 30 wpm. The world record, set by Theodore Roosevelt McElroy in 1939, is 75.2 wpm.
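
To make the conversion concrete, here is a small Python sketch that turns words per minute into the length of a dot in milliseconds, assuming the 50-dot PARIS convention. The function name `dot_ms` is just a label chosen for this example.

```python
def dot_ms(wpm, dots_per_word=50):
    """Length of one dot in milliseconds at the given speed.

    Assumes the PARIS convention of 50 dots per word.
    """
    ms_per_minute = 60_000
    return ms_per_minute / (dots_per_word * wpm)

# Beginner speed, a common proficient speed, and the 1939 record.
for wpm in (12, 30, 75.2):
    print(f"{wpm} wpm: {dot_ms(wpm):.1f} ms per dot")
```

At 30 wpm a dot lasts 40 ms, and at the record speed it lasts about 16 ms, both well under the 100 ms figure.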

What’s going on?

In the preceding section I assumed a dot is an interval when calculating inter-onset interval length. In musical terms, this is saying a sixteenth note is an interval. But maybe we should count eighth notes as intervals. As noted before, every “note” (dot or dash) starts on a down beat or up beat. That doubles the interval to 2.4/n seconds at n words per minute. Still, that would say roughly 20 wpm (24 wpm if the limit is taken to be exactly 100 ms) is pushing the limit of perception, and we know people can listen faster than that.
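
The two ways of counting intervals can be compared by solving for the speed at which the interval shrinks to a given limit. This is a back-of-the-envelope sketch, assuming 50 dots per word and a 100 ms limit. The name `wpm_at_limit` is hypothetical.

```python
DOTS_PER_WORD = 50  # duration of PARIS, including the trailing word space

def wpm_at_limit(limit_ms, dots_per_interval):
    """Speed in wpm at which one interval lasts exactly limit_ms."""
    ms_per_minute = 60_000
    return ms_per_minute * dots_per_interval / (DOTS_PER_WORD * limit_ms)

print(wpm_at_limit(100, 1))  # 12.0, counting each dot (sixteenth note)
print(wpm_at_limit(100, 2))  # 24.0, counting each eighth note
```

Counting eighth notes doubles the speed at which the 100 ms limit is reached, but it still falls short of the speeds people actually copy.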

You don’t have to hear with the precision of the diagram above in order to recognize letters. And you have context clues. Maybe you can’t hear the difference between “E E E” (three separate dots) and “S” (three dots in one letter), but in ordinary prose the latter is far more likely.

At some skill level people quit hearing individual letters and start hearing words, much like experienced readers see words rather than letters. I’ve heard that this transition happens somewhere between 20 wpm and 30 wpm. That would be consistent with the estimate above that 20 wpm is pushing the limit of perception letter by letter. But 30 words per minute is doable. It’s impressive, but not unheard of.

What I find hard to believe is that there were intelligence officers, such as Terry Jackson, who could copy encrypted text at 50 wpm. Here a “word” is a five-letter code group. There are millions of possible code groups [4], all equally likely, and so it would be impossible to become familiar with particular code groups the way one can become familiar with common words. Maybe they learned to hear pairs or triples of letters.

[1] Thaddeus L. Bolton. Rhythm. The American Journal of Psychology. Vol. VI. No. 2. January, 1894.

[2] Interonset is not commonly hyphenated, but I’ve hyphenated it here for clarity.

[3] The movement Summer from Vivaldi’s The Four Seasons performed at 180 bpm, which corresponds to 720 sixteenth notes per minute, each 83 ms long.

[4] If a code group consisted entirely of English letters, there would be 26^5 = 11,881,376 possible groups. If a code group could contain digits as well, there would be 36^5 = 60,466,176 possible groups.