Electrical and mechanical oscillations satisfy analogous equations. This is the basis of using the word “analog” in electronics. You could study a mechanical system by building an analogous circuit and measuring that circuit in a lab.

Mass, dashpot, spring

Years ago I wrote a series of four posts about mechanical vibrations:

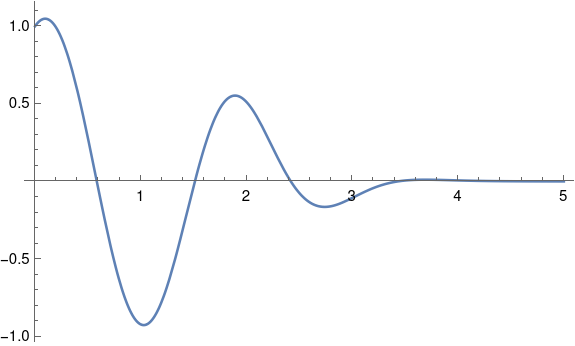

- Free, undamped vibrations

- Free, damped vibrations

- Forced, undamped vibrations

- Forced, damped vibrations

Everything in these posts maps over to electrical vibrations with a change of notation.

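That series looked at the differential equation

$$ m u'' + \gamma u' + k u = F(t) $$

where u is the displacement, m is mass, γ is damping from a dashpot, k is the stiffness of a spring, and F(t) is the applied force (zero for free vibrations).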

Inductor, resistor, capacitor

Now we replace our mass, dashpot, and spring with an inductor, resistor, and capacitor.

Imagine a circuit with an L henry inductor, an R ohm resistor, and a C farad capacitor in series. Let Q(t) be the charge on the capacitor in coulombs at time t, and let E(t) be an applied voltage, e.g. an AC power source.

Charge formulation

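One can use Kirchhoff’s voltage law to derive

$$ L Q'' + R Q' + \frac{1}{C} Q = E(t). $$

Here we have the correspondences

$$ u \leftrightarrow Q, \qquad m \leftrightarrow L, \qquad \gamma \leftrightarrow R, \qquad k \leftrightarrow \frac{1}{C}. $$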

So charge is analogous to position, inductance is analogous to mass, resistance is analogous to damping, and capacitance is analogous to the reciprocal of stiffness.

The reciprocal of capacitance is called elastance, so we can say elastance is analogous to stiffness.
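The correspondences above can be written down as a small conversion routine. This is only a sketch: the class and function names (`Mechanical`, `SeriesRLC`, `analogous_circuit`, `analogous_mechanical`) are made up for illustration, and the numerical values are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Mechanical:
    m: float      # mass
    gamma: float  # damping coefficient of the dashpot
    k: float      # spring stiffness


@dataclass
class SeriesRLC:
    L: float  # inductance in henries
    R: float  # resistance in ohms
    C: float  # capacitance in farads


def analogous_circuit(mech: Mechanical) -> SeriesRLC:
    """Map m -> L, gamma -> R, k -> 1/C."""
    return SeriesRLC(L=mech.m, R=mech.gamma, C=1.0 / mech.k)


def analogous_mechanical(circ: SeriesRLC) -> Mechanical:
    """Inverse map: L -> m, R -> gamma, 1/C (elastance) -> k."""
    return Mechanical(m=circ.L, gamma=circ.R, k=1.0 / circ.C)


# Arbitrary example values, chosen only to illustrate the mapping.
mech = Mechanical(m=2.0, gamma=0.5, k=8.0)
circuit = analogous_circuit(mech)
print(circuit)
```

Note that stiffness maps to the reciprocal of capacitance, so a stiffer spring corresponds to a smaller capacitor.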

Current formulation

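It’s more common to see the differential equation above written in terms of current I, the rate of change of charge: I = dQ/dt.

If we take the derivative of both sides of

$$ L Q'' + R Q' + \frac{1}{C} Q = E(t) $$

we get

$$ L I'' + R I' + \frac{1}{C} I = E'(t). $$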

Natural frequency

With mechanical vibrations, as shown here, the natural frequency is

$$ \omega_0 = \sqrt{\frac{k}{m}} $$

and with electrical oscillations this becomes

$$ \omega_0 = \frac{1}{\sqrt{LC}}. $$
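As a quick numerical illustration, the natural frequency is √(k/m) for the mechanical system and 1/√(LC) for the circuit, and the two agree when L = m and C = 1/k. The function names and parameter values below are illustrative assumptions, not part of the original posts.

```python
import math


def natural_frequency_mechanical(m: float, k: float) -> float:
    """Natural frequency sqrt(k/m) in radians per unit time."""
    return math.sqrt(k / m)


def natural_frequency_electrical(L: float, C: float) -> float:
    """Natural frequency 1/sqrt(LC) in radians per second."""
    return 1.0 / math.sqrt(L * C)


# Arbitrary example: m = 2, k = 8 and the analogous L = 2, C = 1/8.
w_mech = natural_frequency_mechanical(m=2.0, k=8.0)
w_elec = natural_frequency_electrical(L=2.0, C=1.0 / 8.0)
print(w_mech, w_elec)
```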

Steady state

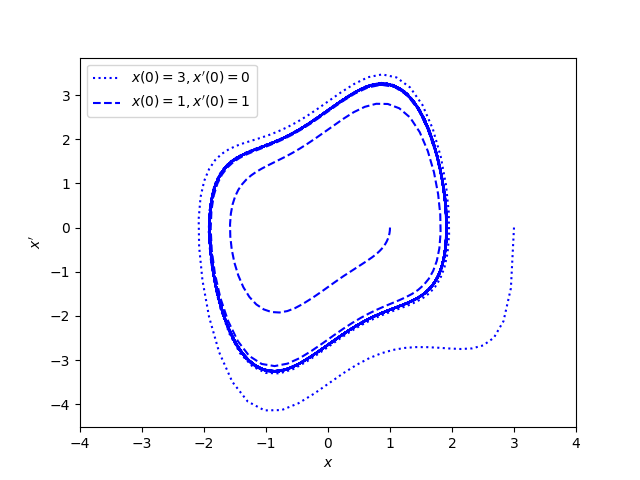

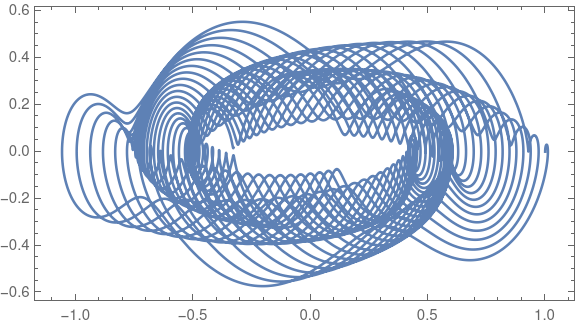

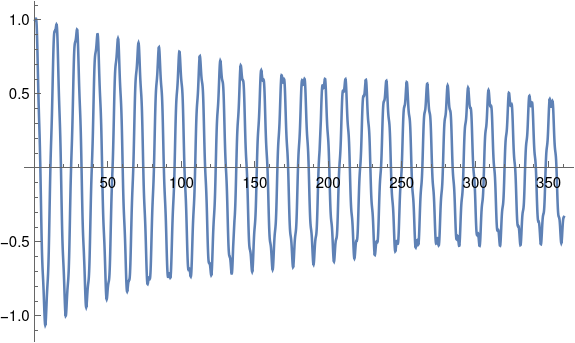

When a mechanical or electrical system is driven by a sinusoidal forcing function, the system eventually settles down to a solution that is proportional to a phase-shifted copy of the driving function.

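To be more explicit, the solution to the differential equation

$$ m u'' + \gamma u' + k u = F_0 \cos \omega t $$

has a transient component that decays exponentially and a steady state component proportional to cos(ωt-φ). The same is true of the equation

$$ L Q'' + R Q' + \frac{1}{C} Q = E_0 \cos \omega t. $$

Here F_0 and E_0 are the amplitudes of the driving force and the driving voltage. The proportionality constant is conventionally denoted 1/Δ and so the steady state solution is

$$ u = \frac{F_0}{\Delta} \cos(\omega t - \varphi) $$

for the mechanical case and

$$ Q = \frac{E_0}{\Delta} \cos(\omega t - \varphi) $$

for the electrical case.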

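The constant Δ satisfies

$$ \Delta^2 = m^2 (\omega_0^2 - \omega^2)^2 + \gamma^2 \omega^2 $$

for the mechanical system and

$$ \Delta^2 = L^2 (\omega_0^2 - \omega^2)^2 + R^2 \omega^2 $$

for the electrical system.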
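The sketch below evaluates Δ for either system and checks that the steady state form really does satisfy the differential equation for a unit-amplitude drive. The function names are hypothetical. The phase formula tan φ = γω / (k - mω²) is the standard one for this equation; it is not stated in the text above, so treat it as an added assumption.

```python
import math


def steady_state(inertia: float, damping: float, stiffness: float, omega: float):
    """Return (1/Delta, phi) for inertia*x'' + damping*x' + stiffness*x = cos(omega*t).

    Mechanical: inertia=m, damping=gamma, stiffness=k.
    Electrical: inertia=L, damping=R, stiffness=1/C.
    """
    omega0_sq = stiffness / inertia
    delta = math.sqrt(inertia**2 * (omega0_sq - omega**2) ** 2 + damping**2 * omega**2)
    # Standard phase lag (an assumption; not given in the text above).
    phi = math.atan2(damping * omega, stiffness - inertia * omega**2)
    return 1.0 / delta, phi


def residual(inertia, damping, stiffness, omega, t):
    """Plug x = (1/Delta) cos(omega*t - phi) into the ODE; should be near zero."""
    gain, phi = steady_state(inertia, damping, stiffness, omega)
    x = gain * math.cos(omega * t - phi)
    dx = -omega * gain * math.sin(omega * t - phi)
    ddx = -(omega**2) * x
    return inertia * ddx + damping * dx + stiffness * x - math.cos(omega * t)


# Arbitrary example values: m = 2, gamma = 0.5, k = 8, driven at omega = 1.
gain, phi = steady_state(2.0, 0.5, 8.0, omega=1.0)
print(gain, phi, residual(2.0, 0.5, 8.0, 1.0, t=0.7))
```

Calling `steady_state(L, R, 1.0 / C, omega)` gives the corresponding result for the circuit, which is the analogy in computational form.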

When the damping coefficient γ or the resistance R is small, the amplitude of the steady state solution is largest when the driving frequency ω is near the natural frequency ω0.
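One way to see this numerically is to scan driving frequencies and find where 1/Δ peaks. The sketch below uses a brute-force grid search with made-up parameter values, and the helper names are hypothetical. For comparison, setting the derivative of Δ² with respect to ω to zero gives a peak at ω² = ω0² - γ²/(2m²), which is a standard result not stated above and which approaches ω0 as γ shrinks.

```python
import math


def amplitude_gain(m: float, gamma: float, k: float, omega: float) -> float:
    """1/Delta, using (k - m w^2)^2 = m^2 (w0^2 - w^2)^2."""
    return 1.0 / math.sqrt((k - m * omega**2) ** 2 + (gamma * omega) ** 2)


def peak_frequency(m: float, gamma: float, k: float, n: int = 20001) -> float:
    """Grid search for the driving frequency in (0, 2*omega0] that maximizes 1/Delta."""
    omega0 = math.sqrt(k / m)
    grid = [2.0 * omega0 * i / (n - 1) for i in range(1, n)]
    return max(grid, key=lambda w: amplitude_gain(m, gamma, k, w))


# Arbitrary example values with light damping.
m, gamma, k = 2.0, 0.5, 8.0
omega0 = math.sqrt(k / m)
peak = peak_frequency(m, gamma, k)
predicted = math.sqrt(omega0**2 - gamma**2 / (2 * m**2))
print(omega0, peak, predicted)
```

For the circuit, substitute L for m, R for gamma, and 1/C for k.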

More on damped, driven oscillations here.