Randomized clinical trials essentially flip a coin to assign patients to treatment arms. Outcome-adaptive randomization “bends” the coin to favor what appears to be the better treatment at the time each randomized assignment is made. The method aims to treat more patients in the trial effectively, and on average it succeeds.

However, looking only at the average number of patients assigned to each treatment arm conceals the fact that the number of patients assigned to each arm can be surprisingly variable compared to equal randomization.

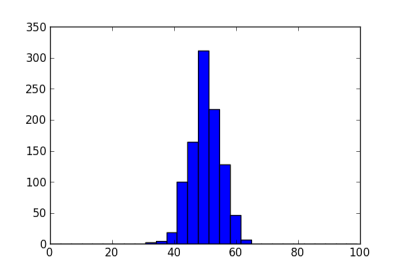

Suppose we have 100 patients to enroll in a clinical trial. If we assign each patient to a treatment arm with probability 1/2, there will be about 50 patients on each treatment. The following histogram shows the number of patients assigned to the first treatment arm in 1000 simulations. The standard deviation is about 5.
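The figure of about 5 agrees with the binomial standard deviation, sqrt(100 × 1/2 × 1/2) = 5. A minimal sketch of the simulation follows. The function name, seed, and use of Python's standard library are illustrative choices, not the code behind the original histogram.

```python
import random
import statistics

def simulate_equal_randomization(n_patients=100, n_trials=1000, seed=0):
    """Return the number of patients on the first arm in each simulated trial."""
    rng = random.Random(seed)  # seed is arbitrary, fixed only for repeatability
    counts = []
    for _ in range(n_trials):
        # Each patient goes to the first arm with probability 1/2.
        counts.append(sum(rng.random() < 0.5 for _ in range(n_patients)))
    return counts

counts = simulate_equal_randomization()
print("mean patients on first arm:", statistics.mean(counts))
print("standard deviation:", statistics.pstdev(counts))
```

The sample standard deviation will wobble a little from run to run, but it should stay close to 5.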

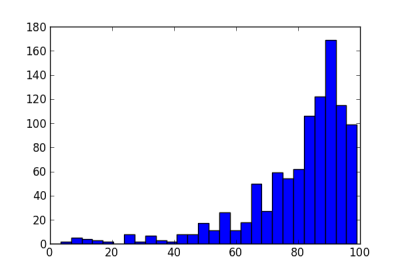

Next we let the randomization probability vary. Suppose the true probability of response is 50% on one arm and 70% on the other. We model the probability of response on each arm as a beta distribution, starting from a uniform prior. We randomize each patient to an arm with probability equal to the posterior probability that the arm has the higher response rate. The histogram below shows the number of patients assigned to the better treatment in 1000 simulations.

The standard deviation in the number of patients is now about 17. Note that while most trials assign 50 or more patients to the better treatment, some trials in this simulation put fewer than 20 patients on this treatment. Not only will these trials treat patients less effectively, they will also have low statistical power (as will the trials that put nearly all the patients on the better arm).
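The adaptive scheme described above can be sketched in a few lines. This version relies on a shortcut: drawing one sample from each arm's beta posterior and assigning the patient to the arm with the larger draw selects each arm with probability equal to the posterior probability that it has the higher response rate. The function name and seed are hypothetical, and this is one way to reproduce the setup, not necessarily how the original simulation was written.

```python
import random
import statistics

def simulate_adaptive_trial(rng, p_worse=0.5, p_better=0.7, n_patients=100):
    """Simulate one adaptively randomized trial.

    Returns the number of patients assigned to the better arm.
    """
    true_rates = [p_worse, p_better]
    successes = [0, 0]
    failures = [0, 0]
    for _ in range(n_patients):
        # Posterior on each arm is Beta(1 + successes, 1 + failures),
        # which starts as the uniform prior Beta(1, 1).
        draws = [rng.betavariate(1 + successes[k], 1 + failures[k])
                 for k in range(2)]
        # The arm with the larger draw is chosen with probability equal to
        # the posterior probability that it has the higher response rate.
        arm = 1 if draws[1] > draws[0] else 0
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return successes[1] + failures[1]

rng = random.Random(0)  # arbitrary seed for repeatability
on_better_arm = [simulate_adaptive_trial(rng) for _ in range(1000)]
print("mean on better arm:", statistics.mean(on_better_arm))
print("standard deviation:", statistics.pstdev(on_better_arm))
print("trials with fewer than 20 on better arm:",
      sum(n < 20 for n in on_better_arm))
```

Exact figures depend on the seed, but the spread should be several times wider than under equal randomization.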

The reason for this volatility is that the method can easily be misled by early outcomes. With one or two early failures on an arm, the method could assign more patients to the other arm and not give the first arm a chance to redeem itself.

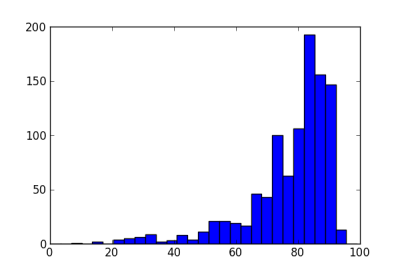

Because of this dynamic, various methods have been proposed to add “ballast” to adaptive randomization. See a comparison of three such methods here. These methods reduce the volatility in adaptive randomization, but do not eliminate it. For example, the following histogram shows the effect of adding a burn-in period to the example above, randomizing the first 20 patients equally.

The standard deviation is now 13.8, less than without the burn-in period, but still large compared to a standard deviation of 5 for equal randomization.

Another approach is to transform the randomization probability. If we use an exponential tuning parameter of 0.5, the sample standard deviation of the number of patients on the better arm is 13.4, essentially the same as with the burn-in period. If we combine a burn-in period of 20 and an exponential parameter of 0.5, the sample standard deviation is 11.7, still more than twice that of equal randomization.
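The sketch below shows one way to experiment with both kinds of ballast. It rests on assumptions not spelled out above: the exponential tuning parameter c is taken to map the posterior probability q to q^c / (q^c + (1 − q)^c), and the burn-in is taken to be independent fair coin flips. The helper names are hypothetical. The posterior probability is computed with a closed-form sum that is valid when the beta parameters are integers, as they are when starting from a uniform prior.

```python
import math
import random
import statistics

def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

def prob_second_better(a1, b1, a2, b2):
    """P(theta2 > theta1) for theta1 ~ Beta(a1, b1), theta2 ~ Beta(a2, b2).

    Closed-form sum; requires a2 to be a positive integer.
    """
    base = log_beta(a1, b1)
    total = 0.0
    for i in range(a2):
        total += math.exp(log_beta(a1 + i, b1 + b2) - math.log(b2 + i)
                          - log_beta(1 + i, b2) - base)
    return total

def simulate_trial(rng, burn_in=0, power=1.0, rates=(0.5, 0.7), n_patients=100):
    """Return the number of patients assigned to the second (better) arm."""
    s = [0, 0]  # successes per arm
    f = [0, 0]  # failures per arm
    for i in range(n_patients):
        if i < burn_in:
            p = 0.5  # assumed burn-in: fair coin flips
        else:
            q = prob_second_better(1 + s[0], 1 + f[0], 1 + s[1], 1 + f[1])
            q = min(max(q, 0.0), 1.0)  # guard against rounding error
            # Assumed form of the exponential tuning; power=1 leaves q unchanged.
            p = q ** power / (q ** power + (1.0 - q) ** power)
        arm = 1 if rng.random() < p else 0
        if rng.random() < rates[arm]:
            s[arm] += 1
        else:
            f[arm] += 1
    return s[1] + f[1]

def sd_better_arm(burn_in, power, n_trials=200, seed=0):
    """Sample standard deviation of patients on the better arm."""
    rng = random.Random(seed)
    counts = [simulate_trial(rng, burn_in, power) for _ in range(n_trials)]
    return statistics.pstdev(counts)

# (burn-in, power) settings discussed in the text
results = {}
for burn_in, power in [(20, 1.0), (0, 0.5), (20, 0.5)]:
    results[(burn_in, power)] = sd_better_arm(burn_in, power)
    print(f"burn-in={burn_in}, power={power}: sd = {results[(burn_in, power)]:.1f}")
```

The demo uses 200 trials per setting to keep the run time short. Raising `n_trials` to 1000 matches the simulation size used above and gives steadier estimates.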