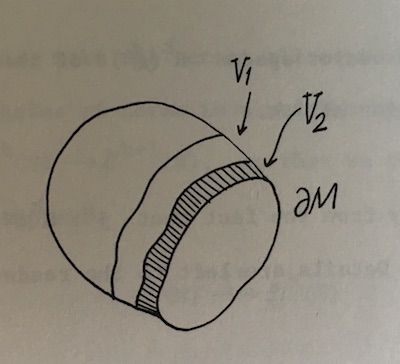

Spheres and balls are examples of common words that take on a technical meaning in math, as I wrote about here. Recall the unit sphere in n dimensions is the set of points with distance 1 from the origin. The unit ball is the set of points of distance less than or equal to 1 from the origin. The sphere is the surface of the ball.

Integrating a polynomial in several variables over a ball or sphere is easy. For example, take the polynomial xy² + 5x²z² in three variables. The integral of the first term, xy², is zero. If any variable in a term has an odd exponent, then the integral of that term is zero by symmetry. The integral over half of the sphere (ball) will cancel out the integral over the opposite half of the sphere (ball). So we only need to be concerned with terms like 5x²z².

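Now in n dimensions, suppose the exponents of $x_1, x_2, \ldots, x_n$ are $a_1, a_2, \ldots, a_n$ respectively. If any of the a's are odd, the integral over the sphere or ball will be zero, so we assume all the a's are even. In that case the integral over the unit sphere S, taken with respect to surface measure σ, is simply

$$\int_{S} x_1^{a_1} x_2^{a_2} \cdots x_n^{a_n} \, d\sigma = 2 B(b_1, b_2, \ldots, b_n)$$

where

$$B(b_1, b_2, \ldots, b_n) = \frac{\Gamma(b_1)\, \Gamma(b_2) \cdots \Gamma(b_n)}{\Gamma(b_1 + b_2 + \cdots + b_n)}$$

is the multivariate beta function and for each i we define $b_i = (a_i + 1)/2$. When n = 2 then B is the (ordinary) beta function.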
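Here is a minimal Python sketch of the sphere formula. It assumes the integral of a monomial with all even exponents is 2 B(b1, …, bn), with B the product of the Γ(bi) divided by Γ(b1 + … + bn), as in [1]. The function names and the dictionary representation of a polynomial are illustrative choices, not part of the original result.

```python
from math import gamma, pi, prod


def multivariate_beta(bs):
    """Product of gamma(b_i) divided by gamma of the sum of the b_i."""
    return prod(gamma(b) for b in bs) / gamma(sum(bs))


def sphere_monomial_integral(exponents):
    """Integral of x1^a1 * ... * xn^an over the unit sphere in n dimensions."""
    # Any odd exponent makes the integral vanish by symmetry.
    if any(a % 2 for a in exponents):
        return 0.0
    bs = [(a + 1) / 2 for a in exponents]
    return 2 * multivariate_beta(bs)


def sphere_polynomial_integral(terms):
    """terms maps exponent tuples to coefficients (a hypothetical encoding)."""
    return sum(c * sphere_monomial_integral(e) for e, c in terms.items())


# The example polynomial xy^2 + 5x^2z^2 in three variables.
poly = {(1, 2, 0): 1, (2, 0, 2): 5}
print(sphere_polynomial_integral(poly))  # compare with 4*pi/3
print(4 * pi / 3)
```

Only the 5x²z² term contributes: its b's are 3/2, 1/2, 3/2, so the integral is 5 · 2 · Γ(3/2)Γ(1/2)Γ(3/2)/Γ(7/2) = 4π/3.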

Note that the dimension n does not appear explicitly in the formula for the integral over the unit sphere; it enters only through the number of arguments of B.

The integral over the unit ball is

$$\frac{2 B(b_1, b_2, \ldots, b_n)}{a_1 + a_2 + \cdots + a_n + n}$$

which is proportional to the integral over the sphere, where the proportionality constant depends on the sum of the exponents (the original exponents, the a's, not the b's) and the dimension n.
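The ball formula can be sketched the same way. This assumes the ball integral of a monomial is the sphere integral, 2 B(b1, …, bn), divided by a1 + … + an + n, as in [1]. The function name is an illustrative choice.

```python
from math import gamma, pi, prod


def ball_monomial_integral(exponents):
    """Integral of x1^a1 * ... * xn^an over the unit ball in n dimensions."""
    # Any odd exponent makes the integral vanish by symmetry.
    if any(a % 2 for a in exponents):
        return 0.0
    n = len(exponents)
    bs = [(a + 1) / 2 for a in exponents]
    # Sphere integral: twice the multivariate beta function of the b's.
    sphere = 2 * prod(gamma(b) for b in bs) / gamma(sum(bs))
    # The proportionality constant uses the original exponents and n.
    return sphere / (sum(exponents) + n)


# 5x^2z^2 over the unit ball in three dimensions.
print(5 * ball_monomial_integral((2, 0, 2)))  # compare with 4*pi/21
print(4 * pi / 21)
```

For 5x²z² the exponents sum to 4 and n = 3, so the sphere value 4π/3 is divided by 7.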

Note that if we integrate the constant polynomial 1 over the unit sphere, we get the surface area of the unit sphere, and if we integrate it over the unit ball, we get the volume of the unit ball.
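As a check, set every exponent to zero, so that each $b_i = 1/2$, and use $\Gamma(1/2) = \sqrt{\pi}$. Assuming the sphere integral is twice the multivariate beta function and the ball integral divides that by the sum of the exponents plus n, this recovers the familiar area and volume formulas:

$$\text{area} = \frac{2\,\Gamma(1/2)^n}{\Gamma(n/2)} = \frac{2\pi^{n/2}}{\Gamma(n/2)}, \qquad \text{volume} = \frac{\text{area}}{n} = \frac{\pi^{n/2}}{\Gamma(n/2 + 1)}$$

For n = 3 these give 4π and 4π/3.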

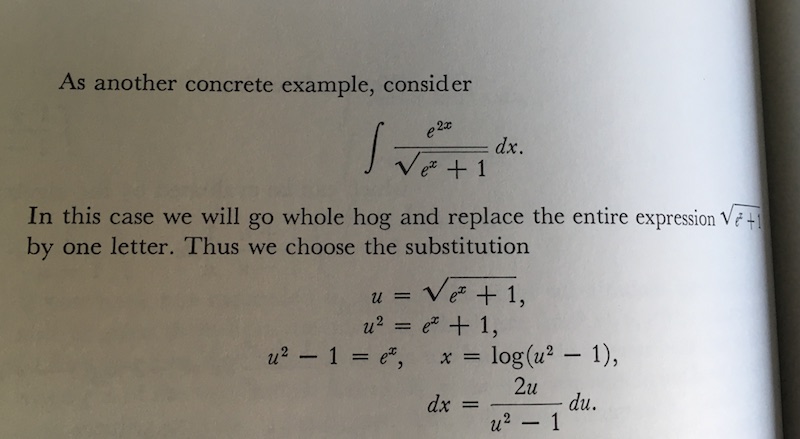

You can find a derivation for the integral results above in [1]. The proof is basically Liouville’s trick for integrating the normal distribution density, but backward. Instead of going from rectangular to polar coordinates, you introduce a normal density and go from polar to rectangular coordinates.
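Here is a sketch of that argument for a monomial $P(x) = x_1^{a_1} \cdots x_n^{a_n}$ with all exponents even. The labels P, r, S, and σ are notation chosen here. Evaluate the integral of $P(x)\, e^{-|x|^2}$ over all of n-dimensional space in two ways. In rectangular coordinates it factors into one-dimensional integrals:

$$\int_{\mathbb{R}^n} P(x)\, e^{-|x|^2}\, dx = \prod_{i=1}^n \int_{-\infty}^{\infty} t^{a_i} e^{-t^2}\, dt = \prod_{i=1}^n \Gamma(b_i)$$

In polar coordinates, P is homogeneous of degree $a_1 + \cdots + a_n$, so

$$\int_{\mathbb{R}^n} P(x)\, e^{-|x|^2}\, dx = \int_0^\infty r^{a_1 + \cdots + a_n + n - 1} e^{-r^2}\, dr \int_S P \, d\sigma = \frac{1}{2}\, \Gamma(b_1 + \cdots + b_n) \int_S P \, d\sigma$$

since $b_1 + \cdots + b_n = (a_1 + \cdots + a_n + n)/2$. Equating the two expressions gives the sphere integral as twice the product of the $\Gamma(b_i)$ divided by $\Gamma(b_1 + \cdots + b_n)$. The ball result follows by integrating $r^{a_1 + \cdots + a_n + n - 1}$ from 0 to 1 instead, which contributes the factor $1/(a_1 + \cdots + a_n + n)$.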

[1] Gerald B. Folland, How to Integrate a Polynomial over a Sphere. The American Mathematical Monthly, Vol. 108, No. 5 (May, 2001), pp. 446-448.