The other day I heard someone suggest that a good cocktail party definition of cryptography is puzzle making. Cryptographers create puzzles that are easy to solve given the key, but ideally impossible without the key.

Linearity is very useful in solving puzzles, and so a puzzle maker would like to create functions that are as far from linear as possible. That is why cryptographers are interested in perfectly nonlinear functions (PNs) and almost perfect nonlinear functions (APNs), which we will explore here.

The functions we are most interested in map a set of bits to a set of bits. To formalize things, we will look at maps between two finite Abelian groups A and B. If we look at lists of bits with addition defined as component-wise XOR [1], we have an Abelian group, but the ideas below apply to other kinds of Abelian groups as well.

We will measure the degree of nonlinearity of a function F from A to B by the size of the sets

where a and x are in A and b is in B [2].

If F is a linear function, F(a + x) = F(a) + F(x), and so δ(a, b) simplifies to

which equals all of the domain A, if F(a) = b. So for linear functions, the set δ(a, b) is potentially as large as possible, i.e. all of A. Perfectly nonlinear functions will be functions where the sets δ(a, b) are as small as possible, and almost perfectly nonlinear functions will be functions were δ(a, b) is as small as possible in a given context.

The uniformity of a function F is defined by

The restriction a ≠ 0 is necessary since otherwise the uniformity of every function would be the same.

For linear functions, the uniformity is as large as possible, i.e. |A|. As we will show below, a nonlinear function can have maximum uniformity as well, but some nonlinear functions have smaller uniformity. So some nonlinear functions are more nonlinear than others.

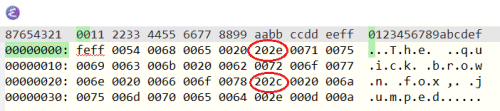

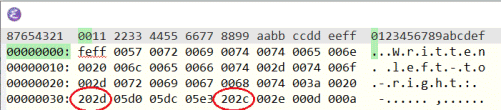

We will compute the uniformity of a couple functions, mapping 4 bits to 1 bit, with the following Python code.

A = range(16)

B = range(2)

nonZeroA = range(1, 16)

def delta(f, a, b):

return {x for x in A if f(x^a) ^ f(x) == b}

def uniformity(f):

return max(len(delta(f, a, b)) for a in nonZeroA for b in B)

We could look at binary [3] functions with domains and ranges of different sizes by changing the definitions of A, B, and nonZeroA.

Note that in our groups, addition is component-wise XOR, denoted by ^ in the code above, and that subtraction is the same as addition since XOR is its own inverse. Of course in general addition would be defined differently and subtraction would not be the same as addition. When working over a binary field, things are simpler, sometimes confusingly simpler.

We want to compute the uniformity of two functions. Given bits x0, x1, x2, and x3, we define

F(x0, x1, x2, x3) = x0 x1

and

G(x0, x1, x2, x3) = x0 x1 + x2 x3.

We can implement these in Python as follows.

def F(x):

x = [c == '1' for c in format(x, "04b")]

return x[0] and x[1]

def G(x):

x = [c == '1' for c in format(x, "04b")]

return (x[0] and x[1]) ^ (x[2] and x[3])

Next we compute the uniformity of the two functions.

print( uniformity(F) )

print( uniformity(G) )

This shows that the uniformity of F is 16 and the uniformity of G is 8. Remember that functions with smaller uniformity are considered more nonlinear. Both functions F and G are nonlinear, but G is more nonlinear in the sense defined here.

To see that F is nonlinear, let x = (1, 0, 0, 0) and y = (0, 1, 0, 0).

1 = F(x + y) ≠ F(x) + F(y) = 0.

It turns out that 8 is the smallest possible uniformity for a function from 4 bits to 1 bit, and the function G is said to be perfectly nonlinear.

I haven’t defined perfect nonlinearity or almost perfect nonlinearaity precisely, only saying that they have something to do with maximum nonlinearity (minimum uniformity) in some context. I may say more about that context in a future post.

Related posts

[1] XOR stands for exclusive or. For Boolean variables p and q, p XOR q is true if either p is true or q is true, but not both. The component-wise XOR of two binary numbers sets each bit of the result to the XOR of the corresponding input bits. For example, 1001 XOR 1010 = 0011.

[2] This definition comes from Perfect nonlinear functions and cryptography by Céline Blondeau and Kaisa Nyberg.

[3] The next post replaces binary numbers with base 5 and other bases.

![Mathematica plot of Cos[2 theta/3]](https://www.johndcook.com/polar_mmca.png)