This post will present a couple of asymptotic series, explain how to use them, and point to some applications.

The most common special function in applications, at least in my experience, is the gamma function Γ(z). It’s often easier to work with the logarithm of the gamma function than to work with the gamma function directly, so one of the asymptotic series we’ll look at is the series for log Γ(z).

Another very common special function in applications is the error function erf(z). The error function and its relationship to the normal probability distribution are explained here. Even though the gamma function is more common, we’ll start with the asymptotic series for the error function because it is a little simpler.

Error function

We actually present the series for the complementary error function erfc(z) = 1 – erf(z). (Why have a separate name for erfc when it’s so closely related to erf? Sometimes erfc is easier to work with mathematically. Also, sometimes numerical difficulties require separate software for evaluating erf and erfc as explained here.)
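The numerical difficulty is easy to see in floating point. Here is a small sketch using Python's standard `math.erf` and `math.erfc`; the helper name `erfc_two_ways` is hypothetical. For large z, erf(z) is so close to 1 that computing 1 − erf(z) cancels away every significant digit, while a routine that evaluates erfc directly keeps full relative precision.

```python
import math

def erfc_two_ways(z):
    """Return (1 - erf(z), erfc(z)) so the two computations can be compared."""
    naive = 1.0 - math.erf(z)   # subtracts two nearly equal numbers for large z
    direct = math.erfc(z)       # evaluated directly, no cancellation
    return naive, direct

for z in [1.0, 3.0, 5.0, 7.0]:
    naive, direct = erfc_two_ways(z)
    print(f"z = {z}: 1 - erf(z) = {naive:.6e}   erfc(z) = {direct:.6e}")
```

At z = 7, erf(z) rounds to exactly 1.0 in double precision, so 1 − erf(z) comes out as 0, even though erfc(7) is roughly 4 × 10⁻²³.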

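The series is

$$\sqrt{\pi}\, z\, e^{z^2} \operatorname{erfc}(z) \sim 1 + \sum_{m=1}^{\infty} (-1)^m \frac{(2m-1)!!}{(2z^2)^m}$$

If you’re unfamiliar with the n!! notation, see this explanation of double factorial.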

Note that the series has a squiggle ~ instead of an equal sign. That is because the partial sums of the right side do not converge to the left side. In fact, the partial sums diverge for any z. Instead, if you take any fixed partial sum you obtain an approximation of the left side that improves as z increases.
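Both behaviors can be checked numerically. The sketch below assumes the series takes the form of A&S 7.1.23, i.e. partial sums of 1 + Σ (−1)ᵐ (2m−1)!!/(2z²)ᵐ approximating √π z e^{z²} erfc(z). The function names `erfc_partial_sum` and `scaled_erfc` are hypothetical. Each term is built from the previous one by multiplying by −(2m−1)/(2z²), which avoids computing double factorials explicitly.

```python
import math

def erfc_partial_sum(z, n_terms):
    """Partial sum 1 + sum_{m=1}^{n_terms} (-1)^m (2m-1)!! / (2 z^2)^m."""
    total = 1.0
    term = 1.0
    for m in range(1, n_terms + 1):
        # term_m = term_{m-1} * (-(2m - 1) / (2 z^2))
        term *= -(2 * m - 1) / (2 * z * z)
        total += term
    return total

def scaled_erfc(z):
    """Left side of the series: sqrt(pi) * z * exp(z^2) * erfc(z)."""
    return math.sqrt(math.pi) * z * math.exp(z * z) * math.erfc(z)

# For fixed z the partial sums eventually blow up as n grows,
# but for a fixed n the approximation gets better as z grows.
for n in [0, 2, 5, 10, 20]:
    print(n, erfc_partial_sum(2.0, n), erfc_partial_sum(10.0, n))
print("exact:", scaled_erfc(2.0), scaled_erfc(10.0))
```

At z = 2 the terms shrink until m is around 4 or 5 and then grow without bound, which is the divergence described above. At z = 10 the same partial sums stay close to the exact value much longer.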

The series above is valid for any complex value of z as long as |arg(z)| < 3π/4. However, the error term is easier to describe if z is real. In that case, when you truncate the infinite sum at some point, the error is smaller in absolute value than the first term left out, and it has the same sign as that term. So, for example, if you drop the sum entirely and keep only the “1” term on the right side, the error is negative and its absolute value is less than 1/(2z²).
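This remainder rule can be spot-checked for a few real values of z. A minimal sketch, again assuming the A&S 7.1.23 form of the series and measuring the error as (exact value − partial sum); `remainder_and_next_term` is a hypothetical helper that also returns the first omitted term so the two can be compared.

```python
import math

def scaled_erfc(z):
    """Left side of the series: sqrt(pi) * z * exp(z^2) * erfc(z)."""
    return math.sqrt(math.pi) * z * math.exp(z * z) * math.erfc(z)

def remainder_and_next_term(z, n_terms):
    """Truncate 1 + sum_m (-1)^m (2m-1)!! / (2z^2)^m after m = n_terms.

    Returns (error, first omitted term), where error = exact - partial sum.
    """
    total, term = 1.0, 1.0
    for m in range(1, n_terms + 1):
        term *= -(2 * m - 1) / (2 * z * z)
        total += term
    # The first omitted term is the (n_terms + 1)-th one.
    next_term = term * -(2 * n_terms + 1) / (2 * z * z)
    return scaled_erfc(z) - total, next_term

# Keeping only the "1" term (n_terms = 0): the error should be negative
# and smaller in magnitude than 1 / (2 z^2).
for z in [2.0, 3.0, 5.0]:
    err, nxt = remainder_and_next_term(z, 0)
    print(f"z = {z}: error = {err:.3e}, first omitted term = {nxt:.3e}")
```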

One way this series is used in practice is to bound the tails of the normal distribution function. A slightly more involved application can be found here.
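Here is a sketch of one way to get such bounds; it is an illustration, not necessarily the derivation used elsewhere. For a standard normal Z, P(Z > x) = erfc(x/√2)/2. Substituting z = x/√2 and applying the sign rule: keeping only the “1” term gives an upper bound, and keeping 1 − 1/(2z²) gives a lower bound (the next term, 3/(4z⁴), is positive). Both simplify to the classic bounds φ(x)/x · (1 − 1/x²) < P(Z > x) < φ(x)/x, where φ is the standard normal density. The function names are hypothetical.

```python
import math

def normal_tail(x):
    """P(Z > x) for a standard normal Z, computed via erfc."""
    return 0.5 * math.erfc(x / math.sqrt(2))

def normal_tail_bounds(x):
    """Lower and upper bounds on P(Z > x) for x > 0 from two partial sums."""
    phi = math.exp(-x * x / 2) / math.sqrt(2 * math.pi)  # standard normal density
    upper = phi / x                   # keep only the "1" term
    lower = phi / x * (1 - 1 / x**2)  # keep 1 - 1/(2 z^2) with z = x / sqrt(2)
    return lower, upper

for x in [1.0, 2.0, 4.0, 8.0]:
    lo, hi = normal_tail_bounds(x)
    print(f"x = {x}: {lo:.4e} <= {normal_tail(x):.4e} <= {hi:.4e}")
```

The bounds tighten as x grows, since their ratio is 1 − 1/x².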

Log gamma

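The next series is the asymptotic series for log Γ(z).

$$\log \Gamma(z) \sim \left(z - \tfrac{1}{2}\right)\log z - z + \tfrac{1}{2}\log(2\pi) + \frac{1}{12z} - \frac{1}{360z^3} + \frac{1}{1260z^5} - \cdots$$

If you throw out all the terms involving powers of 1/z, you get Stirling’s approximation.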
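Stirling’s approximation in log form is (z − ½) log z − z + ½ log(2π). A short sketch comparing it to Python’s built-in `math.lgamma` (the name `stirling_log_gamma` is hypothetical). Because the first omitted term is 1/(12z), the error should be positive and below 1/(12z).

```python
import math

def stirling_log_gamma(z):
    """Stirling's approximation: (z - 1/2) log z - z + (1/2) log(2 pi)."""
    return (z - 0.5) * math.log(z) - z + 0.5 * math.log(2 * math.pi)

for z in [1.0, 10.0, 100.0]:
    err = math.lgamma(z) - stirling_log_gamma(z)
    print(f"z = {z}: error = {err:.6e}, 1/(12z) = {1 / (12 * z):.6e}")
```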

As before, the partial sums on the right side diverge for any z, but if you truncate the series, you get an approximation for the left side that improves as z increases. And as before, the series is valid for complex z, but the error term is simpler to describe when z is real. Here complex z must satisfy |arg(z)| < π. If z is real and positive, the approximation error is smaller in absolute value than the first term left out and has the same sign as that term.
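A minimal sketch of the truncated series with correction terms, assuming the first four coefficients 1/12, −1/360, 1/1260, −1/1680 from A&S 6.1.41. The names `COEFFS`, `log_gamma_asymptotic` and `first_omitted_term` are hypothetical. The loop at the end checks the error rule for real positive z against `math.lgamma`.

```python
import math

# Coefficients of 1/z, 1/z^3, 1/z^5, 1/z^7 in the asymptotic series for log Gamma(z).
COEFFS = [1 / 12, -1 / 360, 1 / 1260, -1 / 1680]

def log_gamma_asymptotic(z, n_terms):
    """Stirling's approximation plus the first n_terms correction terms."""
    total = (z - 0.5) * math.log(z) - z + 0.5 * math.log(2 * math.pi)
    for k in range(n_terms):
        total += COEFFS[k] / z ** (2 * k + 1)
    return total

def first_omitted_term(z, n_terms):
    """The term that would be added next (requires n_terms < len(COEFFS))."""
    return COEFFS[n_terms] / z ** (2 * n_terms + 1)

for z in [2.0, 5.0]:
    for n in range(4):
        err = math.lgamma(z) - log_gamma_asymptotic(z, n)
        nxt = first_omitted_term(z, n)
        print(f"z = {z}, terms = {n}: error = {err:.3e}, next term = {nxt:.3e}")
```

Even at z = 2, three correction terms bring the error down to a few parts in a million.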

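The coefficients 1/12, 1/360, etc. require some explanation. The general series is

$$\log \Gamma(z) \sim \left(z - \tfrac{1}{2}\right)\log z - z + \tfrac{1}{2}\log(2\pi) + \sum_{m=1}^{\infty} \frac{B_{2m}}{2m(2m-1)\,z^{2m-1}}$$

where the numbers B₂ₘ are Bernoulli numbers.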
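To see where 1/12, −1/360, 1/1260 come from, the coefficients B₂ₘ/(2m(2m − 1)) can be computed exactly. This sketch generates Bernoulli numbers with the standard recurrence Σₖ₌₀ⁿ C(n+1, k) Bₖ = 0 (the convention with B₁ = −½, which does not affect the even-indexed ones), using `fractions.Fraction` to keep everything exact. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def bernoulli_numbers(n_max):
    """Return [B_0, ..., B_{n_max}] from sum_{k=0}^{n} C(n+1, k) B_k = 0."""
    B = [Fraction(1)]
    for n in range(1, n_max + 1):
        s = sum(comb(n + 1, k) * B[k] for k in range(n))
        B.append(-s / (n + 1))
    return B

def log_gamma_coefficients(n_terms):
    """Coefficients B_{2m} / (2m (2m - 1)) of 1/z^(2m-1), for m = 1..n_terms."""
    B = bernoulli_numbers(2 * n_terms)
    return [B[2 * m] / (2 * m * (2 * m - 1)) for m in range(1, n_terms + 1)]

print(log_gamma_coefficients(5))
```

The first five coefficients come out as 1/12, −1/360, 1/1260, −1/1680 and 1/1188, matching the pattern of the series above.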

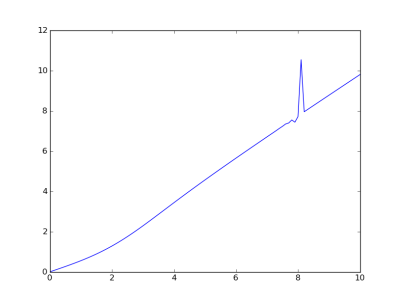

Another post showed how to use this asymptotic series to compute log n!.
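Since n! = Γ(n + 1), the series gives log n! directly. A minimal sketch, assuming three correction terms are enough for the accuracy wanted (the name `log_factorial` and the default `n_terms=3` are hypothetical choices). The cost is the same no matter how large n is.

```python
import math

def log_factorial(n, n_terms=3):
    """Approximate log(n!) = log Gamma(n + 1) with the asymptotic series."""
    z = n + 1
    coeffs = [1 / 12, -1 / 360, 1 / 1260, -1 / 1680]
    total = (z - 0.5) * math.log(z) - z + 0.5 * math.log(2 * math.pi)
    for k in range(n_terms):
        total += coeffs[k] / z ** (2 * k + 1)
    return total

for n in [5, 10, 20, 1000]:
    print(n, log_factorial(n), math.lgamma(n + 1))
```

With three correction terms the error is below 1/(1680 z⁷) with z = n + 1, so it is already tiny for small n.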

References

The asymptotic series for the error function is equation 7.1.23 in Abramowitz and Stegun. The bounds on the remainder term are described in section 7.1.24. The series for log gamma is equation 6.1.41 and the error term is described in 6.1.42.