Colin Wright pointed out a pattern in my previous post that I hadn’t seen before. He wrote about it here a couple years ago.

Start with the powers of 2 from the top of the post:

2^1 = 2

2^2 = 4

2^3 = 8

2^4 = 16

2^5 = 32

2^6 = 64

2^7 = 128

2^8 = 256

2^9 = 512

and sort the numbers on the right-hand side in lexical order, i.e. sort them as you'd sort words. This is almost never what you want to do, but here it is [1].

128

16

2

256

32

4

512

64

8

Next, add a decimal point after the first digit in each number.

1.28

1.6

2

2.56

3.2

4

5.12

6.4

8
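Here's a minimal Python sketch of the two steps above: sort the powers of 2 as strings, then put a decimal point after the first digit. The variable names are mine, chosen for illustration.

```python
# Powers of 2 from 2^1 through 2^9.
powers = [2**n for n in range(1, 10)]

# Lexical order: sort the numbers as strings, the way you'd sort words.
lexical = sorted(str(p) for p in powers)

# Put a decimal point after the first digit of each string.
# A single digit like "2" becomes "2.0".
shifted = [float(f"{s[0]}.{s[1:] or '0'}") for s in lexical]

print(lexical)
print(shifted)
```

Sorting the integers themselves would give numerical order instead, so the conversion to strings before sorting is what produces the list above.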

Now compare the decibel values listed at the bottom of the previous post.

1.25

1.6

2

2.5

3.2

4

5

6.3

8

Five of the numbers are the same and the remaining four are close. And where the numbers differ, the exact decibel value is between the two approximate values.

Here’s a table to compare the exact value rounded to 3 decimals, the approximation given before, and the value obtained by sorting powers of 2 and moving the decimal.

|---+-----------+--------+-------|
| n | 10^(n/10) | approx | power |
|---+-----------+--------+-------|
| 1 |     1.259 |   1.25 |  1.28 |
| 2 |     1.585 |   1.60 |  1.60 |
| 3 |     1.995 |   2.00 |  2.00 |
| 4 |     2.512 |   2.50 |  2.56 |
| 5 |     3.162 |   3.20 |  3.20 |
| 6 |     3.981 |   4.00 |  4.00 |
| 7 |     5.012 |   5.00 |  5.12 |
| 8 |     6.310 |   6.30 |  6.40 |
| 9 |     7.943 |   8.00 |  8.00 |
|---+-----------+--------+-------|
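If you'd like to check the table yourself, the following Python sketch recomputes each column. The `approx` list is copied from the decibel values above, and the other names are just for illustration. It also checks the claim that, wherever the two approximations differ, the exact value lies between them.

```python
# Approximate decibel values, copied from the list above.
approx = [1.25, 1.6, 2, 2.5, 3.2, 4, 5, 6.3, 8]

# Powers of 2 sorted lexically, with a decimal point after the first digit.
strings = sorted(str(2**n) for n in range(1, 10))
power = [float(f"{s[0]}.{s[1:] or '0'}") for s in strings]

rows = []
for n in range(1, 10):
    exact = 10 ** (n / 10)
    a, p = approx[n - 1], power[n - 1]
    rows.append((n, exact, a, p))
    print(f"{n}  {exact:.3f}  {a:.2f}  {p:.2f}")

# Rows where the two approximations disagree.
differ = [(n, e, a, p) for (n, e, a, p) in rows if a != p]

# In each such row the exact value falls between the two approximations.
between = all(min(a, p) < e < max(a, p) for (_, e, a, p) in differ)
print(len(differ), between)
```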

So the numbers at the top and bottom of my list are practically the same, but in a different order.

Related post: 2s, 5s, and decibels.

[1] One reason I use ISO dates (YYYY-MM-DD) in my personal work is that lexical order then equals chronological order. Otherwise you can get weird things like December coming before March because D comes before M or because 1 (as in 12) comes before 3. Using year-month-day and padding days and months with zeros as needed eliminates this problem.
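A small Python sketch of the point in this footnote, using three made-up dates: sorting ISO strings gives chronological order, while sorting unpadded month/day/year strings does not.

```python
from datetime import date

# Hypothetical dates, chosen only to illustrate the sorting behavior.
dates = [date(2023, 12, 5), date(2023, 3, 14), date(2024, 1, 2)]
chronological = sorted(dates)

# ISO format: zero-padded YYYY-MM-DD, so string order matches date order.
iso_sorted = sorted(d.isoformat() for d in dates)

# Unpadded month/day/year: December (12) sorts before March (3).
mdy_sorted = sorted(f"{d.month}/{d.day}/{d.year}" for d in dates)

print(iso_sorted)
print(mdy_sorted)
print(iso_sorted == [d.isoformat() for d in chronological])
```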

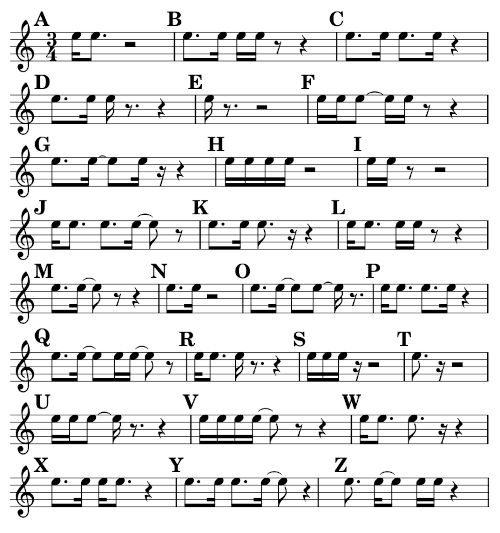

![Tree[Q, {Tree[R, {A, K, N, S, T}], Tree[S, {B, L, O}], Tree[T, {H, X}]}]](https://www.johndcook.com/qcodes.png)