One of the most famous theorems in chaos theory, maybe the most famous, is that “period three implies chaos.” This compact statement is the title of a 1975 paper [1] by Li and Yorke. But what does it mean?

This post will look at what the statement means, and the next post will look at a generalization.

Period

First of all, the theorem refers to period in the sense of function iterations, not in the sense of translations. And it applies to particular points, not to the function as a whole.

The sine function is periodic in the sense that it doesn’t change if you shift it by 2π. That is,

sin(2π+x) = sin(x)

for all x. The sine function has period 2π in the sense of translations.

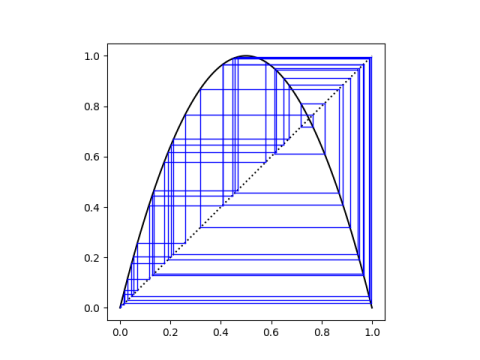

In dynamical systems, period refers to getting the same result, not when you shift a function, but when you apply it to itself. A point x has period n under a function f if applying the function n times gives you x back, but applying it fewer than n times does not. In other words, n is the smallest positive integer for which n applications of f return x.

So, for example, under the function f(x) = −x every non-zero x has period 2, and x = 0 has period 1.
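The definition translates directly into a few lines of code. The following is a minimal sketch, not a library routine: the names `period` and `negate` and the cutoff `max_n` are made up for illustration, and the exact equality test assumes exact arithmetic (integers or fractions). With floating point you would need a tolerance instead.

```python
def period(f, x, max_n=1000):
    """Return the smallest n >= 1 such that applying f to x n times gives x.

    Returns None if no such n <= max_n exists. The comparison is exact,
    so x should be an int or Fraction rather than a float.
    """
    y = x
    for n in range(1, max_n + 1):
        y = f(y)          # y is now f applied n times to x
        if y == x:
            return n      # first return to x, so n is the period
    return None


def negate(x):
    """The example from the text: f(x) = -x."""
    return -x


print(period(negate, 5))  # 2
print(period(negate, 0))  # 1
```

Because the loop stops at the first return to x, the value it reports is the period in the sense above, not merely some n for which the iterates repeat.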

Three

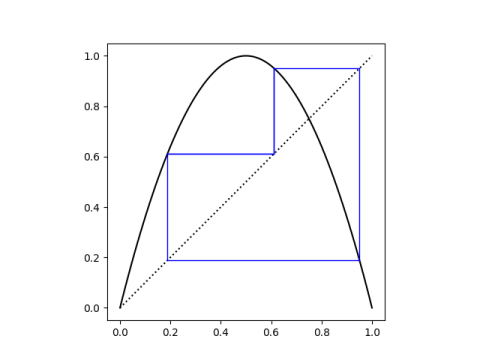

Period three specifically means

f( f( f(x) ) ) = x

but

x ≠ f(x)

and

x ≠ f( f(x) ).

Note that this is a property of x, not a property of f per se. That is, f has the property that at least one such x exists, but not every point has period three.

In fact it’s necessarily far from true for all points, which leads to what we mean by chaos.
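To make this concrete, here is a small sketch using the tent map on [0, 1]. The tent map is my choice of example, not one taken from the paper. The point 2/7 has period three under it, while the point 2/3 is fixed, so having period three is clearly a property of the point rather than of the map. Exact fractions are used so the equality tests are not clouded by rounding.

```python
from fractions import Fraction


def tent(x):
    """Tent map on [0, 1]: 2x on the left half, 2 - 2x on the right half."""
    return 2 * x if x <= Fraction(1, 2) else 2 - 2 * x


x = Fraction(2, 7)
orbit = [x, tent(x), tent(tent(x)), tent(tent(tent(x)))]
print([str(v) for v in orbit])  # ['2/7', '4/7', '6/7', '2/7']

# Period three: the third iterate returns to x, the first two do not.
has_period_three = orbit[3] == x and orbit[1] != x and orbit[2] != x
print(has_period_three)  # True

# A different point of the same map: 2/3 is fixed, so it has period 1.
fixed = Fraction(2, 3)
print(tent(fixed) == fixed)  # True
```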

Chaos

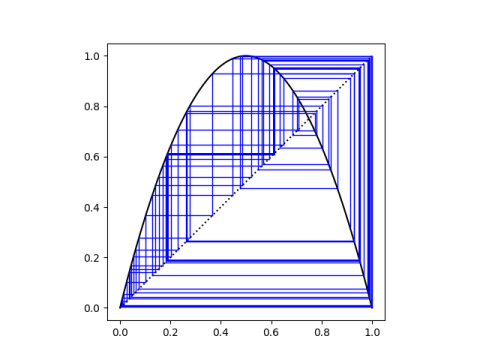

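If f is a continuous function from some interval I to itself, it cannot be the case that all points have period 3. For instance, f(x) − x is positive at the smallest point of a period-3 orbit and negative at the largest, so by the intermediate value theorem f has a fixed point in between, and a fixed point has period 1.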

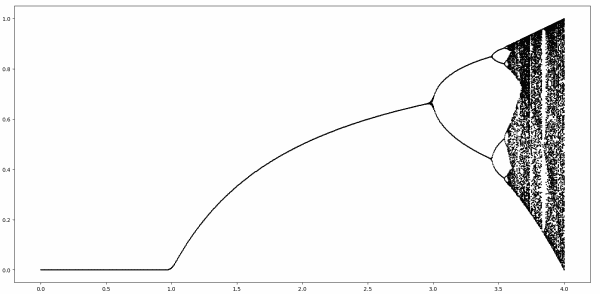

If one point has period 3, then some other point must have period 4. And another point must have period 97. And some point has period 1776.

If some point in I has period 3, then for every positive integer n there is a point in I that has period n. Buy one, get infinitely many for free.
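The theorem only says such points exist; it does not say where they are. For one particular map, though, they can be written down. The sketch below again uses the tent map on [0, 1] (my example, not the paper's), which has points of period three, and checks with exact arithmetic that x = 2/(2^n + 1) has period exactly n for the values of n mentioned above. The formula is a feature of this map, not something the theorem provides.

```python
from fractions import Fraction


def tent(x):
    """Tent map on [0, 1]: 2x on the left half, 2 - 2x on the right half."""
    return 2 * x if x <= Fraction(1, 2) else 2 - 2 * x


def period(f, x, max_n):
    """Smallest n in 1..max_n with f applied n times to x equal to x, else None."""
    y = x
    for n in range(1, max_n + 1):
        y = f(y)
        if y == x:
            return n
    return None


results = {}
for n in (3, 4, 97, 1776):
    # Under the tent map this point doubles n - 1 times, then folds back.
    x = Fraction(2, 2**n + 1)
    results[n] = period(tent, x, max_n=n)
    print(n, results[n])
```

Each line of output should show the same number twice: the orbit of 2/(2^n + 1) visits n distinct points before returning to its start.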

And there are points that are not periodic at all, uncountably many of them. Li and Yorke show that the orbits of these points keep coming arbitrarily close to one another and then moving apart again, and that none of them settles down to a periodic orbit. That erratic behavior is what the paper means by chaos [2].

This is really an amazing theorem. It says that if there is one point that satisfies a simple condition (period three) then the function as a whole must have very complicated behavior as far as iteration is concerned.

More

If the existence of a point with period 3 implies the existence of points with every other period, what does the existence of a point with some other period, say period 10, imply? That’s the subject of the next post.


[1] Li, T.Y.; Yorke, J.A. (1975). “Period Three Implies Chaos.” American Mathematical Monthly. 82 (10): 985–992.

[2] There is no single definition of chaos. Some take the existence of dense periodic orbits as the defining characteristic of a chaotic system. See, for example, [3].

[3] Aulbach, B.; Kieninger, B. (2001). “On Three Definitions of Chaos.” Nonlinear Dynamics and Systems Theory. 1 (1): 23–37.