Suppose you have a polynomial p(x) and in interval [a, b] and you want to know how many distinct real roots the polynomial has in the interval. You can answer this question using Sturm’s algorithm.

Let p0(x) = p(x) and letp1(x) be its derivative p‘(x).

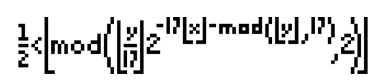

Then define a series of polynomials for i ≥ 1

pi+1(x) = – pi-1(x) mod pi(x)

until you reach a constant. Here f mod g means the remainder when f is divided by g.

This sequence of polynomials is called the Sturm series. Count the number of sign changes in the Sturm series at a and at b. Then the difference between these two counts is the number of distinct roots of p in the interval [a, b].

Example with Mathematica

As an example, let’s look at the number of real zeros for

p(x) = x5 – x – c

for some constant c. We’ll let Mathematica calculate our series.

p0[x_] := x^5 - x - c

p1[x_] := D[p0[x], x]

p2[x_] := -PolynomialRemainder[p0[x], p1[x], x]

p3[x_] := -PolynomialRemainder[p1[x], p2[x], x]

This works out to

p0(x) = x5 – x – c

p1(x) = 5x4 – 1

p2(x) = (4/5)x + c

p3(x) = 1 – 3125c4/256

Now suppose c = 3 and we want to find out how many zeros p has in the interval [0, 2].

Evaluating our series at 0 we get -3, -1, 3, -3109/16. So the pattern is – – + -, i.e. two sign changes.

Evaluating our series at 2 we get 27, 79, 23/5, -3109/16. So the pattern is + + + -, i.e. one sign change.

This says x5 – x – 3 has one real root between 0 and 2.

By the way, you can multiply the polynomials in the sequence by any positive constant you like if that makes calculations easier. This multiplies subsequent polynomials by the same amount and doesn’t change the signs.

Fine print

Note that the algorithm counts distinct roots; multiple roots are counted once.

You can let the end points of the interval be infinite, and so count all the real roots.

I first tried using Mathematica’s PolynomialMod function, but

PolynomialMod[5 x^4 - 1, 4 x/5 + c]

gave the unexpected result 5x4 – 1. That’s because PolynomialMod does not let you specify what the variable is. It assumed that 4x/5 + c was a polynomial in c. PolynomialRemainder is explicit about the variable, and that’s why the calls to this function above have x as the last argument.