I recently evaluated two software applications designed to find PII (personally identifiable information) in free text using natural language processing. Both failed badly, passing over obvious examples of PII. By contrast, when I tried natural language processing software on a nonsensical poem, the software did quite well.

Doctor’s notes

It occurred to me later that the software packages to search for PII probably assume “natural language” has the form of fluent prose, not choppy notes by physicians. The notes that I tested did not consist of complete sentences marked up with grammatically correct punctuation. The text may have been transcribed from audio.

Some software packages deidentify medical notes better than others. I’ve seen some work well and some work poorly. I suspect the former were written specifically for their purpose and the latter were more generic.

Jabberwocky

I also tried NLP software on Lewis Carroll’s poem Jabberwocky. It too is unnatural language, but in a different sense.

Jabberwocky uses nonsense words that Carroll invented for the poem, but otherwise it is grammatically correct. The poem is standard English at the level of structure, though not at the level of words. It is the opposite of the medical notes, which are standard English at the word level (albeit with a high density of technical terms) but not at the structural level.

I used the spaCy natural language processing library on a couple of stanzas from Carroll’s poem.

“Beware the Jabberwock, my son!

The jaws that bite, the claws that catch!

Beware the Jubjub bird, and shun

The frumious Bandersnatch!”

He took his vorpal sword in hand;

Long time the manxome foe he sought—

So rested he by the Tumtum tree

And stood awhile in thought.

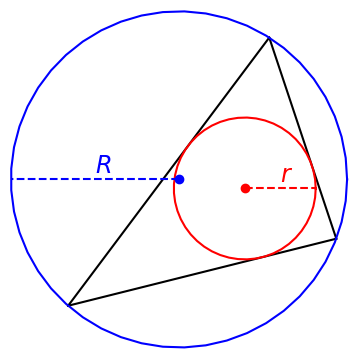

I fed the lines into spaCy and asked it to diagram the lines, indicating parts of speech and dependencies. The software did a good job of inferring the use of even the nonsense words. I gave the software one line at a time rather than a stanza at a time because the latter results in diagrams that are awkwardly wide, too wide to display here. (The spaCy visualization software has a “compact” option, but this option does not make the visualizations much more compact.)

Here are the visualizations of the lines.

And here is the Python code I used to create the diagrams above.

    import spacy
    from spacy import displacy
    from pathlib import Path

    # Small English pipeline; install it with
    #     python -m spacy download en_core_web_sm
    nlp = spacy.load("en_core_web_sm")

    lines = [
        "Beware the Jabberwock, my son!",
        "The jaws that bite, the claws that catch!",
        "Beware the Jubjub bird",
        "Shun the frumious Bandersnatch!",
        "He took his vorpal sword in hand.",
        "Long time the manxome foe he sought",
        "So rested he by the Tumtum tree",
        "And stood awhile in thought."
    ]

    for line in lines:
        doc = nlp(line)
        # Render the dependency parse as an SVG string
        svg = displacy.render(doc, style="dep", jupyter=False)
        # Name each file after the non-punctuation tokens in its line
        file_name = '-'.join([w.text for w in doc if not w.is_punct]) + ".svg"
        output_path = Path(file_name)
        output_path.write_text(svg, encoding="utf-8")
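The diagrams are convenient to look at, but the same parse can also be read off as plain text, which makes it easier to check how a nonsense word like "frumious" was tagged. What follows is a minimal sketch and was not part of the original experiment. The helper names `token_rows`, `format_rows`, and `demo` are made up for illustration; `text`, `pos_`, `dep_`, and `head` are spaCy's token attributes. It assumes the `en_core_web_sm` model is installed.

```python
from typing import Iterable, List, Tuple

Row = Tuple[str, str, str, str]


def token_rows(doc: Iterable) -> List[Row]:
    """Collect (text, coarse part of speech, dependency label, head text) per token.

    `doc` is expected to be a spaCy Doc, or any iterable of objects that
    expose the same four attributes.
    """
    return [(t.text, t.pos_, t.dep_, t.head.text) for t in doc]


def format_rows(rows: List[Row]) -> str:
    """Lay the rows out as left-aligned, space-padded columns."""
    if not rows:
        return ""
    widths = [max(len(row[i]) for row in rows) for i in range(4)]
    return "\n".join(
        "  ".join(cell.ljust(w) for cell, w in zip(row, widths)).rstrip()
        for row in rows
    )


def demo(text: str = "Shun the frumious Bandersnatch!") -> None:
    """Hypothetical entry point: parse one line and print its token table."""
    import spacy  # imported here so the helpers above work without spaCy

    nlp = spacy.load("en_core_web_sm")
    print(format_rows(token_rows(nlp(text))))
```

Calling `demo()` prints one row per token. The tags depend on the model and its version, so the table may not match the diagrams exactly.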