I saw someone point out recently that

10! = 7! × 5! × 3! × 1!

Are there more examples like this?

What would you call the pattern on the right? I don’t think there’s a standard name, but here’s why I think it should be called double super factorial or super double factorial.

Super factorial

The factorial of a positive integer n is the product of the positive integers up to and including n. The super factorial of n is the product of the factorials of the positive integers up to and including n. So, for example, 7 super factorial would be

7! × 6! × 5! × 4! × 3! × 2! × 1!

Double factorial

The double factorial of a positive integer n is the product of all the positive integers up to n with the same parity as n. So, for example, the double factorial of 7 would be

7!! = 7 × 5 × 3 × 1.

Double super factorial

The pattern at the top of the post is like super factorial, but it only includes odd terms, so it’s like a cross between super factorial and double factorial, hence double super factorial.

Denote the double super factorial of n as dsf(n), the product of the factorials of all numbers up to n with the same parity as n. That is,

dsf(n) = n! × (n − 2)! × (n − 4)! × … × 1

where the 1 at the end is 1! if n is odd and 0! if n is even. In this notation, the observation at the top of the post is

10! = dsf(7).
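The definition above translates directly into a few lines of Python. This is a minimal sketch; the function name `dsf` simply mirrors the notation in this post and is not a standard library function.

```python
from math import factorial

def dsf(n):
    """Double super factorial: product of k! for k = n, n-2, n-4, ...
    ending at 1! if n is odd and 0! if n is even."""
    result = 1
    for k in range(n, -1, -2):
        result *= factorial(k)
    return result

# The observation at the top of the post.
print(dsf(7) == factorial(10))  # True
```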

Super double factorial

We can see by re-arranging terms that a double super factorial is also a super double factorial. For example, look at

dsf(7) = 7! × 5! × 3! × 1!

If we separate out the first term in each factorial we have

(7 × 5 × 3 × 1)(6! × 4! × 2!) = 7!! dsf(6)

We can keep going and show in general that

dsf(n) = n!! × (n − 1)!! × (n − 2)!! × … × 1

We could call the right-hand side super double factorial, sdf(n). Just as a super factorial is a product of factorials, a super double factorial is a product of double factorials. Therefore

dsf(n) = sdf(n).
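The identity can be checked numerically for small n. The sketch below assumes the convention 0!! = 1 and uses helper names (`double_factorial`, `dsf`, `sdf`) chosen to match the notation here. It also checks the single step used in the rearrangement, dsf(n) = n!! × dsf(n − 1). It needs Python 3.8 or later for `math.prod`.

```python
from math import factorial, prod

def double_factorial(n):
    # n!! = n (n-2) (n-4) ... down to 1 or 2; the empty product gives 0!! = 1
    return prod(range(n, 0, -2))

def dsf(n):
    # product of factorials of numbers up to n with the same parity as n
    return prod(factorial(k) for k in range(n, -1, -2))

def sdf(n):
    # product of the double factorials 1!!, 2!!, ..., n!!
    return prod(double_factorial(k) for k in range(1, n + 1))

# Peeling off the leading factor of each factorial: dsf(n) = n!! * dsf(n-1)
print(all(dsf(n) == double_factorial(n) * dsf(n - 1) for n in range(1, 50)))

# Repeating that step gives dsf(n) = sdf(n)
print(all(dsf(n) == sdf(n) for n in range(50)))
```

A numerical check over a finite range is evidence rather than proof, but the recurrence in the first check is exactly the step that an induction would use.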

Factorials that equal double super factorials

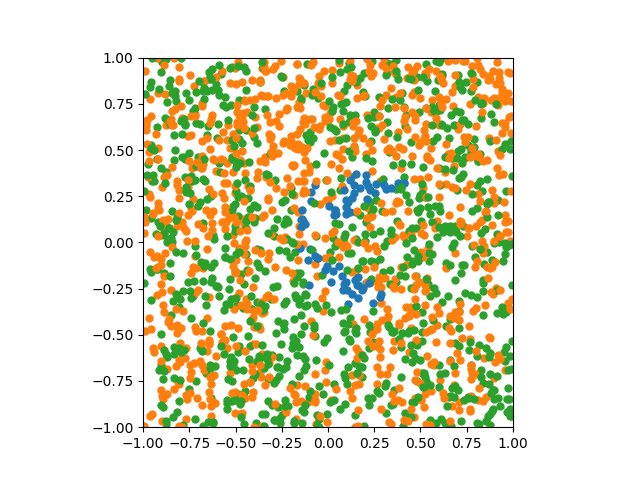

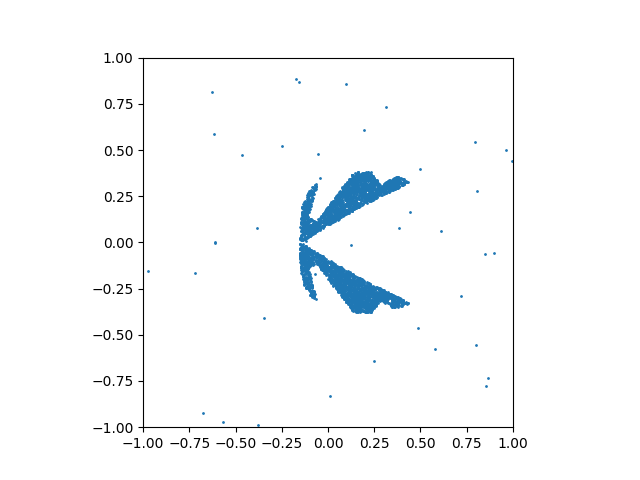

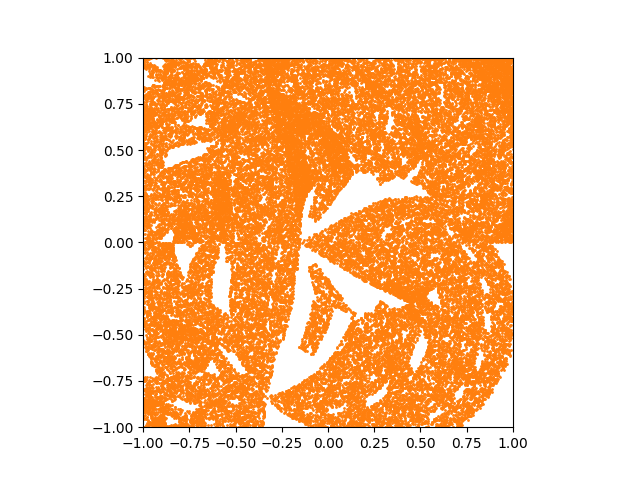

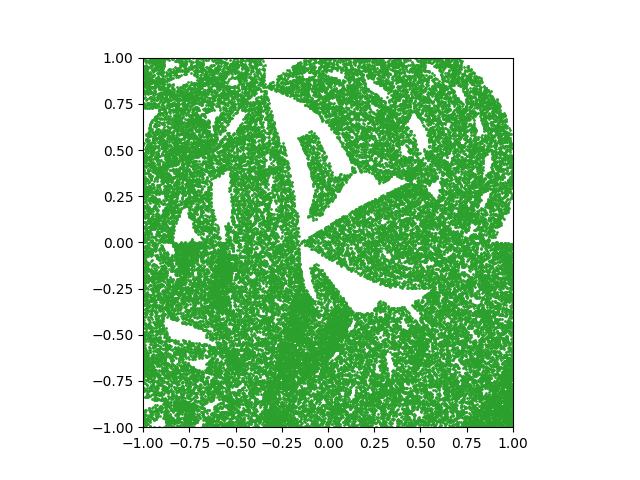

Are there more solutions to

n! = dsf(m)

besides n = 10 and m = 7? Yes, here are some.

0! = dsf(0)

1! = dsf(1)

2! = dsf(2)

3! = dsf(3)

6! = dsf(5)
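A brute-force search finds these solutions. The sketch below is one way to do it, with a hypothetical helper `as_factorial` that reports the smallest n ≥ 1 with n! = x. Because 0! = 1! = 1, the cases dsf(0) and dsf(1) are both reported with n = 1. The cutoff of 30 for m is arbitrary.

```python
from math import factorial

def dsf(m):
    result = 1
    for k in range(m, -1, -2):
        result *= factorial(k)
    return result

def as_factorial(x):
    """Return the smallest n >= 1 with n! == x, or None if x is not a factorial."""
    n, f = 1, 1
    while f < x:
        n += 1
        f *= n
    return n if f == x else None

solutions = []
for m in range(30):
    n = as_factorial(dsf(m))
    if n is not None:
        solutions.append((n, m))

print(solutions)  # [(1, 0), (1, 1), (2, 2), (3, 3), (6, 5), (10, 7)]
```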

There are no solutions to

n! = dsf(m)

if n > 10. Here’s a sketch of a proof.

Bertrand’s postulate says that for m > 1 there is always a prime p between m and 2m. Now p divides (2m)! but p cannot divide dsf(m) because dsf(m) is a product of integers less than or equal to m.

Suppose we can show that for some N, m > N implies (2m)! < dsf(m). Then any solution to

n! = dsf(m)

with m > N would have n > 2m, and so the prime p between m and 2m would divide the left side but not the right. Hence every solution has m ≤ N. In fact N = 12 works, and since dsf(12) < 25!, every solution has n ≤ 24. We can show empirically there are no solutions for n = 11 up to 24, and the proof shows there are no solutions for n > 24.
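The numerical claims in the sketch are easy to check. The code below is only a sanity check, not a proof. It tests the inequality (2m)! < dsf(m) for m from 13 up to an arbitrary cutoff of 60, and then confirms that none of 11!, 12!, …, 24! is a double super factorial. The variable names are illustrative.

```python
from math import factorial

def dsf(m):
    result = 1
    for k in range(m, -1, -2):
        result *= factorial(k)
    return result

# The inequality the proof sketch needs, checked for 12 < m < 60.
inequality_holds = all(factorial(2 * m) < dsf(m) for m in range(13, 60))
print(inequality_holds)

# dsf(m) exceeds 24! once m >= 12, so comparing against m < 30
# covers every possible match for n = 11, ..., 24.
dsf_values = {dsf(m) for m in range(30)}
no_solutions = all(factorial(n) not in dsf_values for n in range(11, 25))
print(no_solutions)
```

Extending the inequality to all m > 12 takes an induction step: going from m to m + 2 multiplies dsf(m) by (m + 2)! but multiplies (2m)! by only four factors, each at most 2m + 4.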