Problem statement

Suppose you have a large number of points in 3D space and you want to find a sphere containing all the points. You’d like the sphere to be as small as possible, but you’re willing to accept a slightly larger sphere in exchange for simplicity or efficiency.

False starts

If you knew the center of the sphere, the solution would be easy: find the distance from every point to the center, and the large distance is the radius. But you don’t know the center of the bounding sphere when you start.

What if you found the two points the furthest apart from each other? Maybe that would give you the diameter of the bounding sphere, and the center would be the midpoint of the line connecting these two points.

There are a couple problems with this approach. First, how would you find the two points furthest from each other? The most obvious approach would be to compute the distance between all pairs of points. But if you have n points, you have n(n+1)/2 pairs of points. That would make the algorithm O(n²).

If you do know the two points that are furthest apart, that gives you a lower bound on the diameter of the bounding sphere, but not the diameter itself. For example, suppose we’re working in two dimensions and discover that the longest line between two points connects (-10, 0) and (10, 0). You might try centering a circle of radius 10 at the origin. But what if there’s a third point (0, 11)? It doesn’t change which two points are maximally distance, but it’s a distance of 11 from the origin.

Jack Ritter’s algorithm

A fairly simple algorithm by Jack Ritter runs in linear time, i.e. O(n), and is guaranteed to produce a sphere containing the given points. But the sphere it returns might be a little bigger than necessary. Ritter says [1] “the sphere calculated is about 5% bigger than the ideal minimum-radius sphere.”

When I read that, my first thought was “What kind of distribution is he assuming on points to come up with that 5% estimate?” The expected efficiency of the algorithm must depend on the the distribution on the input points.

Ritter gives two examples. The first started with a sphere “randomly populated with 100,000 points.” Random according to what distribution? I assume uniform. The calculated sphere had a radius 4% larger than optimal. The second example was a cube “randomly populated with 10,000 points.” Again I assume the distribution was uniform. The calculated sphere had a radius that was 7% larger than optimal.

I’ve seen a couple sources that say Ritter’s algorithm is between 5% and 20% from optimal. I’m curious what the 20% figure is based on; the examples below fit much better than that. Maybe the fit is worse for point sets with less symmetry. The number of points may also effect the expected efficiency.

Testing Ritter’s algorithm

To test Ritter’s algorithm, I grabbed an implementation in Python from here. (There’s a flaw in this implementation. See update below.)

Cube example

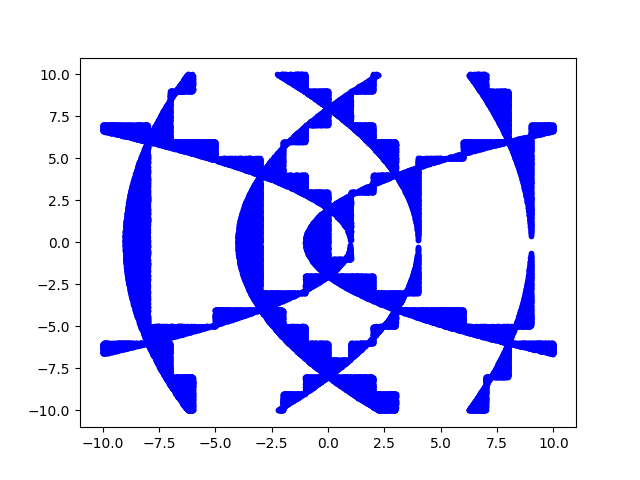

First, I took 10,000 uniform samples from the unit cube. I started with this example since sampling from a cube is easy.

I changed the last four of the random samples to be corners of the cube so I’d know the exact answer.

from scipy.stats import uniform

x = uniform.rvs(size=(10000, 3)).tolist()

x[-1] = [0, 0, 0]

x[-2] = [0, 0, 1]

x[-3] = [1, 0, 0]

x[-4] = [1, 1, 1]

print( bounding_sphere(x) )

This returned a sphere of radius 0.8772 centered at (0.5010, 0.4830, 0.4968). The exact solution is a sphere of radius 0.5√3 centered at (0.5, 0.5, 0.5). The radius of the computed sphere was only 1.3% greater than optimal. I repeated my experiment 10 times and the worst fit was 2.2% greater than optimal. It seems Ritter’s cube experiment result was either unlucky or flawed, and in either case pessimistic.

Sphere example

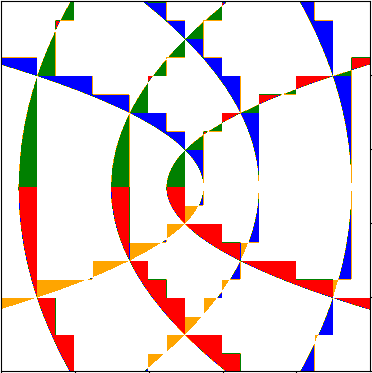

Next I wanted to generate around 10,000 points uniformly from a sphere of radius 0.5 centered at (0.5, 0.5, 0.5). I did this by generating points from the unit cube and throwing away those that weren’t in the sphere I was after [2]. The sphere takes up about half of the unit cube, so I generated 20,000 points in order to have about 10,000 that landed inside the sphere.

def inside(c):

return (c[0]-0.5)**2 + (c[1]-0.5)**2 + (c[2]-0.5)**2 < 1/4

cube = uniform.rvs(size=(20000, 3)).tolist()

x = [c for c in cube if inside(c)]

x[-1] = [0.5, 0.5, 0.0]

x[-2] = [0.0, 0.5, 0.5]

x[-3] = [0.5, 0.0, 0.5]

x[-4] = [0.5, 1.0, 0.5]

print( bounding_sphere(x) )

This returned a sphere of radius 0.5282 centered at (0.4915, 0.4717, 0.4955). This radius is about 5.6% larger than the optimal radius of 0.5.

I repeated the experiment 10 times, and on average the spheres were 5% larger than optimal. The worst case was 7.6% larger than optimal.

Update

Thanks to Chris Elion for pointing out that the implementation of Ritter’s algorithm that I found does not fully implement the algorithm: it does not update the center as Ritter does in [1].

Chris reran the calculations above and in each case roughly cut the distance between the computed and optimal radius in half.

Related posts

[1] Graphics Gems. Andrew S. Glassner, editor. Academic Press. 1990.

[2] There’s an apocryphal quote attributed to Michelangelo that when asked how he carved David, he said that he started with a block of marble and cut away everything that wasn’t David. In probability this approach is known as acceptance-rejection sampling. It’s simple, but not always the most efficient approach.