Old cryptography never dies. After a method is broken, its use declines, but never goes to zero.

And when I say “broken,” I do not mean no longer recommended, but broken to the point of being trivial to decrypt. I recently ran across an anecdote from World War I showing this is nothing new. The Vigenère cipher had been broken decades before the war broke out but was widely used anyway.

In this post I’ll explain what the Vigenère cipher is, give a little history of its rise and fall, and explain how it can be broken.

Vigenère cipher

A simple substitution cipher replaces one letter with another. For example, maybe you replace A with X, B with J, C with B, etc. Simple substitution ciphers are so easy to break that they’re included in pulp puzzle books.

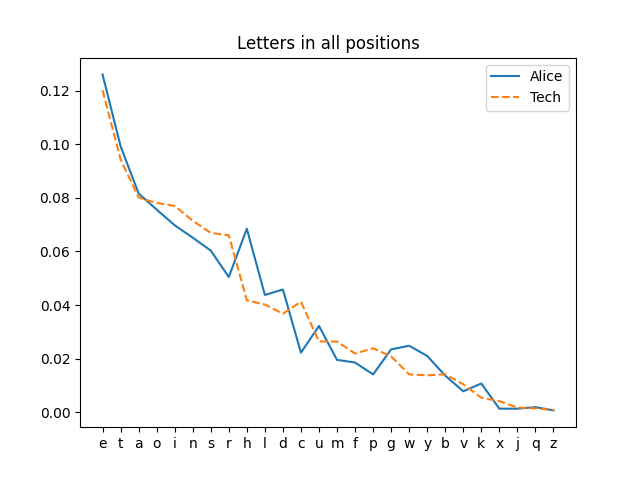

The Vigenère cipher is a step up from simple substitution. It combines a key of length n with the clear text in such a way that you effectively have n different simple substitution ciphers. It’s not hard to break—I’ll explain how to break it below—but it’s less vulnerable that simple substitution. It makes it harder to spot high-frequency letters like E because not all E’s are encrypted the same way.

In its simplest form Vigenère essentially adds a key and a message mod 26. For example, if your message is “Attack the bridge at dawn” and your key is “ossifrage” the encryption would work like this.

clear: ATTACKTHEBRIDGEATDAWN

key: OSSIFRAGEOSSIFRAGEOSS

cipher: OLLIHBTNIPJALLVAZHOOF

Starting from the left, O is the 14th letter of the alphabet (counting from 0), and so A is moved ahead 14 places to 0. Since the clear text and the key are involved symmetrically, you could say that the letter O is moved head zero places since A is the 0th letter of the alphabet.

The next two letters of the clear text and the key happen to be repeated [1]. T and S are the 19th and 18th letters of the alphabet, and 19 + 18 = 37, which is congruent to 11 mod 16. The 11th letter of the alphabet is L.

Here’s a little Python code to carry out the encryption.

clear = "ATTACKTHEBRIDGEATDAWN"

key = "OSSIFRAGE"

A = ord('A')

for i in range(len(clear)):

c = ord(clear[i]) - A

k = ord(key[i % len(key)]) - A

e = (c + k) % 26

print(chr(e + A), end="")

print()

A more secure version of Vigenère moves the clear text through scrambled alphabets. The simplified version above is a particularly insecure special case. However, using scrambled alphabets (“polyalphabetic substitution”) doesn’t make the method that much stronger.

World War I

According to [2],

[The Vigenère cipher] was commonly regarded as unbreakable and was widely used up through World War I, even though the Prussian cryptographer Friedrich Wilhelm Kasiski had published a method for breaking it in 1863.

David Kahn [3] gives more detail about Kasiski’s book:

…Die Geheimschriften und die Dechiffrir-kunst concentrates on answering the problem that had vexed cryptanalysts for more than 300 years: how to achieve a general solution for polyalphabetic ciphers with repeating keywords. … But the 95-page volume seems to have stirred almost no comment at the time.

So armies were depending on the security of Vigenère over 50 years after it had been broken. This was worse than using DES today, around 50 years after it came out. Weaknesses have been found in DES, and it’s 56-bit keys are too short to resist a brute force attack from contemporary computers. But DES has not been broken as thoroughly as Vigenère had been broken by the time of WWI.

Breaking Vigenère

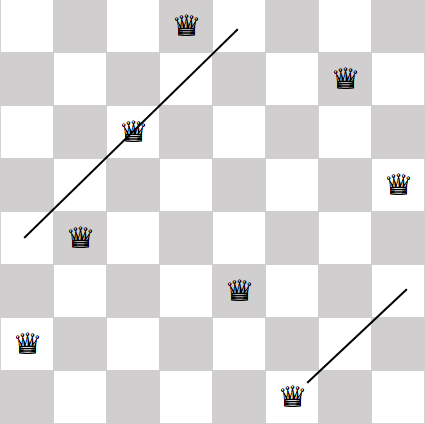

So how would you go about attacking Vigenère? At first it seems hard to break. You can’t naively look at letter frequencies. In the example above, the clear text has four A’s, but they are encrypted three different ways.

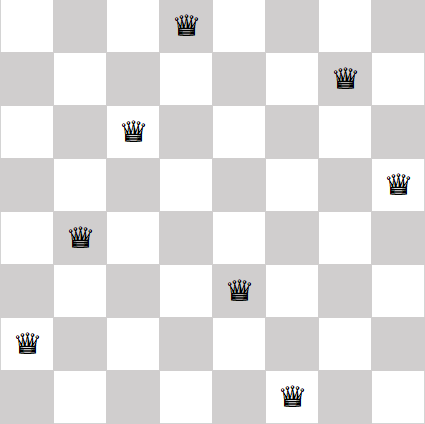

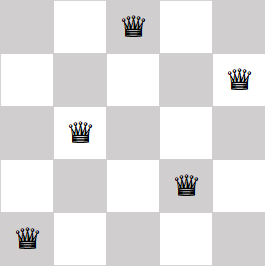

However, Vigenère with a key of length n is just a set of n different simple substitution ciphers. You can chop the cipher text into n pieces and break each one separately.

How would you know the key length n? You could just try brute force. Try 1, then 2, then 3, etc. For example, to see whether the example above might have a key of length 2, you could analyze the letters in even positions and the letters in odd positions separately. If n isn’t too large (and in practice it often wasn’t large) brute force is efficient.

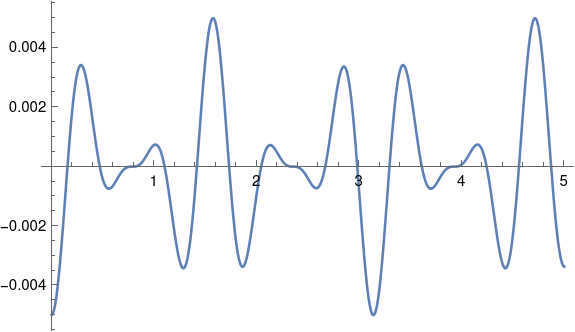

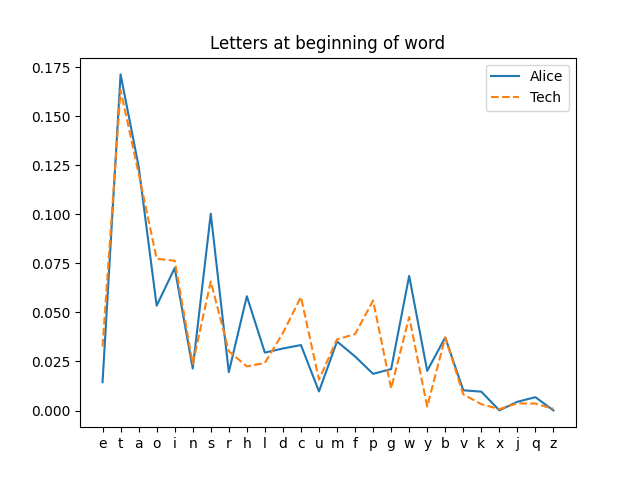

A more sophisticated approach would be to try different alignments and see which one results in statistical properties most consistent with English text. (Or French text if you suspect your clear text was in French etc.) This was the motivation for William Friedman developing his index of coincidence.

In hindsight this seems fairly obvious, but it was not obvious to anyone for three centuries before Kasiski, nor to many for decades after.

Why study obsolete cryptography?

Classical encryption methods are completely obsolete. The methods described in David Kahn’s book The Codebreakers can now be broken almost instantly. So why study old cryptography?

My interest in the subject was renewed recently because I had use for it in new projects. Not directly, though I have seen obsolete encryption in use, but indirectly. Some of the ideas from classical cryptography are relevant to searching for patterns in data, even though nothing was encrypted.

There are still lessons to learn from classical cryptography. For example, even a subtle lack of randomness in keys can be exploited. This was done during WWII, and flaws in random number generators are still causing security failures today.

Related posts

[1] This is a little foreshadowing of what can go wrong even with non-repeating keys: If the clear text and the key are English prose, coincidences like this will happen, and they are an exploitable weakness.

[2] James S. Craft and Lawrence C. Washington. An Introduction to Number Theory with Cryptography, 2nd edition. CRC Press.

[3] David Kahn. The Codebreakers: The Story of Secret Writing. Scribner, 1967.