Since you can describe a point in the plane with two numbers, why would you choose to use three numbers? Why would you ever want to use a coordinate system with more coordinates than necessary?

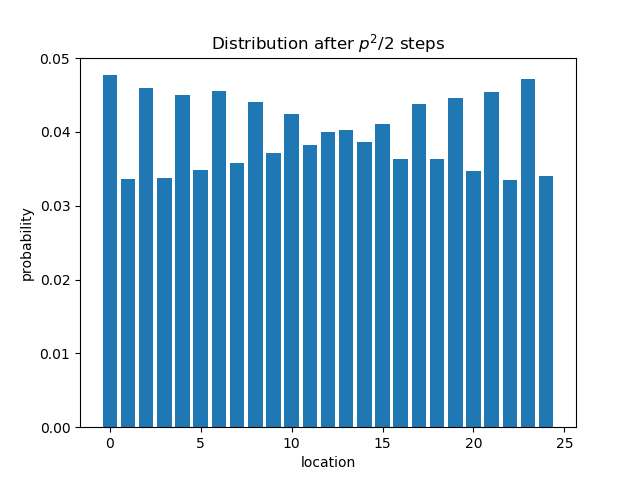

Barycentric coordinates

One way to indicate the location of a point inside a triangle is to give three weights, one for each vertex, that sum to 1 and express the point as a weighted average of the vertices. These three weights are called barycentric coordinates. Why would you use three numbers when two would do?

Barycentric coordinates make some things much simpler. For example, the coordinates of the three vertices are (1, 0, 0), (0, 1, 0), and (0, 0, 1) for any triangle. The points inside are written as convex combinations of the vertices. The coordinates of the center of mass, the barycenter, are (1/3, 1/3, 1/3). The vertices are treated symmetrically, even if the triangle is far from symmetric.

Barycentric coordinates are useful in applications, such as computer graphics and finite element analysis, because they are relative coordinates. When a triangle moves or is rescaled, you only need to keep track of where the vertices went; the coordinates of the points inside relative to the vertices haven’t changed.
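Here is a minimal sketch of the "relative coordinates" point in Python. The function names `barycentric` and `affine`, the particular triangle, and the particular affine map are all made up for illustration; the weights are found by solving a 2×2 linear system with Cramer's rule.

```python
def barycentric(p, a, b, c):
    """Return weights (wa, wb, wc) with wa + wb + wc = 1 and
    p = wa*a + wb*b + wc*c, for a non-degenerate triangle abc."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    # Twice the signed area of the triangle abc.
    det = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    if det == 0:
        raise ValueError("degenerate triangle")
    # Solve p - a = wb*(b - a) + wc*(c - a) by Cramer's rule.
    wb = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / det
    wc = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / det
    return 1.0 - wb - wc, wb, wc


def affine(pt):
    """An arbitrary invertible affine map (illustrative choice)."""
    x, y = pt
    return (2 * x - y + 3, x + 3 * y - 1)


# A hypothetical triangle and an interior point.
a, b, c = (0.0, 0.0), (4.0, 0.0), (0.0, 2.0)
p = (1.0, 0.5)

before = barycentric(p, a, b, c)
after = barycentric(affine(p), affine(a), affine(b), affine(c))
print(before)
print(after)
```

The two printed triples should agree up to floating point rounding: moving the triangle moved the vertices, but the point's coordinates relative to the vertices stayed put.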

This can be generalized to more dimensions. For example, you could describe a point in a tetrahedron with four coordinates. More generally, you could describe a point in an n-simplex by the convex combination coefficients of its n + 1 vertices.

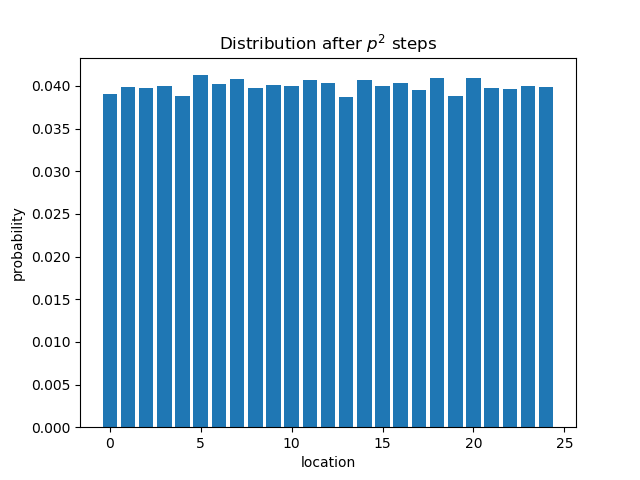

Barycentric coordinates are related to Dirichlet probability distributions. When you have n probabilities that sum to 1, you’ve got n-1 degrees of freedom. But it often simplifies things to work with n variables. As with the discussion of triangles above, the extra variable makes expressions more symmetric.
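As a rough illustration, one standard way to draw from a Dirichlet distribution is to normalize independent gamma random variables. The sketch below uses only the Python standard library; the helper name `sample_dirichlet` and the parameter values are assumptions for the example.

```python
import random


def sample_dirichlet(alphas, rng=random):
    """Draw one Dirichlet(alphas) sample by normalizing independent gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for alpha in alphas]
    total = sum(draws)
    return [d / total for d in draws]


random.seed(0)  # fixed seed only so that runs are repeatable
probs = sample_dirichlet([2.0, 3.0, 5.0])
print(probs, sum(probs))
```

The sample is a list of three positive numbers that sum to 1 (up to rounding), so it has only two degrees of freedom, yet the code treats all three components identically. In other words, a Dirichlet sample is a random point in a triangle, expressed in barycentric coordinates.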

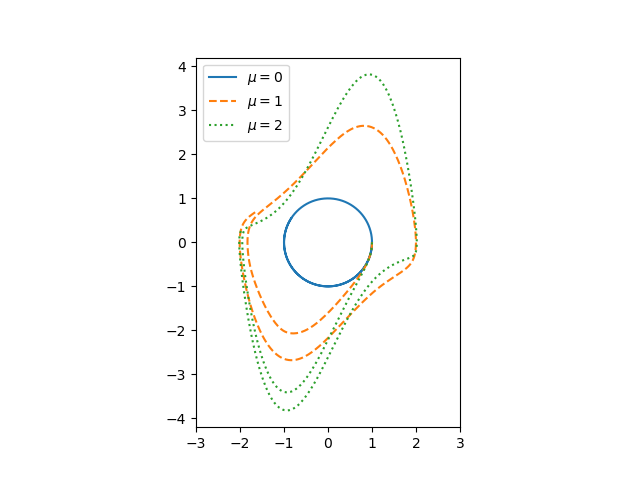

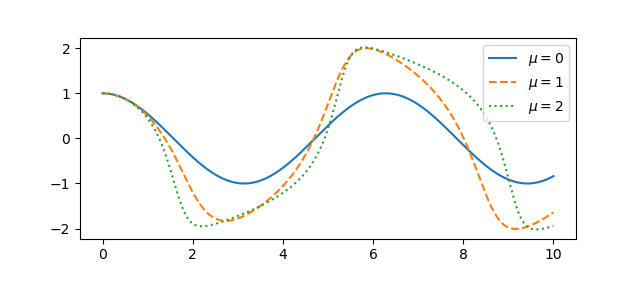

Quaternions and rotations

A point in three-dimensional space can be described with three numbers, but it’s often useful to think of the usual three coordinates as the vector part of a quaternion, a set of four numbers.

Suppose you have a point

a = (x, y, z)

and you want to rotate it by an angle θ around an axis given by a unit vector

b = (u, v, w).

You can compute the rotation by associating the point a with the quaternion

p = (0, x, y, z)

and the axis b with the quaternion

q = (cos(θ/2), sin(θ/2) u, sin(θ/2) v, sin(θ/2) w)

The image of a is then given by the quaternion

q p q⁻¹.

This quaternion will have zero real part, and so the Euclidean coordinates are given by the vector part, the last three components.

Of course the product above is a quaternion product, which is not commutative. That’s why the q and the q⁻¹ don’t cancel out.
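The recipe above can be sketched in a few lines of Python. The function names `qmul` and `rotate` are my own, quaternions are stored as plain (real, i, j, k) tuples, and the code assumes the axis is already a unit vector, so that the inverse of q is simply its conjugate.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions stored as (real, i, j, k) tuples."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def rotate(point, axis, theta):
    """Rotate point by angle theta about a unit-vector axis via q p q^-1.

    Returns the full quaternion; the real part should be (nearly) zero
    and the last three components are the rotated point.
    """
    x, y, z = point
    u, v, w = axis  # assumed to have unit length
    s = math.sin(theta / 2)
    q = (math.cos(theta / 2), s * u, s * v, s * w)
    q_inv = (q[0], -q[1], -q[2], -q[3])  # conjugate equals inverse for unit q
    p = (0.0, x, y, z)
    return qmul(qmul(q, p), q_inv)


# Rotate the point (1, 0, 0) a quarter turn about the z-axis.
image = rotate((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), math.pi / 2)
print(image)

# Non-commutativity: i*j and j*i differ by a sign.
i, j = (0, 1, 0, 0), (0, 0, 1, 0)
print(qmul(i, j), qmul(j, i))
```

The first result should be close to (0, 0, 1, 0), i.e. the point (0, 1, 0), and the last line shows that swapping the order of the factors changes the product.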

Using quaternions for rotations has several advantages over using rotation matrices. First, the quaternion representation is more compact, describing a rotation with four real numbers rather than nine. Second, the quaternion calculation can be better behaved numerically. And compared to the even more compact representation by three Euler angles, the quaternion approach avoids gimbal lock, a sort of singularity in representing rotations.

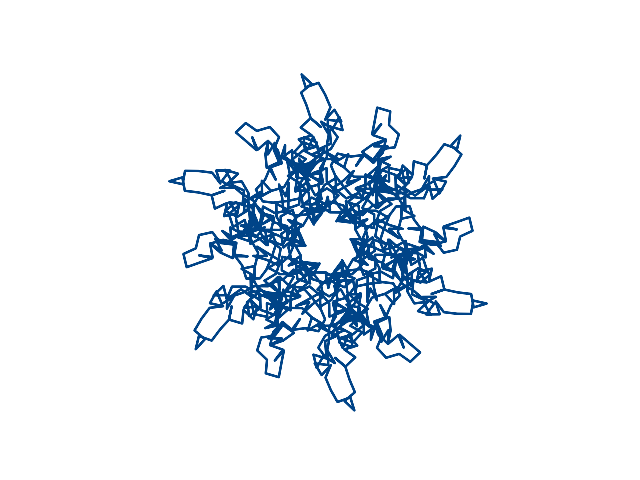

Projective planes

In applications of algebra, such as elliptic curve cryptography, you often need to add “points at infinity” to make things work out. To formalize this, you add an extra coordinate. So while an elliptic curve is usually presented as an equation such as

y² = x³ + ax + b,

it’s more formally an equation in three variables

y²z = x³ + axz² + bz³.

Points in the projective plane have coordinates (x, y, z) where points are considered equivalent if they differ by a non-zero multiple, i.e. (x, y, z) is considered the same point as (λx, λy, λz) for any non-zero λ.

You can often ignore the z, choosing λ so that the z coordinate is 1. But when you need to work with the point at infinity in a uniform way, you bring out the full coordinates. Now the “point at infinity” is not some mysterious entity, but simply the point (0, 1, 0).
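To make this concrete, here is a small Python sketch using exact integer arithmetic. The curve y² = x³ + 2x + 1 and the helper names `on_curve`, `same_point`, and `to_affine` are chosen just for illustration.

```python
from fractions import Fraction


def on_curve(P, a, b):
    """Check the homogeneous equation y^2 z = x^3 + a x z^2 + b z^3."""
    x, y, z = P
    return y * y * z == x**3 + a * x * z * z + b * z**3


def same_point(P, Q):
    """Nonzero triples name the same projective point iff they are proportional."""
    (x1, y1, z1), (x2, y2, z2) = P, Q
    return x1 * y2 == x2 * y1 and x1 * z2 == x2 * z1 and y1 * z2 == y2 * z1


def to_affine(P):
    """Scale so that z = 1 and return (x, y); return None for a point with z = 0."""
    x, y, z = P
    if z == 0:
        return None
    return (Fraction(x, z), Fraction(y, z))


a, b = 2, 1                            # illustrative curve y^2 = x^3 + 2x + 1
print(on_curve((1, 2, 1), a, b))       # the affine point (1, 2)
print(on_curve((3, 6, 3), a, b))       # the same point, scaled by 3
print(same_point((1, 2, 1), (3, 6, 3)))
print(on_curve((0, 1, 0), a, b))       # the point at infinity
print(to_affine((3, 6, 3)), to_affine((0, 1, 0)))
```

Every check prints `True`: the point at infinity satisfies the same equation as every other point on the curve. Only the conversion back to two coordinates has to treat it as a special case.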

Common themes

Projective coordinates, like barycentric coordinates, introduce symmetry. With the addition of an extra coordinate, the three coordinates all behave similarly, with no reason to distinguish any coordinate as special. And as with quaternion rotations, projective coordinates make singularities go away, which is a consequence of symmetry.
