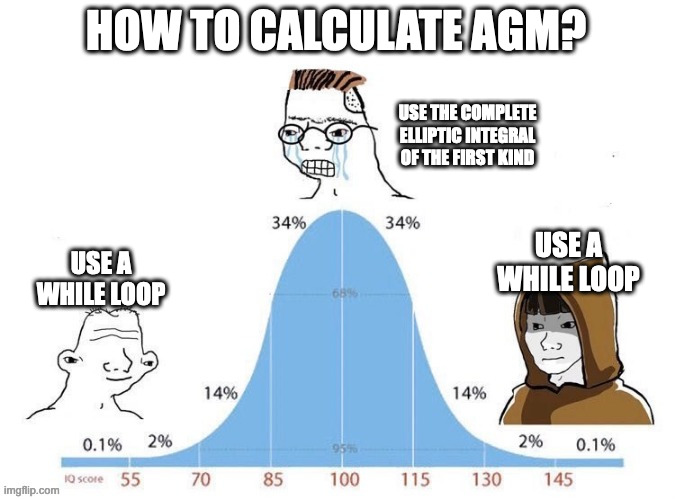

This post is a follow-on to a discussion that started on Twitter yesterday. This tweet must have resonated with a lot of people because it’s had over 250,000 views so far.

You almost have to study advanced math to solve basic math problems. Sometimes a high school student can solve a real world problem that only requires high school math, but usually not.

There are many reasons for this. For one thing, formulating problems is a higher-level skill than solving them. Homework problems have been formulated for you. They have also been rigged to avoid complications. This is true at all levels, from elementary school to graduate school.

A college student tutoring a high school student might notice that homework problems have been crafted to always have whole number solutions. The college student might not realize how his own homework problems have been rigged analogously. Calculus homework problems won’t avoid fractions, but they still avoid problems that don’t have tidy solutions [1].

When I taught calculus, I looked around for homework problems that were realistic applications, had closed-form solutions, and could be worked in a reasonable amount of time. There aren’t many. And the few problems that approximately satisfy these three criteria will be duplicated across many textbooks. I remember, for example, finding a problem involving calculating the mass of a star that I thought was a good exercise. Then as I looked through a stack of calculus texts I saw that the same homework problem was in most if not all the textbooks.

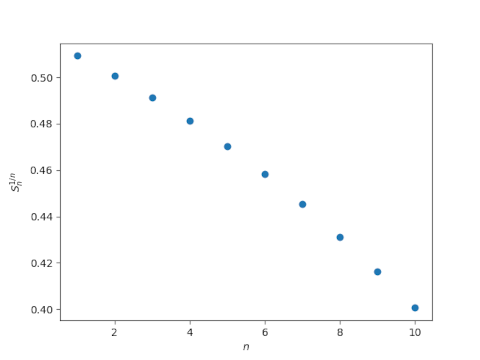

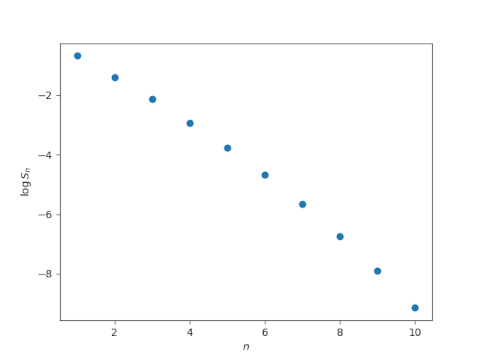

But it doesn’t stop there. In graduate school, homework problems are still crafted to avoid difficulties. When you see a problem like this one, it’s not obvious that the problem has been rigged because the solution is complicated. It may seem that you’re able to solve the problem because of the power of the techniques used, but that’s not the whole story. Tweak any of the coefficients and things may go from complicated to impossible.

It takes advanced math to solve basic math problems that haven’t been rigged, or to know how to do your own rigging. By doing your own rigging, I mean looking for justifiable ways to change the problem you need to solve, i.e. to make good approximations.

For example, a freshman physics class will derive the equation of a pendulum as

y″ + sin(y) = 0

but then approximate sin(y) as y, changing the equation to

y″ + y = 0.

That makes things much easier, but is it justifiable? Why is that OK? And when is it OK? Because it’s not always.

The approximations made in a freshman physics class cannot be critiqued using freshman physics. Working with the un-rigged problem, i.e. keeping the sin(y) term, and understanding when you don’t have to, are both beyond the scope of a freshman course.
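One way to get a feel for when the approximation holds is to solve both equations numerically and compare. The following is a minimal sketch, not a rigorous error analysis. It assumes the pendulum is released from rest at an initial angle `y0` (in radians), and the helper names `trajectory` and `relative_gap`, the fixed-step RK4 integrator, the step size, and the 10-unit time window are all choices made here for illustration.

```python
import math

def trajectory(accel, y0, t_end=10.0, dt=0.001):
    """Integrate y'' = accel(y), y(0) = y0, y'(0) = 0 with classical RK4.

    Returns the list of positions at each time step.
    """
    y, v = y0, 0.0
    positions = [y]
    for _ in range(int(round(t_end / dt))):
        k1y, k1v = v, accel(y)
        k2y, k2v = v + 0.5 * dt * k1v, accel(y + 0.5 * dt * k1y)
        k3y, k3v = v + 0.5 * dt * k2v, accel(y + 0.5 * dt * k2y)
        k4y, k4v = v + dt * k3v, accel(y + dt * k3y)
        y += dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        positions.append(y)
    return positions

def relative_gap(y0, t_end=10.0):
    """Largest gap between the two solutions, as a fraction of y0."""
    full = trajectory(lambda y: -math.sin(y), y0, t_end)  # y'' + sin(y) = 0
    linear = trajectory(lambda y: -y, y0, t_end)          # y'' + y = 0
    return max(abs(a - b) for a, b in zip(full, linear)) / y0

if __name__ == "__main__":
    # Initial angles in radians: small, moderate, large.
    for y0 in (0.1, 0.5, 2.0):
        print(f"y0 = {y0}: relative gap = {relative_gap(y0):.4f}")
```

For a small initial angle the two solutions stay close over this window; for a large one they drift out of phase and the linearized solution stops resembling the real one. The gap also grows with the length of the time window, so "small enough" depends on how long you need the approximation to hold.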

Why can we ignore friction in problem 5 but not in problem 12? Why can we ignore the mass of the pulley in problem 14 but not in problem 21? These are questions that come up in a freshman class, but they’re not freshman-level questions.

***

[1] This can be misleading. Students often say “My answer is complicated; I must have made a mistake.” This is a false statement about mathematics, but it’s a true statement about pedagogy. Problems that haven’t been rigged to have simple solutions often have complicated solutions. But since homework problems are usually rigged, it is true that a complicated result is reason to suspect an error.