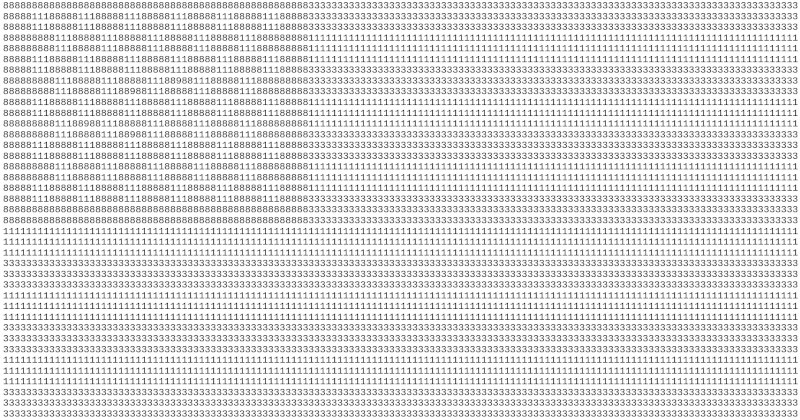

In the process of writing the previous post, I wanted to confirm that the number in the post

really is prime. This was useful in debugging my manual conversion of the image to text: errors did not result in a prime number. For example, I didn’t see the 9’s in the image at first, and I didn’t get a prime number until I changed four of the 8’s to 9’s.

But there was a problem along the way. Simply converting the string to an integer didn’t work. It produced the following error:

SyntaxError: Exceeds the limit (4300) for integer string conversion: value has 5382 digits; use sys.set_int_max_str_digits() to increase the limit – Consider hexadecimal for huge integer literals to avoid decimal conversion limits.

Note that the limitation is not on the size of a Python integer. The only limitation on the size of an integer is available memory. But there is a limitation on the size of string that can be converted to an integer.

The fix suggested in the error message didn’t work. But storing the number as several strings, i.e. each row of the image, and doing my own radix conversion did work.

from sympy import isprime

flaglines = [

"888888888888888888888888888888888888888888888888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888111888881118888811188888111888881118888888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888888888111888881118888811188888111888881118888888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888881118888811188888111888881118888811188888111888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888111888881118888811188988111888881118888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888111888881118898811188888111888881118888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888881118888811188888111888881118888811188888111888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888881118888811188888111888881118888811188888111888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888888888111889881118888811188888111888881118888888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888888888111888881118898811188888111888881118888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888111888881118888811188888111888881118888888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888888888111888881118888811188888111888881118888888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888881118888811188888111888881118888811188888111888881111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"888881118888811188888111888881118888811188888111888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888888888888888888888888888888888888888888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"888888888888888888888888888888888888888888888888888883333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

"333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333333",

]

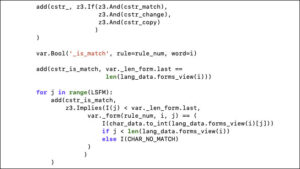

m = int(flaglines[0])

for i in range(1, len(flaglines)):

line = flaglines[i]

x = int(line)

m = m * 10**len(line) + x

print(isprime(n))